Chapter 7

Inclusivity Heuristics

The Inclusivity Heuristics are guidelines for designing technology to work well for a diversity of users. Using the heuristics to build inclusive technology is one way to practice inclusive design, which is “a methodology . . . that enables and draws on the full range of human diversity. Most importantly, this means including and learning from people with a range of perspectives” (Microsoft).

The Inclusivity Heuristics, in their current form, give advice for how to support five cognitive facets involved in how people interact with technology for the first time (Burnett et al., 2016).

- Attitude toward risk (risk-averse to risk-tolerant).

- Computer self-efficacy (low to high).

- Information processing style (comprehensive to selective).

- Learning style (process-oriented to mindful tinkering to tinkering).

- Motivations (task-motivated to motivated by tech interest).

A cognitive style is a value of a cognitive facet, that is, a position on that facet’s spectrum. For example, my cognitive styles are medium attitude toward risk, high computer self-efficacy, selective information processing style, a highly variable learning style, and task motivation.

The Inclusivity Heuristics help software practitioners support the full range of cognitive styles for each cognitive facet.

7.1 Background

The Inclusivity Heuristics, also called the Cognitive Style Heuristics or the GenderMag Heuristics (Burnett et al., 2021), were developed by human-computer interaction researchers at Oregon State University as part of the GenderMag Project. The heuristics are grounded in more than 40 publications about gender differences in how people use technology. In the future, the heuristics may expand to include research about other diversity dimensions, such as socioeconomic diversity (Hu et al., 2021) and age diversity (McIntosh et al., 2021).

Heuristics, such as the Inclusivity Heuristics and Nielsen’s Heuristics (Nielsen, 1994), are meant to be used within a usability inspection method called heuristic evaluation (Nielsen & Molich, 1990). In a heuristic evaluation, multiple evaluators independently check whether a technology design follows the heuristics. They make note of any issues and compare results. The output is a combined set of usability issues.
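The compare-and-combine step of a heuristic evaluation can be illustrated with a minimal Python sketch. The `Issue` structure, the `combine_findings` function, the evaluator names, and the sample issues are all hypothetical and chosen only for illustration; real evaluations usually record richer notes, such as severity and screenshots.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Issue:
    """One usability issue noted by an evaluator (hypothetical structure)."""
    heuristic: int  # number of the heuristic the design fails to follow
    location: str   # where in the design the issue appears


def combine_findings(findings_by_evaluator):
    """Merge independently collected issues into one de-duplicated report.

    Returns a dict that maps each distinct issue to the sorted list of
    evaluators who reported it.
    """
    combined = {}
    for evaluator, issues in findings_by_evaluator.items():
        for issue in issues:
            combined.setdefault(issue, set()).add(evaluator)
    return {issue: sorted(names) for issue, names in combined.items()}


# Each evaluator inspects the design alone; the results are compared afterward.
findings = {
    "evaluator_a": [Issue(2, "install dialog"), Issue(5, "settings page")],
    "evaluator_b": [Issue(2, "install dialog")],
    "evaluator_c": [Issue(5, "settings page"), Issue(6, "signup form")],
}

report = combine_findings(findings)
for issue, evaluators in report.items():
    print(f"Heuristic #{issue.heuristic} at {issue.location}: {', '.join(evaluators)}")
```

Keeping track of who reported each issue is a design choice in this sketch: issues found by several evaluators independently may deserve attention first, while issues found by only one evaluator show why multiple evaluators are used.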

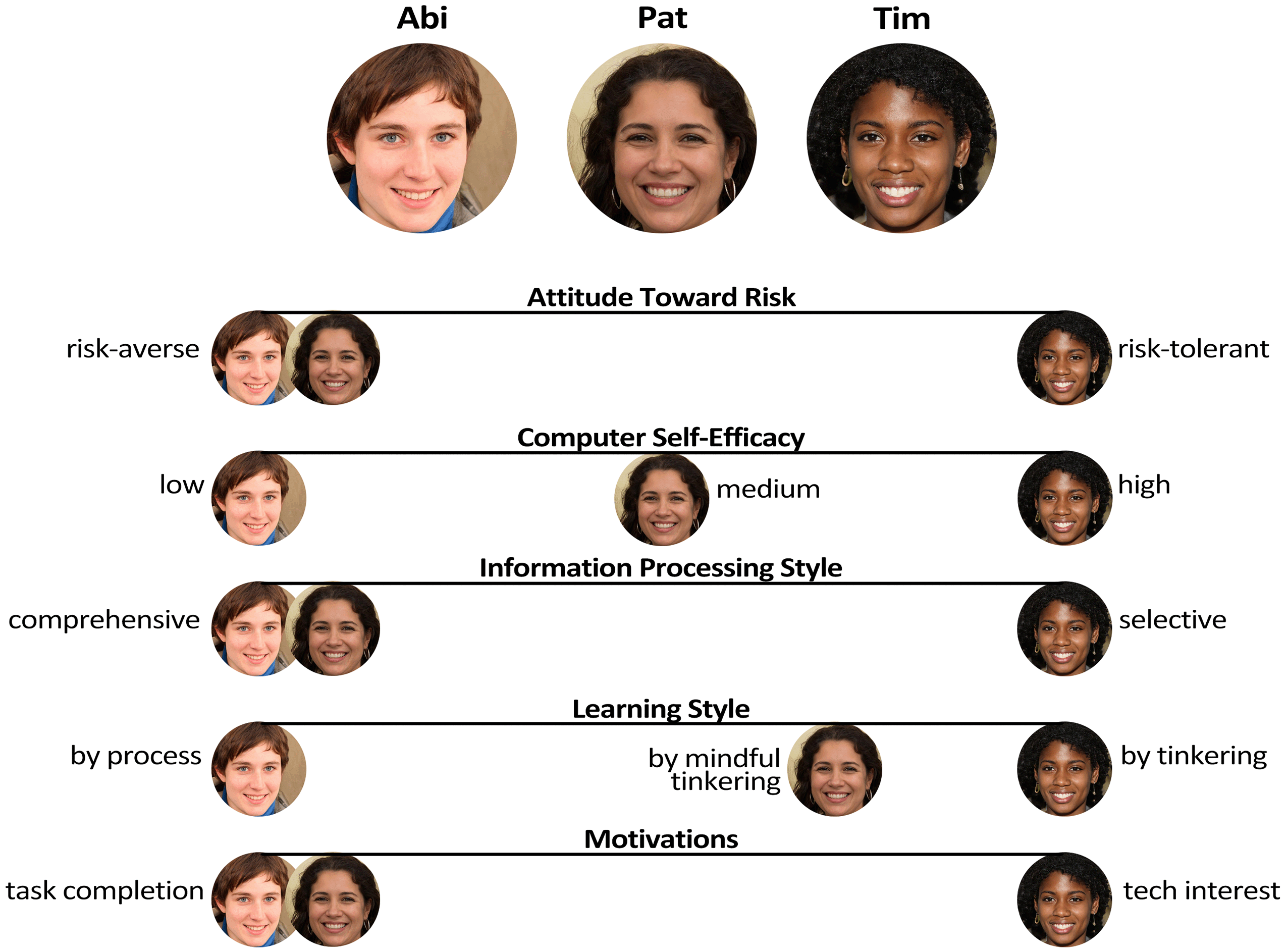

7.2 Inclusivity Heuristics Personas

A unique characteristic of the Inclusivity Heuristics is that they are framed from the perspective of supporting three personas: Abi, Pat, and Tim. A persona is a representation of a user or a group of users. Abi, Pat, and Tim each have different cognitive styles. Figure 7.1 lists each persona’s cognitive styles.

Note. The personas can have any gender and picture.
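For readers who want to work with the personas in code, for example to tag evaluation notes by persona, the following is a minimal Python sketch. The class name, field names, and the `personas_with` helper are hypothetical. The facet values are inferred from the persona descriptions in Section 7.3, and Figure 7.1 remains the authoritative listing.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CognitiveStyles:
    """One value per cognitive facet (field names are illustrative)."""
    attitude_toward_risk: str          # "risk-averse" or "risk-tolerant"
    computer_self_efficacy: str        # "low", "medium", or "high"
    information_processing_style: str  # "comprehensive" or "selective"
    learning_style: str                # "process-oriented", "mindful tinkering", or "tinkering"
    motivations: str                   # "task" or "tech interest"


# Values inferred from the heuristic descriptions in this chapter.
PERSONAS = {
    "Abi": CognitiveStyles("risk-averse", "low", "comprehensive", "process-oriented", "task"),
    "Pat": CognitiveStyles("risk-averse", "medium", "comprehensive", "mindful tinkering", "task"),
    "Tim": CognitiveStyles("risk-tolerant", "high", "selective", "tinkering", "tech interest"),
}


def personas_with(facet, value):
    """Return the names of personas whose given facet has the given value."""
    return [name for name, styles in PERSONAS.items() if getattr(styles, facet) == value]


print(personas_with("attitude_toward_risk", "risk-averse"))  # ['Abi', 'Pat']
print(personas_with("learning_style", "tinkering"))          # ['Tim']
```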

7.3 The Inclusivity Heuristics

Each of the eight heuristics is listed and described below, with examples.

7.3.1 Heuristic #1 (of 8)

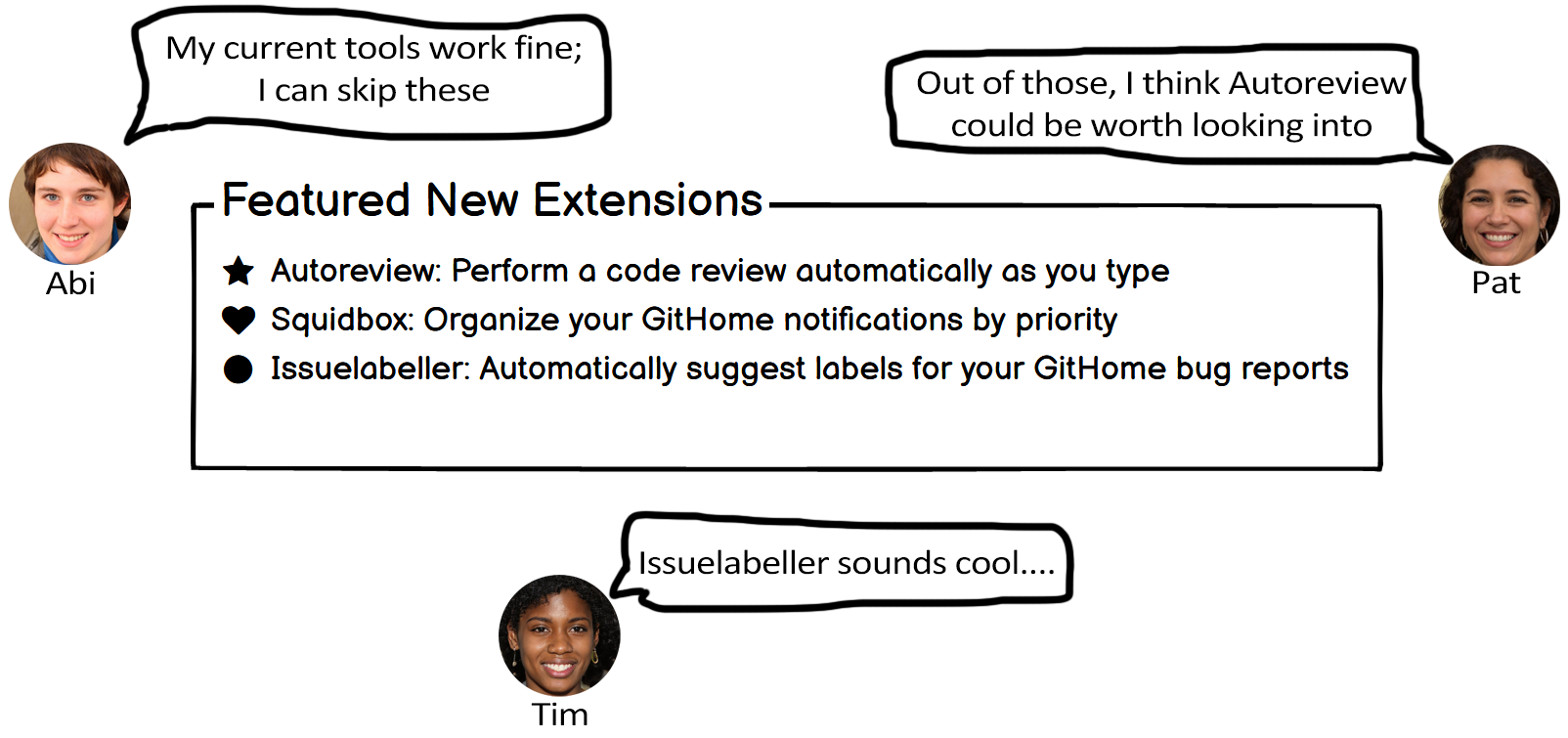

Explain (to Users) the Benefits of Using New and Existing Features

Abi and Pat have a pragmatic approach toward technology, using it only when necessary for their specific tasks. They have limited spare time and prefer to stick to familiar features, enabling them to maintain focus on the task at hand. Unless they can clearly understand how certain features will help them complete their tasks, they might not use them.

Abi is risk-averse toward technology. Abi tends to avoid features with unknown time costs and other risks.

Pat is similarly cautious about using new features but is open to trying them out to determine whether they’re relevant to completing the task.

In contrast, Tim is enthusiastic about discovering and exploring new, cutting-edge features. Moreover, Tim is willing to take risks and may use features without prior knowledge of their costs or even their exact functionality.

Figure 7.2 provides an example design that reflects this heuristic and how Abi, Pat, and Tim might react to it.

Note. The designs help Abi, Pat, and Tim decide whether they want to use the features. Abi and Pat seek features that help them with their task. Tim seeks features that are interesting.

7.3.2 Heuristic #2 (of 8)

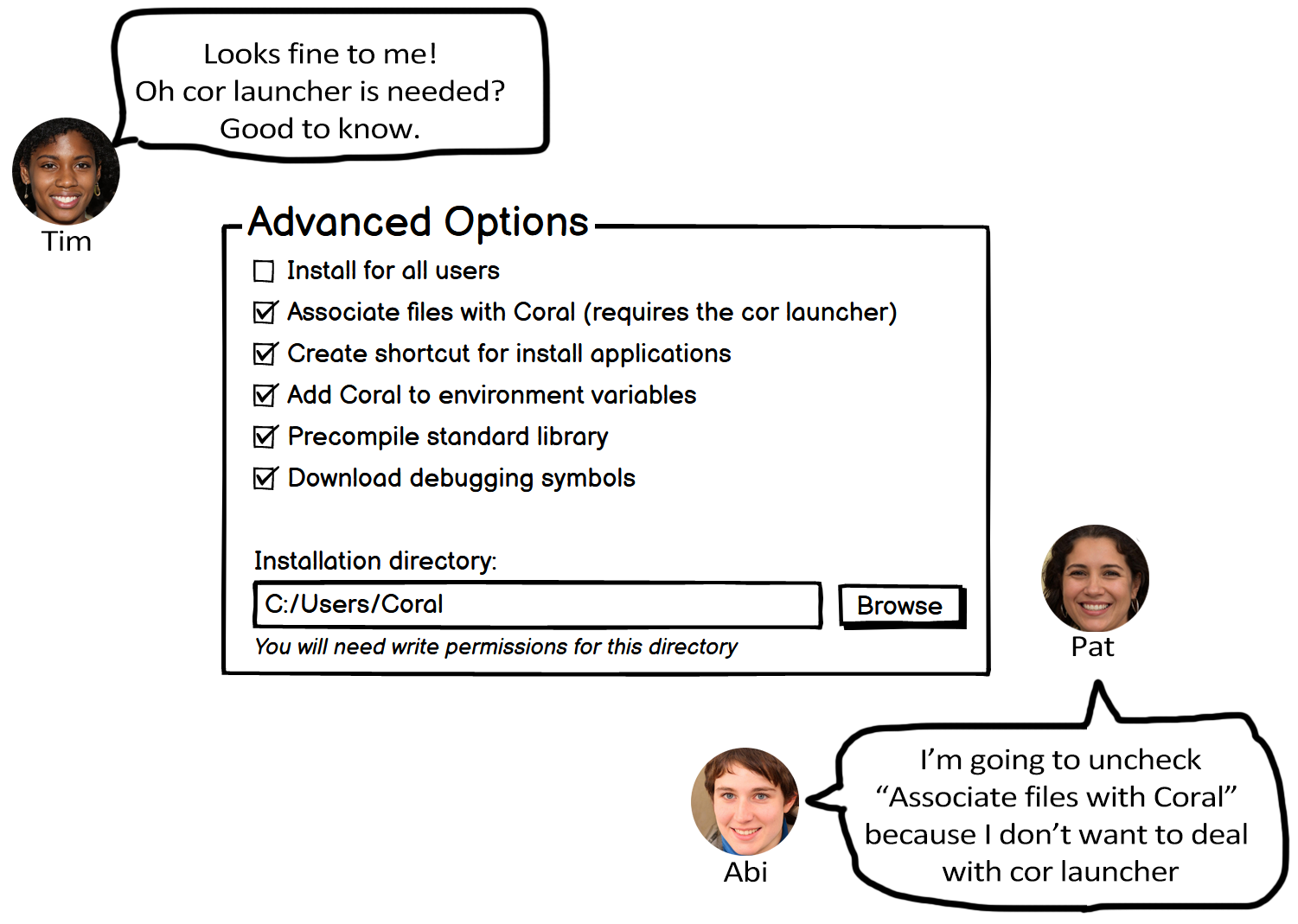

Explain (to Users) the Costs of Using New and Existing Features

Abi and Pat prefer to reduce risk by avoiding features that might require significant time and effort.

Tim is more open to taking risks and may be willing to invest additional time and effort into using features, even if they aren’t directly related to the current task.

Figure 7.3 provides an example design that reflects this heuristic and how Abi, Pat, and Tim might react to it.

Note. Indicating that “cor launcher” is required helps Abi and Pat decide whether they want to proceed or quit, and helps Tim understand what other technical configuration might be required.

7.3.3 Heuristic #3 (of 8)

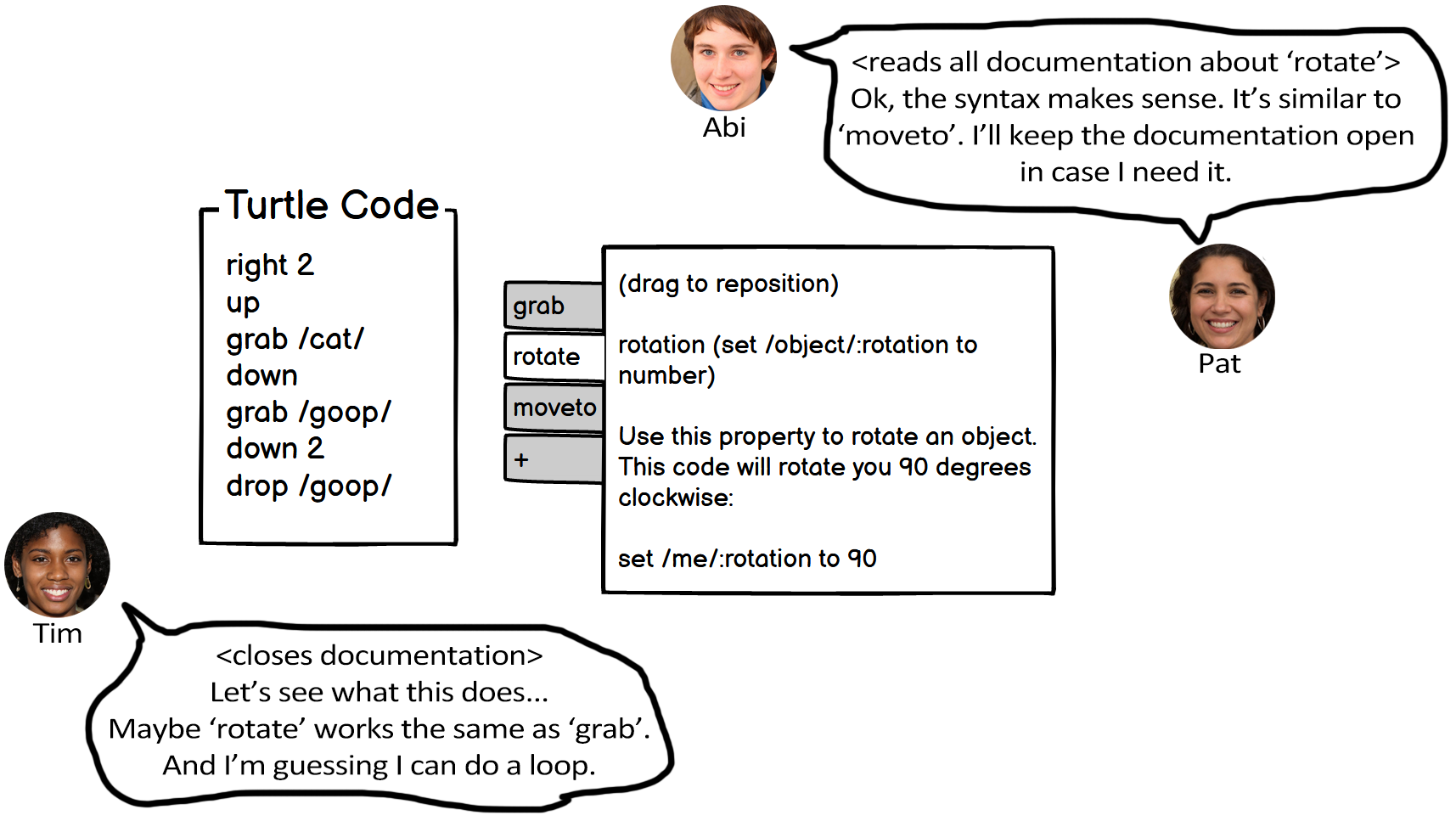

Let Users Gather as Much Information as They Want, and No More Than They Want

Abi and Pat approach decision-making by diligently gathering and thoroughly reviewing relevant information before acting.

Tim prefers to dive right into the first option that catches their interest and pursue it. They will backtrack if necessary.

Figure 7.4 provides an example design that reflects this heuristic and how Abi, Pat, and Tim might react to it.

Note. The design allows users to access documentation, and keep it open, while coding. This helps Abi and Pat fully understand the syntax before using it. Tim can choose to close the documentation.

7.3.4 Heuristic #4 (of 8)

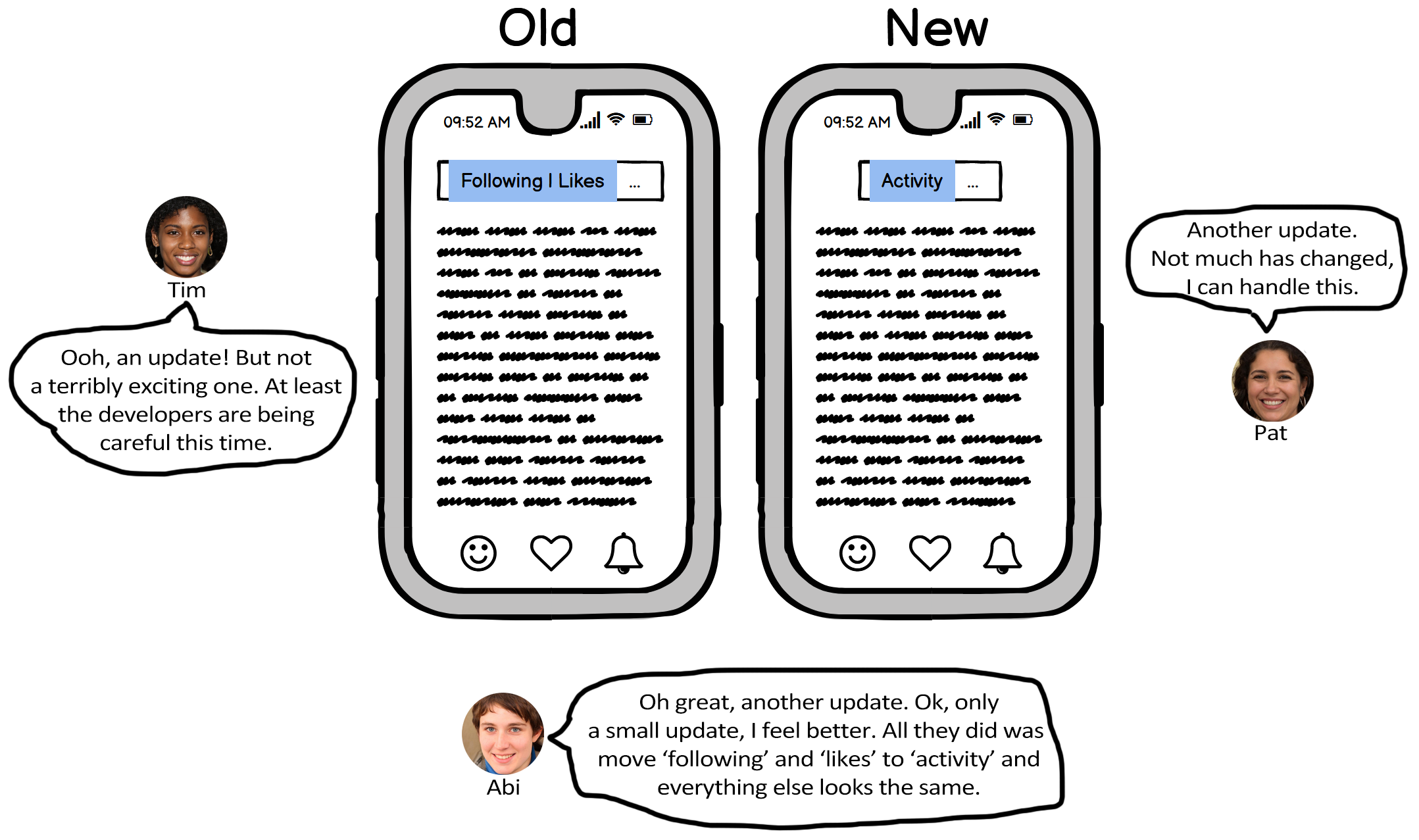

Keep Familiar Features Available

Abi, who has lower computer self-efficacy and is more risk-averse than Tim, tends toward self-blame and will stop using unfamiliar features if problems arise. Abi prefers to avoid potentially wasting time trying to make unfamiliar features work.

Pat, with moderate computer self-efficacy, takes a different approach. When problems arise with unfamiliar features, Pat will try alternative methods for a while. Being risk-averse, however, Pat prefers to rely on familiar features, which are more predictable in terms of expected outcomes and time required.

In contrast, Tim has higher computer self-efficacy and is more risk-tolerant than Abi. If problems arise with unfamiliar features, Tim tends to blame the technology itself and may invest considerable extra time exploring various workarounds to overcome the problem.

Figure 7.5 provides an example design that reflects this heuristic and how Abi, Pat, and Tim might react to it.

Note. The design update is minimal, keeping most features the same. This helps Abi and Pat detect the familiar features with which they’re comfortable, and helps Tim detect which features they have already explored.

7.3.5 Heuristic #5 (of 8)

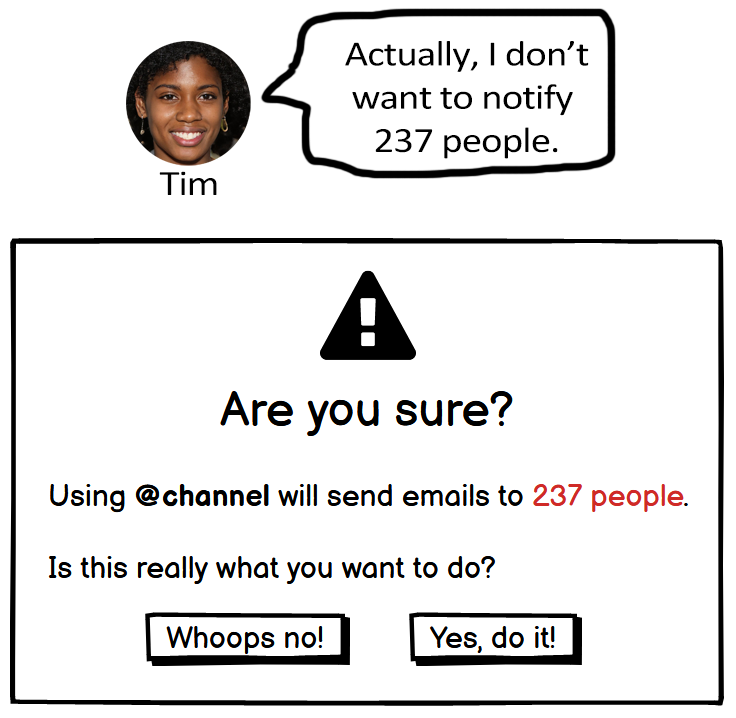

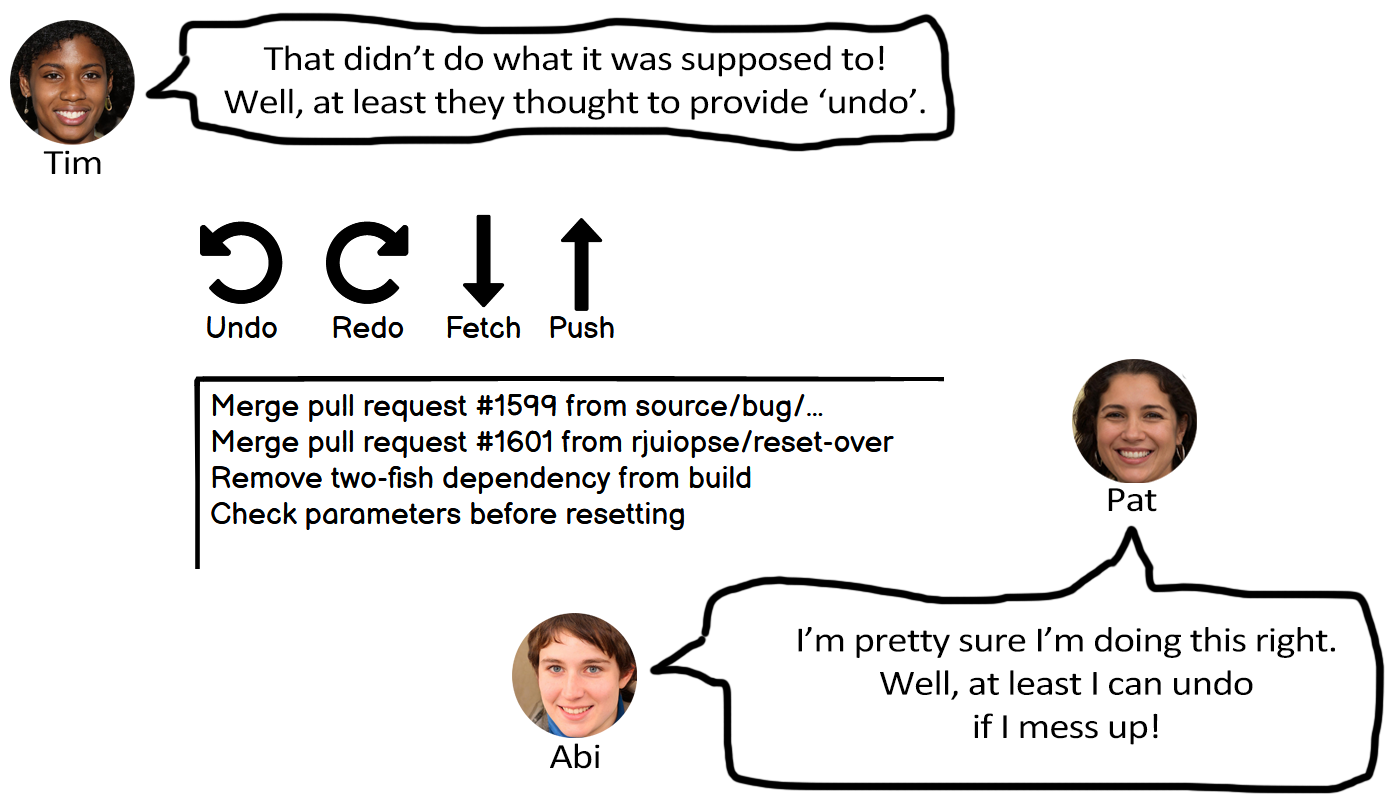

Make Undo/Redo and Backtracking Available

Abi and Pat, being risk-averse, tend to avoid actions that may be difficult to undo or reverse. In contrast, Tim, who is risk-tolerant, is willing to take actions that might turn out to be incorrect or need to be reversed.

Figure 7.6 provides an example design that reflects this heuristic and how Abi, Pat, and Tim might react to it.

Note. The design allows users to undo or redo their last action. This helps Abi and Pat feel assured that using the functionality is safe, and helps Tim backtrack in case they make a mistake.
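One common way to implement undo and redo is with two stacks. The sketch below is a minimal Python illustration, not the implementation behind the design in Figure 7.6. The `History` class and the sample document states are hypothetical, and the sketch supports multiple levels of undo rather than only the last action.

```python
class History:
    """Track states so that any change can be undone or redone."""

    def __init__(self, initial):
        self._current = initial
        self._undo_stack = []  # states that came before the current one
        self._redo_stack = []  # states that were undone and can be restored

    @property
    def current(self):
        return self._current

    def apply(self, new_state):
        """Record a new state; a fresh change clears the redo history."""
        self._undo_stack.append(self._current)
        self._current = new_state
        self._redo_stack.clear()

    def undo(self):
        """Step back one state; do nothing if there is nothing to undo."""
        if self._undo_stack:
            self._redo_stack.append(self._current)
            self._current = self._undo_stack.pop()
        return self._current

    def redo(self):
        """Restore the most recently undone state, if there is one."""
        if self._redo_stack:
            self._undo_stack.append(self._current)
            self._current = self._redo_stack.pop()
        return self._current


history = History("draft")
history.apply("draft + heading")
history.apply("draft + heading + image")
print(history.undo())  # draft + heading
print(history.redo())  # draft + heading + image
```

Because `undo` and `redo` quietly do nothing when their stack is empty, a user who presses either one too many times never ends up in an error state, which supports the sense of safety this heuristic aims for.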

7.3.6 Heuristic #6 (of 8)

Provide an Explicit Path through the Task

Abi, as a process-oriented learner, prefers to approach tasks in a systematic and step-by-step way.

Tim and Pat, however, who are more inclined toward tinkering as their learning style, prefer not to be confined by strict and predetermined processes. They thrive when they have the freedom to explore and experiment without rigid constraints.

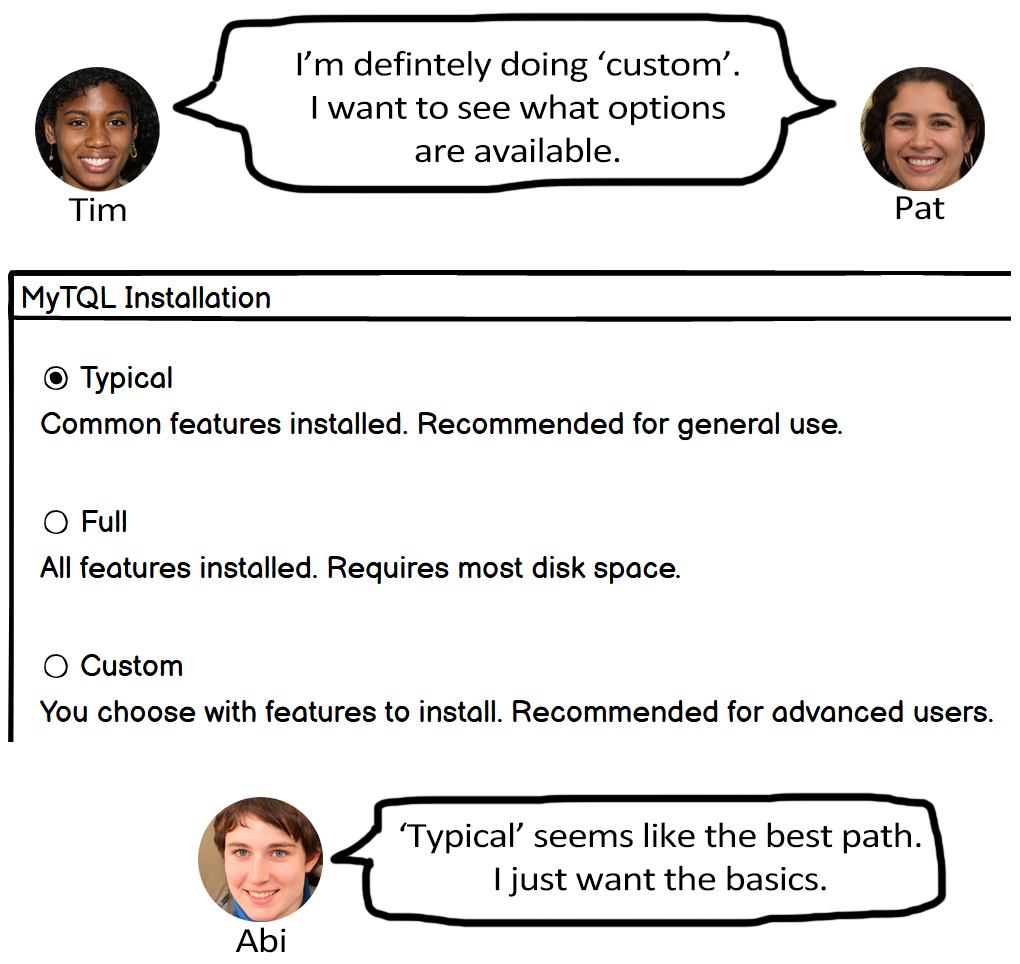

Figure 7.7 provides an example design that reflects this heuristic and how Abi, Pat, and Tim might react to it.

Note. The design gives users a clear choice between three paths. A structured process helps Abi feel comfortable. Pat and Tim can select “custom” if they’d like to tinker.

7.3.7 Heuristic #7 (of 8)

Provide Ways to Try Out Different Approaches

Abi, with lower computer self-efficacy compared to Tim, tends toward self-blame when problems arise in technology. As a result, Abi may stop using the tech altogether.

Pat, with moderate computer self-efficacy, takes a different approach. When problems arise while using technology, Pat will try alternative methods for a while.

In contrast, Tim, with higher computer self-efficacy than Abi, tends to blame the technology itself if a problem arises. Tim will then explore numerous workarounds in order to overcome the issue.

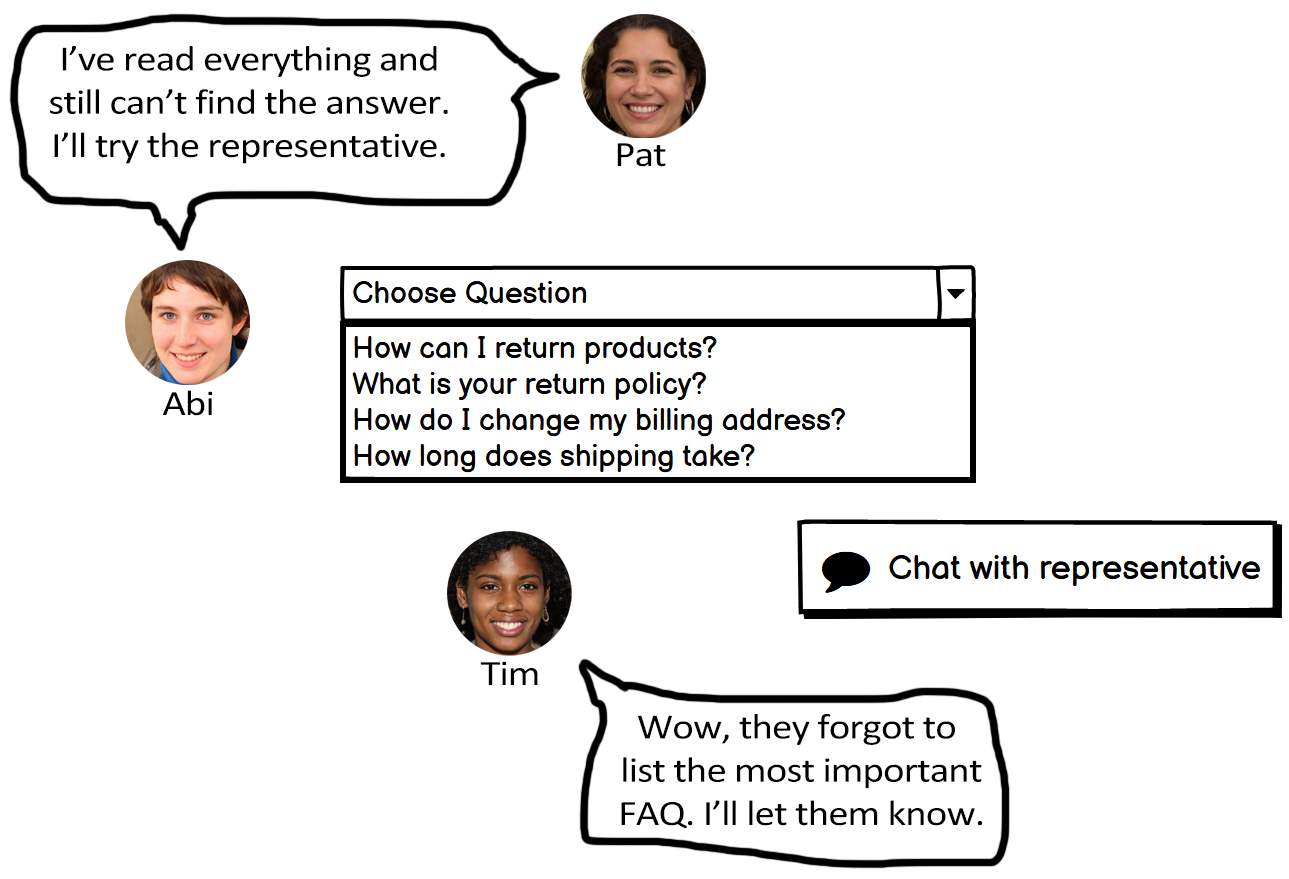

Figure 7.8 provides an example design that reflects this heuristic and how Abi, Pat, and Tim might react to it.

Note. The design allows users to chat with a person in case they can’t find their question on the list. This helps Abi and Pat because they know they have a backup plan. It also helps Tim, who might want to report the problem.

7.3.8 Heuristic #8 (of 8)

Encourage Tinkerers to Tinker Mindfully

Tim’s learning style revolves around tinkering, but at times Tim becomes excessively engrossed in tinkering, leading to long distractions.

Pat, in contrast, embraces a learning approach that involves actively experimenting with new features. Pat does so mindfully, however, taking the time to reflect on each step taken during the learning process.

Figure 7.9 provides an example design that reflects this heuristic and how Tim might react to it.

Note. The design helps Tim avoid making mistakes while tinkering.

7.4 Summary

The Inclusivity Heuristics are a set of eight software usability heuristics for evaluating and improving the usability of UIs across users with different cognitive styles.

- Explain (to Users) the Benefits of Using New and Existing Features

- Explain (to Users) the Costs of Using New and Existing Features

- Let Users Gather as Much Information as They Want, and No More Than They Want

- Keep Familiar Features Available

- Make Undo/Redo and Backtracking Available

- Provide an Explicit Path through the Task

- Provide Ways to Try Out Different Approaches

- Encourage Tinkerers to Tinker Mindfully

References

Burnett, M., Stumpf, S., Macbeth, J., Makri, S., Beckwith, L., Kwan, I., Peters, A., & Jernigan, W. (2016). GenderMag: A method for evaluating software’s gender inclusiveness. Interacting with Computers, 28(6), 760–787. https://doi.org/10.1093/iwc/iwv046

Burnett, M., Sarma, A., Hilderbrand, C., Steine-Hanson, Z., Mendez, C., Perdriau, C., Garcia, R., Hu, C., Letaw, L., Vellanki, A., & Garcia, H. (2021, March). Cognitive style heuristics (from the GenderMag Project). GenderMag.org. https://gendermag.org/Docs/Cognitive-Style-Heuristics-from-the-GenderMag-Project-2021-03-07-1537.pdf

GenderMag Project, Di, E., Noe-Guevara, G. J., Letaw, L., Alzugaray, M. J., Madsen, S., & Doddala, S. (2021, June). GenderMag facet and facet value definitions (cognitive styles). OERCommons.org. https://www.oercommons.org/courses/handout-gendermag-facet-and-facet-value-definitions-cognitive-styles

Hu, C., Perdriau, C., Mendez, C., Gao, C., Fallatah, A., & Burnett, M. (2021). Toward a socioeconomic-aware HCI: Five facets. arXiv preprint arXiv:2108.13477.

McIntosh, J., Du, X., Wu, Z., Truong, G., Ly, Q., How, R., Viswanathan, S., & Kanij, T. (2021). Evaluating age bias in e-commerce. Paper presented at the 2021 IEEE/ACM 13th International Workshop on Cooperative and Human Aspects of Software Engineering (CHASE), Madrid, Spain. https://doi.org/10.1109/chase52884.2021.00012

Microsoft. (n.d.). Microsoft inclusive design. https://inclusive.microsoft.design/

Nielsen, J. (1994). Heuristic evaluation. In Usability inspection methods. John Wiley & Sons.

Nielsen, J., & Molich, R. (1990). Heuristic evaluation of user interfaces. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems Empowering People—CHI ’90. Association for Computing Machinery. https://doi.org/10.1145/97243.97281

Glossary

Inclusive design: Designing with the goal of increasing usability for traditionally underserved user populations while also increasing usability for mainstream users.

Cognitive facets: Five aspects of users that affect how they solve problems in software: motivations, information processing style, computer self-efficacy, attitude toward risk, and learning style.

Attitude toward risk: (Cognitive facet.) How willing a person is to take chances while using technology (risk-tolerant vs. risk-averse).

Computer self-efficacy: (Cognitive facet.) A person’s confidence in their ability to use technology (low vs. medium vs. high).

Information processing style: (Cognitive facet.) How a person gathers data in relation to acting on those data (comprehensive vs. selective).

Learning style: (Cognitive facet.) How a person prefers to move through software (by tinkering vs. by mindful tinkering vs. by process).

Motivations: (Cognitive facet.) What keeps someone using technology (task completion vs. tech interest).

Cognitive style: A person's preferred way of processing (perceiving, organizing and analyzing) information using cognitive mechanisms and structures. They are assumed to be relatively stable. Whilst cognitive styles can influence a person's behavior, depending on task demands, other processing strategies may at times be employed – this is because they are only preferences. Source: Armstrong, S. J., Peterson, E. R., & Rayner, S. G. (2012). Understanding and defining cognitive style and learning style: A Delphi study in the context of educational psychology. Educational Studies, 38(4), 449–455. https://doi.org/10.1080/03055698.2011.643110

Cognitive facet value: A position on the spectrum of a cognitive facet. Also called a “cognitive style.”

Usability inspection method: Any approach in which an evaluator examines a user interface.

Heuristic evaluation: A usability inspection method in which evaluators independently examine a design to ensure it aligns with a predetermined set of heuristics, and then compare their findings. Source: Nielsen, J., & Molich, R. (1990). Heuristic evaluation of user interfaces. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems Empowering People—CHI ’90. Association for Computing Machinery. https://doi.org/10.1145/97243.97281

GenderMag: A method that uses a specialized cognitive walkthrough and customizable personas (Abi, Pat, and Tim) to identify and address gender-inclusivity issues in software, thus improving its overall gender inclusiveness.

Persona: A fictitious character created to represent a specific subset of users within a target audience. Personas are commonly used in marketing and user interface design to help teams focus on particular groups of users and customers.