21 Bioinformatics Knick-knacks and Regular Expressions

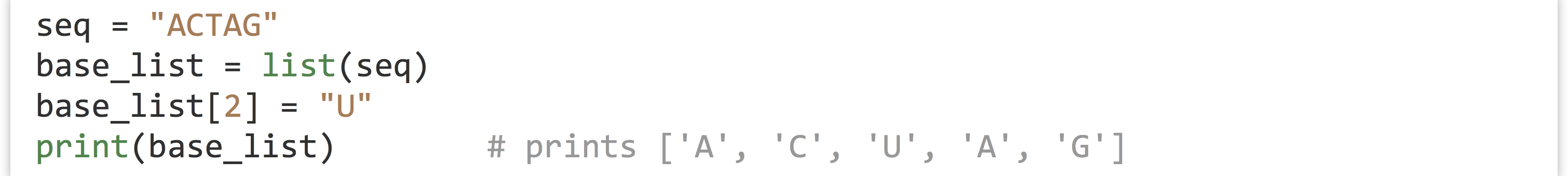

Chapter 14, “Elementary Data Types,” mentioned that strings in Python are immutable, meaning they can’t be modified after they have been created. For computational biology, this characteristic is often a hindrance. To get around this limitation, we frequently convert strings into lists of single-character strings, modify the lists as necessary, and convert the lists of characters back to strings when needed. Python comes built-in with a number of functions that operate on lists and strings useful for computational biology.

Converting a String into a List of Single-Character Strings

Remember that because Python strings are immutable, we can’t run something like

To convert a string into a list of single-character strings, we can pass the string as a parameter to the list() function (which, when called with no parameters, creates an empty list):

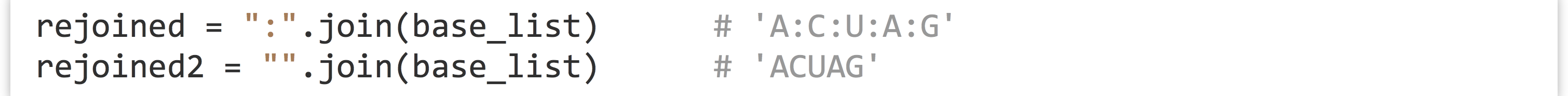

Converting a List of Strings into a String

If we happen to have a list of strings (such as the list of single-character strings above), we can join them into a single string using the .join() method of whatever string we wish to use as a separator for the output string.

The syntax seems a bit backward, "separator".join(list_variable), but it makes sense when considering what the method .-syntax says: it asks the string “separator" to produce a string given the input list list_variable. Because the output is of type string, it makes sense that we should ask a string to do this production.

Reverse a List

Sometimes we may want to reverse the order of a list. We can do this with the list’s .reverse() method which asks the list to reverse itself in place (but returns None).

As with the .sort() and .append() methods from chapter 15, “Collections and Looping, Part 1: Lists and for,” .reverse() returns None, so a line like base_list = base_list.reverse() would almost surely be a bug.

Reverse a String

There are two ways to reverse a string in Python: (1) first, convert it into a list of single-character strings, then reverse the list, then join it back into a string, or (2) use “extended slice syntax.” The former solution uses the methods described above, whereas the second method extends the [] slice syntax so that the brackets can contain values for [start:end:step], where start and end can be empty (to indicate the first and last indices of the string), and step can be -1 (to indicate stepping backward in the string).

Although the extended slice syntax is quite a bit faster and requires less typing, it is a bit more difficult to read. A search online for “python string reverse” would likely reveal this solution first.

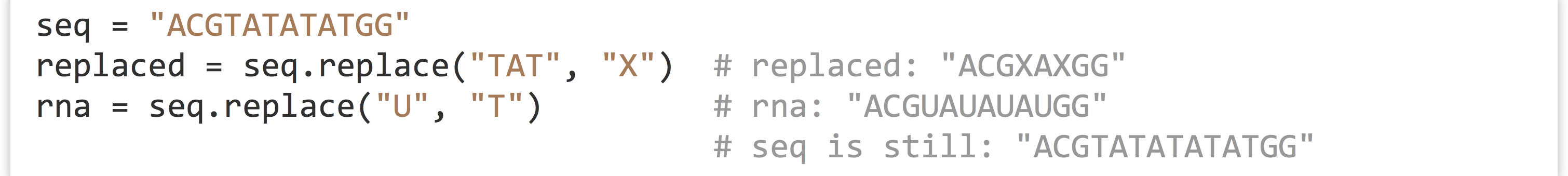

Simple Find and Replace in a String

We’ve already seen how to split a string into a list of strings with the .split() method. A similar method, .replace(), allows us to replace all matching substrings of a string with another string. Because strings are immutable, this method returns a modified copy of the original string.

Also because strings are immutable, the original data are left unchanged. Because a variable is simply a name we use to refer to some data, however, we can make it look like we’ve modified the original string by reassigning the seq variable to whatever is returned by the method.

Commands versus Queries

Why do some operations (like a list’s .sort() method) change the data in place but return None, while others (like a string’s .split() method or the len() function) return something but leave the original data alone? Why is it so rare to see operations that do both? The reason is that the designers of Python (usually) try to follow what is known as the principle of command-query separation, a philosophical principle in the design of programming languages that states that single operations should either modify data or return answers to queries, but not both.

The idea behind this principle is that upon reading code, it should be immediately obvious what it does, a feature that is easily achieved if each operation only has one thing it can do. When operations both change data and return an answer, there is a temptation to “code by side effect,” that is, to make use of simultaneous effects to minimize typing at the cost of clarity. Compared to many other languages, Python makes a stronger attempt to follow this principle.

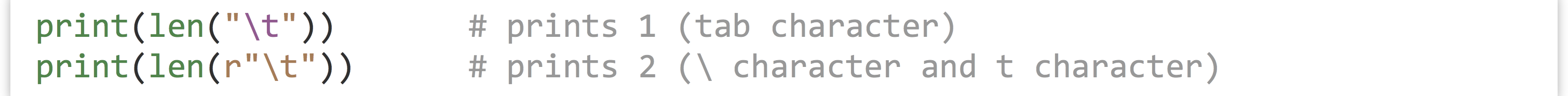

Regular Expressions

Regular expressions, common to many programming languages and even command line tools like sed, are syntax for matching sophisticated patterns in strings. The simplest patterns are just simple strings; for example, "ATG" is the pattern for a start codon. Because Python treats backslashes as special in strings (e.g., "\t" is not actually "\" followed by a "t", but rather a tab character), patterns for regular expressions in Python are usually expressed as “raw strings,” indicated by prefixing them with an r. So, r"ATG" is also the pattern for a start codon, but r"\t" is the regular expression for "\" and "t" rather than the tab character.

Python regular expression functionality is imported with the re module by using import re near the top of the script. There are many functions in the re module for working with strings and regular expression patterns, but we’ll cover just the three most important ones: (1) searching for a pattern in a string, (2) replacing a pattern in a string, and (3) splitting a string into a list of strings based on a pattern.

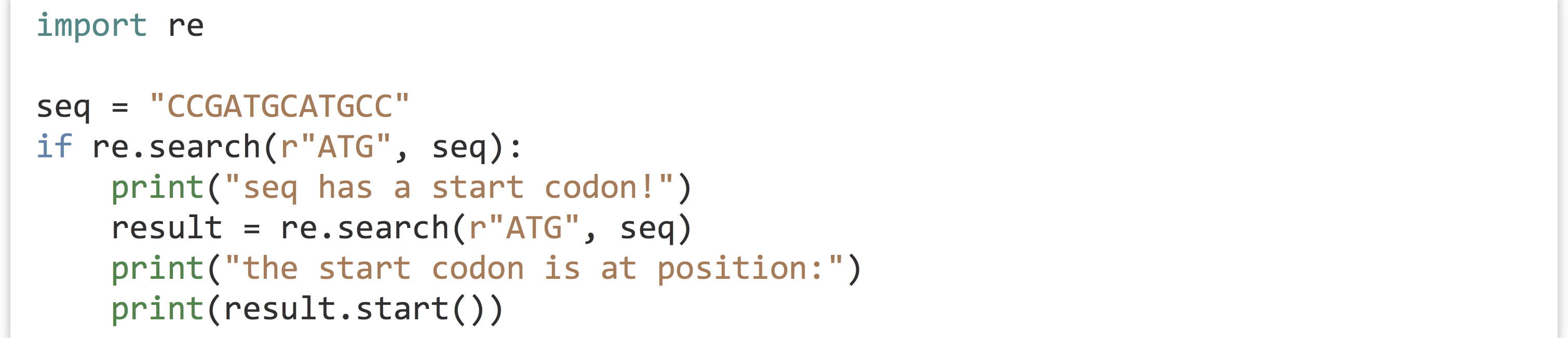

Searching for a Pattern in a String

Searching for a pattern is accomplished with the re.search() function. Most functions in the re module return a special SRE_Match data type, or a list of them (with their own set of methods), or None if no match was found. The if- statement (and while-loops) in Python treats None as false, so we can easily use re.search() in an if-statement. The result of a successful match can tell us the location of the match in the query using the .start() method:

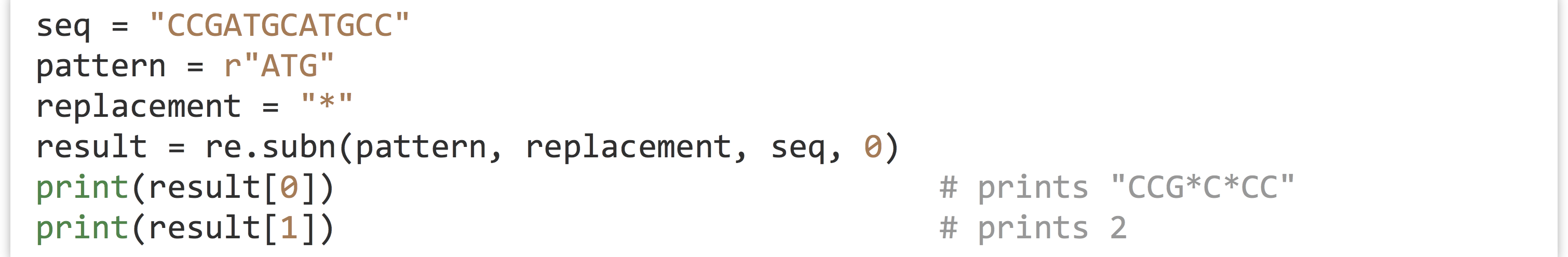

Replace a Pattern in a String

The re.subn() function can be used to search and replace a pattern within a string. It takes at least four important arguments (and some optional ones we won’t discuss here): (1) the pattern to search for, (2) the replacement string to replace matches with, (3) the string to look in, and (4) the maximum number of replacements to make (0 to replace all matches). This function returns a list[1] containing two elements: at index 0, the modified copy of the string, and at index 1, the number of replacements made.

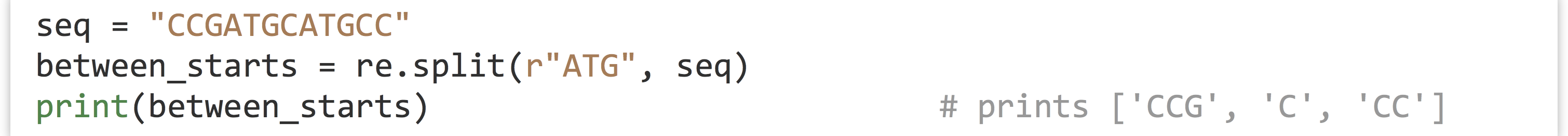

Split a String into a List of Strings Based on a Pattern

We’ve already seen that we can split a string based on simple strings with .split(). Being able to split on complex regular expressions is often useful as well, and the re.split() function provides this functionality.

We’ll leave it as an exercise for the reader to determine what would be output for a sequence where the matches are back to back, or where the matches overlap, as in re.split(r"ATA", "GCATATAGG").

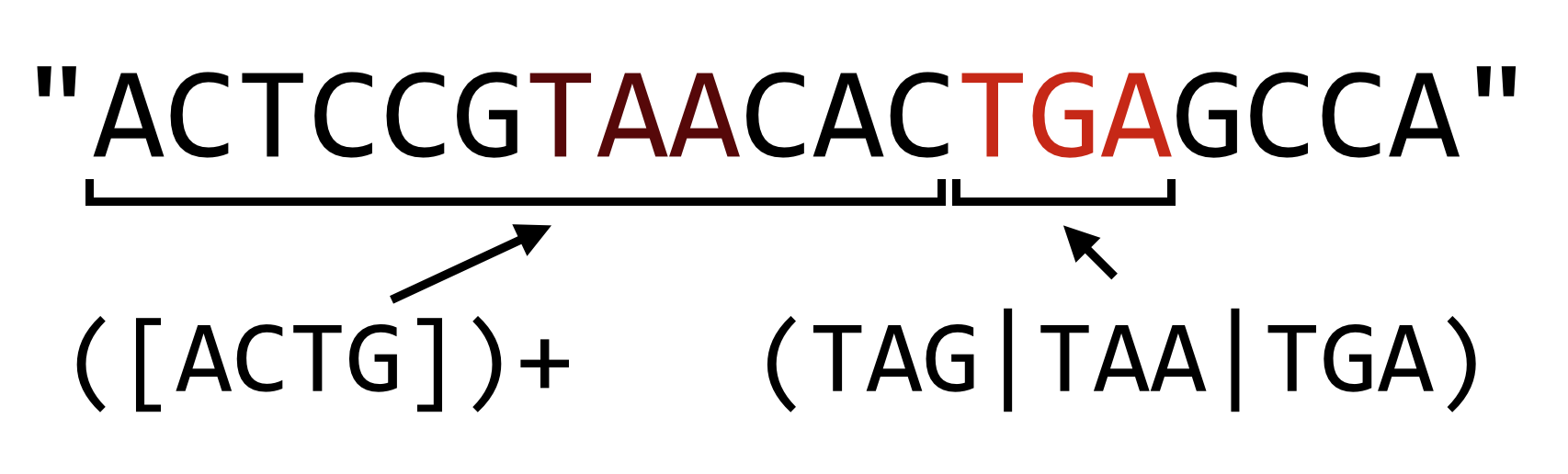

The Language of Regular Expressions, in Python

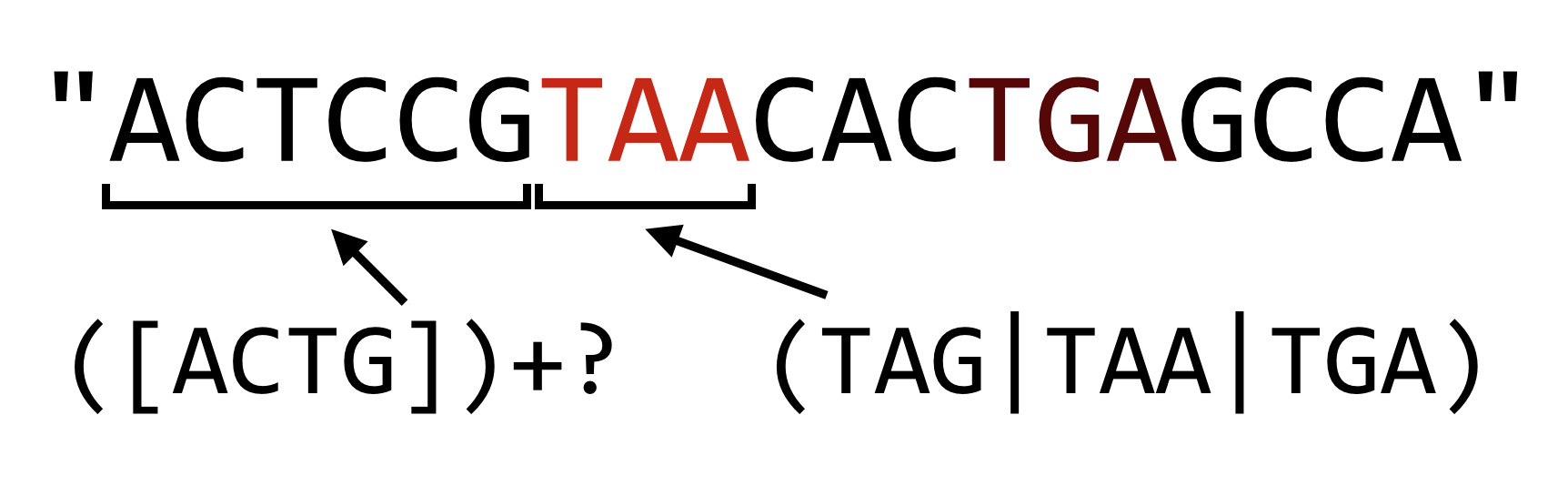

In chapter 11, “Patterns (Regular Expressions),” we covered regular expressions in some detail when discussing the command line regular expression engine sed. Regular expressions in Python work similarly: simple strings like r"ATG" match their expressions, dots match any single character (r"CC." matches any P codon), and brackets can be used for matching one of a set of characters (r"[ACTG]" matches one of a DNA sequence, and r"[A-Za-z0-9_]" is shorthand for “any alphanumeric character and the underscore”). Parentheses group patterns, and the plus sign modifies the previous group (or implied group) to match one or more times (* for zero or more), and vertical pipes | work as an “or,” as in r"([ACTG])+(TAG|TAA|TGA)", which will match any sequence of DNA terminated by a stop codon (TAG, TAA, or TGA). Rounding out the discussion from previous chapters, Python regular expressions also support curly brackets (e.g., r"(AT){10,100}" matches an "AT" repeated 10 to 100 times) and standard notation for the start and end of the string. (Note that r"^([ACTG])+$" matches a string of DNA and only DNA. For more detailed examples of these regular expression constructs, refer to chapter 11.)

The regular expression syntax of Python, however, does differ from the POSIX-extended syntax we discussed for sed, and in fact provides an extra-extended syntax known as “Perl-style” regular expressions. These support a number of sophisticated features, two of which are detailed here.

First, operators that specify that a match should be repeated—such as plus signs, curly brackets, and asterisks—are by default “greedy.” The same is true in the POSIX-extended syntax used by sed. In Python, however, we have the option of making the operator non-greedy, or more accurately, reluctant. Consider the pattern r"([ACTG])+(TAG|TAA|TGA)", which matches a DNA sequence terminated by a stop codon. The greedy part, ([ACTG])+, will consume all but the last stop codon, leaving as little of the remaining string as possible to make the rest of the pattern match.

In Python, if we want to make the plus sign reluctant, we can follow it with a question mark, which causes the match to leave as much as possible for later parts of the pattern.

The reluctance operator can also follow the asterisk and curly brackets, but beware: when used without following one of these three repetition operators, the question mark operator works as an “optional” operator (e.g., r"C(ATG)?C" matches "CC" and "CATGC").

The second major difference between Python (Perl-style) regular expressions and POSIX-extended regular expressions is in how they specify character classes. As mentioned above, a pattern like r"[A-Za-z0-9_]" is shorthand for “any alphanumeric and the underscore,” so a series of alphanumerics can be matched with r"[A-Za-z0-9_]+". In POSIX regular expressions, the character class A-Za-z0-9_ can be specified with [:alnum:], and the pattern would then need to be used like [[:alnum:]]+ in sed.

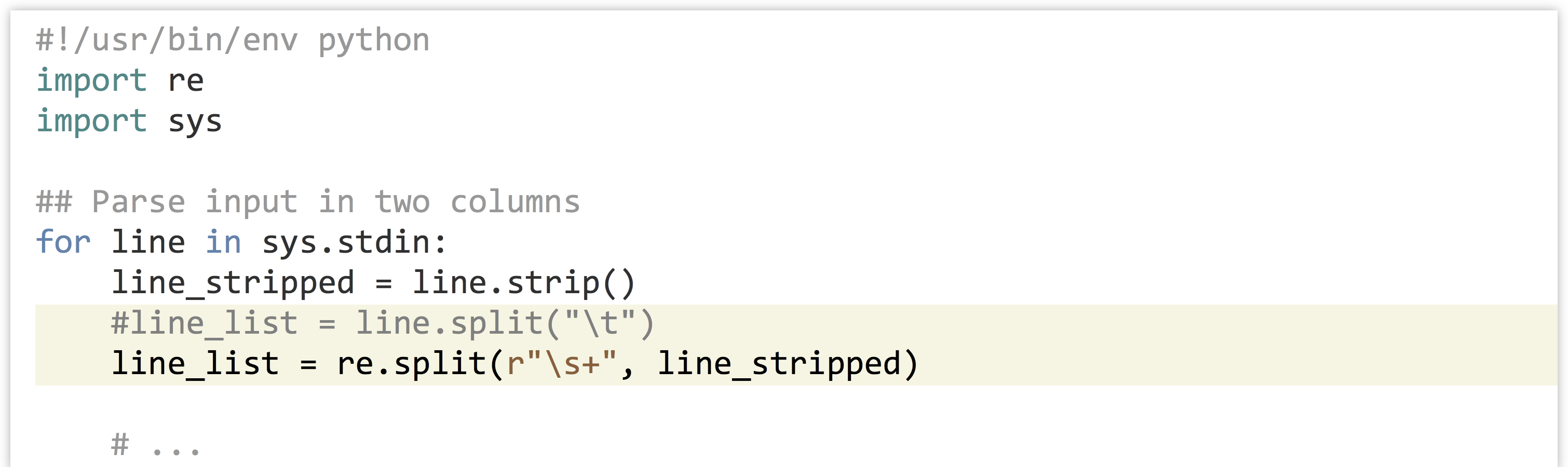

The Perl-style regular expression syntax used by Python introduced a number of shorthand codes for character classes. For example, \w is the shorthand for “any alphanumeric and the underscore,” so r"\w+" is the pattern for a series of these and is equivalent to r"[A-Za-z0-9_]+". The pattern \W matches any character that is not alphanumeric or the underscore, so r"\W+" matches a series of these (equivalent to r"[^A-Za-z0-9_]+"). Perhaps the most important shorthand class is \s, which matches a single whitespace character: a tab, space, or newline character (\S matches any non-whitespace character). This is most useful when parsing input, where it cannot be guaranteed that words are separated by tabs, spaces, or some combination thereof.

In the code above, we’ve replaced the split on "\t" that we’re used to with a re.split() on r"\s+", which will ensure that we correctly parse the pieces of the line even if they are separated with multiple tabs, spaces, or some combination thereof.

Counting Promoter Elements

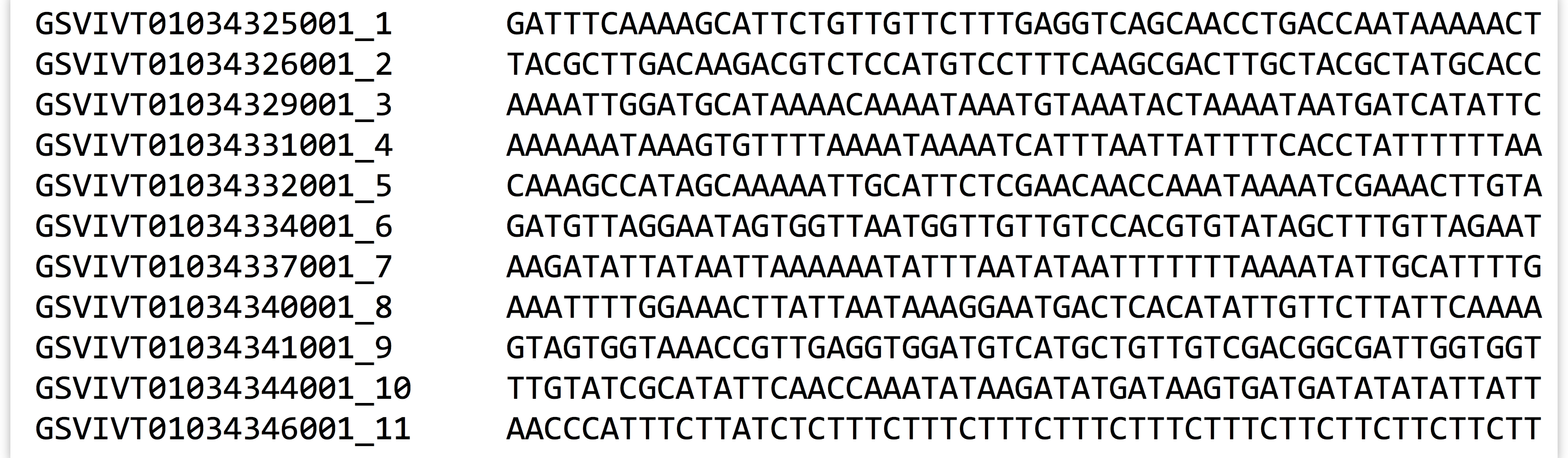

Consider the file grape_promoters.txt, which contains on each line 1000bp upstream of gene regions in the Vitis vinifera genome:

These columns are not separated by a tab character, but rather by a variable number of spaces.

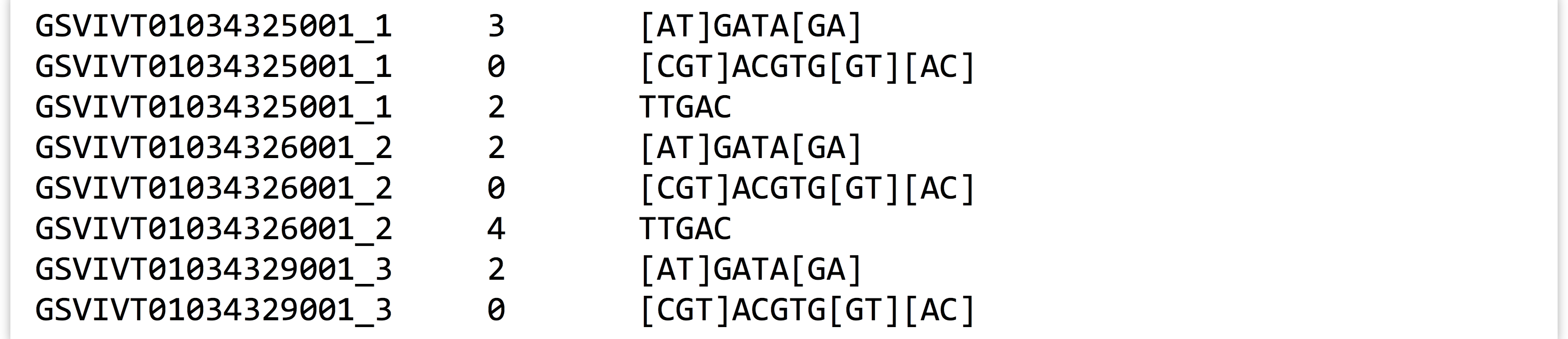

Promoter motifs are small DNA patterns nearby gene sequences to which the cellular machinery binds in order to help initiate the gene-transcription process. For example, the ABF protein binds to the DNA pattern "CACGTGGC" if it is near a gene in some plants. Some motifs are flexible and can be described by regular expressions; the GATA protein binds to any short DNA sequence matching the pattern "[AT]GATA[GA]". We wish to analyze the V. vinifera upstream regions above, and count for each the number of occurrences of the GATA motif. Our output should look like so:

After importing the io and re modules, we’ll first write a function count_motifs() that takes two parameters: first a sequence in which to count motif matches, and second a motif for which to search. There are a variety of ways we could code this function, but a simple solution will be to use re.split() to split the sequence on the motif regular expression—the number of motifs will then be the length of the result minus one (because if no motifs are found, no split will be done; if one is found, one split will be done, and so on).

With this function written and tested, we can open the file using io.open() and loop over each line, calling the count_motifs() function on each sequence with the motif r"[AT]GATA[GA]". Because the columns are separated with a variable number of spaces instead of single tab or space characters, we’ll use re.split() to split each line into pieces.

First, we’ll write and test the function that counts motifs and offers an example usage, where the number of matches should be two.

Next we can finish the program, using re.split() to process each line.

When run, this simple program (grape_count_gata.py) produces the output desired above. Because the output is sent to standard output, we can further filter the results through the sort and head command line utilities to identify which promoters are most likely to be found by GATA: ./grape_count_gata.py | sort -k2,2nr | head -n 10.

Exercises

- Write a function called

reverse_complement()that takes a DNA sequence parameter and returns its reverse complement (i.e., reverses the string, switches A’s and T’s, and switches G’s and C’s). - DNA sequences that are destined to be turned into proteins are “read” by the cellular machinery in one of six “reading frames” with lengths that are multiples of three. The first three are derived from the sequence itself, starting at index

0, index1, and index2; the first three reading frames of"ACTAGACG"are"ACTAGA","CTAGAC", and"TAGACG".To derive reading frames three, four, and five, we first reverse-complement the sequence (

"CGTCTAGT") and then produce frames from indices0,1, and2, resulting in"CGTCTA","GTCTAG", and"TCTAGT".Using the

reverse_complement()function from above (and potentially theget_windows()function from chapter 18, “Python Functions”), write aseq_to_six_frames()function that takes a DNA sequence as a parameter and returns a list of the six reading frames (as strings). - Write a function called

longest_non_stop()that takes a DNA sequence as a parameter and returns the longest amino acid sequence that can be produced from it. This is done by generating the six reading frames from the sequence, converting each to an amino acid sequence (probably using thedna_to_aa()function from chapter 20, “Dictionaries”), and then trimming the resulting sequences down to the first “stop” codon ("*") if there are any.In the DNA sequence

seq = "AGCTACTAGGAAGATAGACGATTAGAC", for example, the six translations areSY*EDRRLD(Frame 1),ATRKIDD*(Frame 2),LLGR*TIR(Frame 3),V*SSIFLVA(Frame 4),SNRLSS**(Frame 5), andLIVYLPSS(Frame 6). In order of lengths, the possibilities are thus"V","SY","LLGR","SNRLSS","ATRKIDD", and"LIVYLPSS". As a result,longest_non_stop(seq)should return"LIVYLPSS". - Modify the

grape_count_gata.pyprogram, calling itmotifs_count.pyso that it can read the file name and motifs to process (the latter as a comma-separated list) onsys.argv. When run as./motifs_count.py grape_promoters.txt [AT]GATA[GA],[CGT]ACGTG[GT][AC],TTGAC, the output should look like:

- Genotyping by sequencing (GBS) is a method for identifying genetic variants in a DNA sample by first splitting chromosomes in pseudorandom locations through the application of restriction enzymes and then sequencing short reads from the ends of the resulting fragments:

In the above, black lines represent input DNA sequence, red lines are cut sites, and green lines represent output from the sequencing machine. (Some simplifications have been made to the figure above for the sake of clarity.) The result is much higher depth of sequencing at fewer locations, which can be useful in a variety of contexts, including looking for genetic variants between different versions of the same chromosome (which requires many reads from the same location to statistically confirm those variants).

In the above, black lines represent input DNA sequence, red lines are cut sites, and green lines represent output from the sequencing machine. (Some simplifications have been made to the figure above for the sake of clarity.) The result is much higher depth of sequencing at fewer locations, which can be useful in a variety of contexts, including looking for genetic variants between different versions of the same chromosome (which requires many reads from the same location to statistically confirm those variants).

The restriction enzymes cut on the basis of patterns; for example, the ApeK1 enzyme cuts DNA molecules at the pattern

"GC[AT]GC". Based on the recognized patterns, some enzymes are “frequent cutters” and some are not; more frequent cutters sample more locations in a genome but sacrifice depth of sequencing. For this reason, researchers often want to know, given an enzyme pattern and genome sequence, the distribution of fragment lengths that will result.Write a function

gbs_cut()that takes a DNA sequence, a regular expression pattern, and a “bin size.” It should return a dictionary mapping sequence lengths, rounded down to the nearest bin size, to the number of fragments produced by the cutter in that bin. As an example,"AAAAGCAGCAAAAAAGCTGCAAGCAGCAAAAA"when processed with"GC[AT]GC"produces fragment sizes of 4, 6, 2, and 5. If grouped in a bin size of 3, the dictionary would have keys0,3, and6, and values1,1, and2.