Public Opinion

13 Does the U.S. Supreme Court Respond to Public Opinion?

Ben Johnson and Logan Strother

Does the Supreme Court respond to public opinion when it decides cases? This question has been the subject of much debate since the nation’s founding, and it is still important today. A nonresponsive Court that imposes its policy preferences on the nation raises concerns about the “countermajoritarian difficulty”—why should an unelected institution get to make binding decisions in a democracy (Bickel 1962)? On the other hand, a responsive Court may not sufficiently protect minority rights in the face of shifting majorities. This is one of the traditional defenses of judicial independence—the inverse of “responsiveness”: a court that is beholden to the public or the legislature cannot be reasonably expected to neutrally enforce the rule of law (Breyer 1995). Alexander Hamilton put it this way in Federalist, no. 78:

The complete independence of the courts of justice is peculiarly essential in a limited Constitution. By a limited Constitution, I understand one which contains certain specified exceptions to the legislative authority; such, for instance, as that it shall pass no bills of attainder, no ex-post-facto laws, and the like. Limitations of this kind can be preserved in practice no other way than through the medium of courts of justice, whose duty it must be to declare all acts contrary to the manifest tenor of the Constitution void. Without this, all the reservations of particular rights or privileges would amount to nothing.

(Federalist, no. 78)

This question of judicial responsiveness is especially important as the Supreme Court plays an increasingly prominent policy-making role in an era of intense partisan polarization (Lee 2015; Keck 2014).

The normative importance of this question aside, whether the Supreme Court responds to public opinion is an empirical question. Getting the answer to this empirical question right tells us which normative problem we face. For a long time, scholars have presented consistent empirical evidence that the Court does, in fact, track the public. In contrast, as we show below, these earlier studies consistently reported such a finding because they did not carefully inspect their data and drew erroneous conclusions based on a time-bound finding. There is actually no good empirical evidence that the Supreme Court systematically responds to the will of the people. While our analysis here certainly cannot (nor does it try to) prove that justices never care about the public’s preferences, we find no evidence to support the alternative proposition, that the Court consistently responds to public opinion.

Why Would the Supreme Court Respond to the Public?

Because the Framers viewed judicial independence as crucial to the rule of law, they wrote several structural protections for judicial independence into the Constitution. Supreme Court justices are appointed rather than elected, and they enjoy lifetime tenure (with the historically rare exception of removal via impeachment). Congress can alter the Court’s jurisdiction, effectively preventing it from deciding certain cases, but it cannot force the Court to decide cases in any particular way.

By all appearances, justices take this structural separation from the public quite seriously. When giving speeches and interviews, justices routinely argue that a fundamental purpose of the Supreme Court is to serve as a neutral decision-maker that can stand above the fray of partisan politics (Glennon and Strother 2019; Strother and Glennon 2021). Take Justice Jackson’s famous line from his opinion in West Virginia Board of Education v. Barnette: “The very purpose of the Bill of Rights was to withdraw certain subjects from the vicissitudes of political controversy, to place them beyond the reach of majorities” (West Virginia Board of Education v. Barnette 1943, 638). Much more recently, Justice Sotomayor voiced a similar view, but in even stronger terms, in an interview on The Daily Show. When asked by Jon Stewart whether she or the Supreme Court considers public opinion when deciding cases, Sotomayor answered, “No, I don’t think there’s a judge on my Court and a judge that I’ve met, actually, who responds to public opinion.”[1] These sentiments are supported by the fact that public opinion is rarely mentioned in Supreme Court opinions (Marshall 1989).

Despite these strong institutional protections for judicial independence, many studies in political science have argued that the Supreme Court is in fact influenced by public opinion (Mishler and Sheehan 1993; Flemming and Wood 1997; McGuire and Stimson 2004; Giles, Blackstone, and Vining 2008; Epstein and Martin 2010; Casillas, Enns, and Wohlfarth 2011; Hall 2014; Bryan and Kromphardt 2016; Hall 2018; Black, Owens, Wedeking, and Wohlfarth 2020). Given the discussion above about judicial independence and structural separation, this consistent finding in the literature is striking. How can this be?

Scholars have offered three main theories to try to explain this apparent relationship between public preference and Court outputs. First is the Dahlian, or “Regime,” theory of judicial responsiveness, which holds that each new Supreme Court justice is a creature of the dominant governing coalition that appointed her, because justices are nominated, vetted, and confirmed by the coalition in power (Dahl 1957). On this view, the fact that justices are nominated and confirmed by elected officeholders is sufficient to moor the judiciary to the public will.

The second theory is commonly known as “drift,” which holds that justices’ preferences change over time. The basic idea here is that as time passes fashions evolve and people change, and because justices are people, they may well move along with the general public (Giles, Blackstone, and Vining 2008). Note that both of these theories concern indirect responsiveness, where the relationship between public preferences and the Court’s outputs is incidental, as opposed to intentional.

The third theory, in contrast, concerns direct responsiveness, wherein the justices are thought to be consciously considering and responding to the will of the people. This theory is known as “strategic institutional maintenance,” and it holds that justices respond to the public to protect the Court’s institutional power and legitimacy (Casillas, Enns, and Wohlfarth 2011; Hall 2014). The basic idea is that if the Court bucks the public too often or too much, the public will lose confidence in the Court. This would weaken the Court’s ability to act effectively in the American political system, as the Court relies on its public legitimacy for the efficacy of its decisions (Hall 2014; Strother 2019). That is, outside actors are less apt to comply with decisions handed down by an unpopular Court (Hall 2011).

Even among those who contend that the Court directly responds to the public, however, there is much disagreement as to how and why it does so (Epstein and Martin 2010; Johnson and Strother 2021). The relationship has typically been found to be small (e.g., Stimson et al. 1995), except when it is large (McGuire and Stimson 2004); contemporaneous (Collins and Cooper 2016), except when it requires a significant time lag (Mishler and Sheehan 1993); and present in all issue areas (Bryan and Kromphardt 2016), unless it exists in only a few (Link 2015). There is considerable agreement that case salience is a key mediating variable, but wide disagreement over how salience conditions the relationship between public opinion and Court outputs. For example, Casillas, Enns, and Wohlfarth (2011) find that the Court is responsive to the public in nonsalient cases, but not in salient cases, while Hall (2014) and Bryan and Kromphardt (2016) find that the Court is responsive to the public in salient cases but not in nonsalient ones. To further complicate matters, Collins and Cooper (2016) argue that the Court responds to the public in both very high and very low salience cases, but not in cases in the midrange of salience. These things, of course, cannot all be true.

Our goal here is to clear up these assorted disagreements and confusions in the literature. To do so, we go back to the fundamentals of empirical best practice. We look closely at the data and walk step by step through the analytic process. In doing so, we aim to cut through the noise and do justice to this fundamentally important question in American politics.

Research Approach

The wide array of divergent empirical findings in the direct responsiveness literature is especially puzzling given that studies rely on the same data and measures to capture key concepts. This state of affairs indicates that the contrasting findings are most likely attributable to modeling choices; that is, to different functional forms and different sets of control variables. And to be sure, the cutting edge in the literature is typified by complex models with many right-hand-side variables and, frequently, interaction terms. Put more simply, most of the models in this literature are extremely complex—but the complexity is not always well justified, and the possible problems these complexities can create are not often carefully checked. Sophisticated though they may be, these models point us in different directions rather than illuminate a clear path forward.

We believe if the Supreme Court responds to public opinion, then evidence of that relationship should appear in the simplest of models—in the cross-tabs, so to speak. That is, if there is a real relationship between public opinion and Supreme Court outputs, it should be evident before we begin to add variables to the right-hand side of the equation (Achen 2005; Achen 2002; Lenz and Sahn 2020). For these reasons, we will seek evidence of this crucially important theoretical relationship in parsimonious models, beginning with minimal specifications, and working outward from there. This approach will allow us to cut through the noise—that is, the array of divergent findings in the existing literature—and build a solid base for empirical generalization.

Many researchers wrongly assume that adding covariates to a statistical model of observational data poses no harm. In reality, however, adding covariates can introduce a variety of biases, including amplification bias, posttreatment bias, and collider bias, among other ills (Montgomery, Nyhan, and Torres 2018; Lenz and Sahn 2020).[2] To address these potential problems, and to advance the cause of transparent science, Lenz and Sahn (2020) argue that researchers should present the minimal specification of their models.[3] And in cases where statistical significance relies on the inclusion of covariates, researchers should very clearly justify each covariate’s inclusion and assess the robustness of the relationship against an array of model specifications.
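To make the collider problem concrete, here is a minimal simulation sketch. Everything in it is hypothetical: the variables `x`, `y`, and `collider` are simulated rather than drawn from the data used in this chapter, and the helper functions are written only for illustration. By construction `x` and `y` are unrelated, but both feed into `collider`, so "controlling" for it manufactures a relationship that is not there.

```python
import random

def mean(values):
    return sum(values) / len(values)

def ols_slope(x, y):
    """Slope from a bivariate least-squares regression of y on x."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx

def residuals(outcome, predictor):
    """Residuals of `outcome` after regressing it on `predictor` (with intercept)."""
    slope = ols_slope(predictor, outcome)
    intercept = mean(outcome) - slope * mean(predictor)
    return [o - (intercept + slope * p) for p, o in zip(predictor, outcome)]

rng = random.Random(42)  # fixed seed so the sketch is reproducible
n = 5000
x = [rng.gauss(0, 1) for _ in range(n)]
y = [rng.gauss(0, 1) for _ in range(n)]  # independent of x by construction
# The collider is caused by both x and y (plus noise).
collider = [a + b + rng.gauss(0, 1) for a, b in zip(x, y)]

# Minimal specification: y on x alone.
slope_minimal = ols_slope(x, y)

# "Controlled" specification: partial the collider out of both x and y, then
# regress residual on residual. This equals the coefficient on x in a
# regression of y on x and collider (Frisch-Waugh-Lovell theorem).
slope_controlled = ols_slope(residuals(x, collider), residuals(y, collider))

print(f"minimal specification:    {slope_minimal:.3f}")
print(f"controlling for collider: {slope_controlled:.3f}")
```

Under these assumptions the minimal slope should sit near zero, while the slope that conditions on the collider is clearly negative (about -0.5 in expectation). This is one reason to report the minimal specification before adding anything to the right-hand side.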

To summarize, best practices in empirical examination of observational data require us (1) to begin with and to report minimally specified models—that is, models only capturing the core necessary variables of interest—(2) to use only theoretically sound variables; and (3) to examine and validate any findings in all relevant subsets of the data. Scholars of judicial politics should take these fundamental points of empirical practice seriously. For this reason, we take a step back from the cutting edge of research on this topic—that is, from the tendency to assume that the presumed relationship between public opinion and the Supreme Court requires “sophisticated” models with many (many) covariates to be observed—and instead return to basics: Are there meaningful correlations between public mood and the Supreme Court’s outputs? And by the same token, we will add covariates piecemeal, and only when doing so is clearly justified on theoretical grounds, and we will assess the robustness of our findings across a range of measures.

To be clear, the following analyses cannot possibly prove (or disprove) a causal relationship between public opinion and judicial outputs. This is because we have only observational data, and there is no institutional variation or differential “treatment” to exploit that would allow us to do sound causal inference. Instead, we are addressing a more conservative question—but one that actually fits the data: do Supreme Court outputs correlate with public mood? This is a more conservative question in the sense that many relationships (even important ones) are not causal. Therefore, if we find strong evidence of a correlation between public opinion and Supreme Court decisions, that would establish a meaningful correlation, though not necessarily a causal relationship. If, on the other hand, we do not find good evidence of a correlation, then it is much harder, if not impossible, to sustain a theory of a causal relationship between public preferences and judicial decisions. Answering this question—and doing so well—is important, then, because doing so will provide a solid foundation for further work on this important topic.

Analysis

We address this question using the same data and basic approaches that we find throughout the literature. Like all studies in this literature, we draw on the Supreme Court Database to specify the dependent variable—case outcomes—with liberal outcomes coded as one (1) and conservative outcomes as zero (0).[4] To capture our key independent variable, public opinion, we use Stimson’s (1999) “public mood” measure (hereafter, “mood”). The mood measure aims to capture what Stimson calls “policy mood,” which is an estimate of the aggregate liberalism of the American public’s policy preferences derived from mass responses to thousands of survey questions. We begin with only these two variables to specify minimal models, as discussed above. Other theoretically justified variables will be considered and added below; we discuss these as we get to them.

Before we go any further, we discuss two possible empirical strategies. Researchers should make informed judgments about the data-generating process(es) underlying their data. In this case, we have to think about the nature of our two key variables: Supreme Court decisions and public mood. A key question we have to answer is whether we think our observations of these variables are independent over time. In other words, is every term (or case) at the Supreme Court largely independent of the term(s) before it? Is public opinion this month closely related to public opinion last month? And so on. If we think the observations on these variables are closely related to past observations of the same variable (we call this autocorrelation), then we need to adopt a “time-series” research strategy. On the other hand, if we think our observations are largely independent of one another, we would opt for a “cross-sectional” research design. In this body of research, scholars have been pretty divided as to the best approach, with numerous cross-sectional (e.g., Epstein and Martin 2010; Hall 2014) and time-series (e.g., Casillas, Enns, and Wohlfarth 2011; Collins and Cooper 2016) studies. Since our goals are to (1) show there is no good evidence that the Court follows public mood, and (2) to explain how earlier studies mistakenly found such a relationship, we’ll demonstrate both approaches in the following sections.
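One simple way to check whether observations depend on their own past is to compute the lag-1 autocorrelation of a series. The sketch below is illustrative only: the two series are made-up numbers (not Stimson's mood data or Supreme Court Database values), and `lag1_autocorrelation` is a hypothetical helper name.

```python
def lag1_autocorrelation(series):
    """Sample lag-1 autocorrelation: how strongly each value tracks the one before it."""
    n = len(series)
    m = sum(series) / n
    denominator = sum((v - m) ** 2 for v in series)
    numerator = sum((series[t] - m) * (series[t - 1] - m) for t in range(1, n))
    return numerator / denominator

# Hypothetical series that drifts slowly upward, so each value resembles the last.
trending = [0.55, 0.56, 0.58, 0.60, 0.61, 0.63, 0.64, 0.66, 0.67, 0.69]

# The same ten values in a different order: the time ordering, not the
# values themselves, is what produces autocorrelation.
reordered = [0.55, 0.69, 0.56, 0.67, 0.58, 0.66, 0.60, 0.64, 0.61, 0.63]

r_trending = lag1_autocorrelation(trending)
r_reordered = lag1_autocorrelation(reordered)

print(f"trending:  {r_trending:.2f}")
print(f"reordered: {r_reordered:.2f}")
```

A strongly positive value, as in the first series, signals that treating observations as independent draws would be a mistake and that a time-series design deserves consideration.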

Cross-Sectional Approach

We start with the cross-sectional approach. Doing so raises another question, though—what is the appropriate unit of analysis? That is, what are the units we should be studying? In this research application, the units could be individual justices’ votes in cases, Supreme Court decisions, or even the term-level aggregate liberalism (i.e., the proportion of the cases in a given term decided in the liberal direction). And again, studies abound at all of these units of analysis. For that reason, we will demonstrate the application of the best practices at both the term level and the decision level.

We will move stepwise through the analysis, following best practices. If we find evidence of a relationship we will rigorously interrogate it by seeing whether it exists in all subsets of the data (to establish that it is not an artifact), and then by seeing whether it persists when we begin to add control variables to the right-hand side of the model. If the relationship persists in all the subsets and in the presence of theoretically justified control variables, only then will we say that the relationship is “robust”—and therefore that it should be believed to be true.

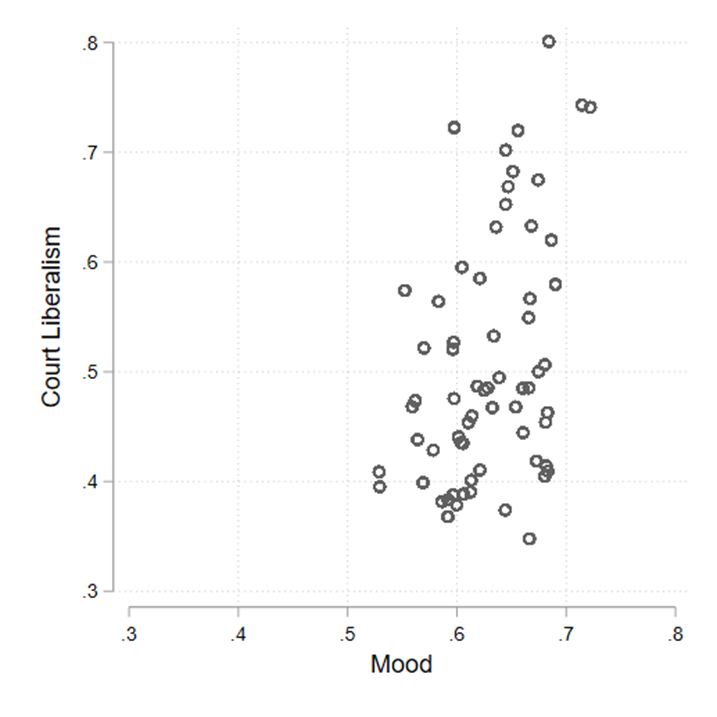

We begin our analysis (as all data analysis should begin) by visually inspecting our data. In figure 1, we scatter the proportion of the Court’s decisions in a given term that was decided in the liberal direction (“Court Liberalism”) against public liberalism (“Mood”), with both variables on the same scale. This simple exercise tells us something important: public mood varies extraordinarily little (from a low of 0.528 to a high of 0.722) over our entire period of study, 1952–2018, while the liberal bent in the Court’s outputs varies quite a bit (low of 0.348, high of 0.801).

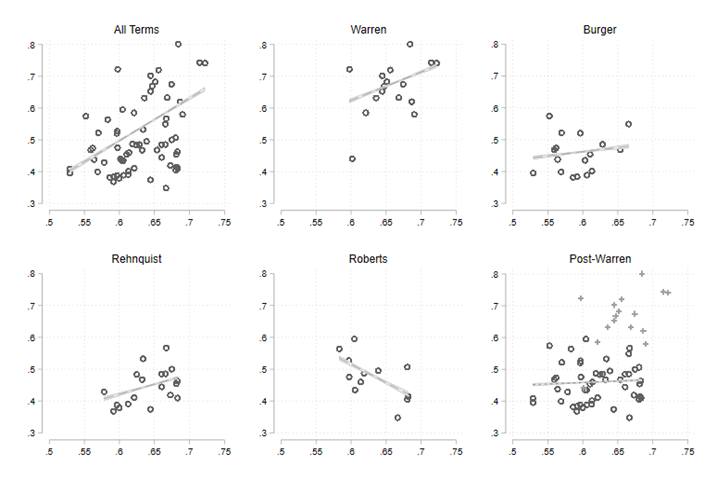

To begin the analysis in earnest, we present the cross-tabs, so to speak, of the term-level correlation that motivated this entire line of research. The subplot at the top left of figure 2 plots the share of cases decided in the liberal direction (as coded in the Supreme Court Database) each term against the general liberal mood for that year for the period from 1952 through 2018. This panel shows the strong relationship between public mood and Court output that drives previous studies. Indeed, in a linear probability model, a one percentage point increase in public liberalism correlates with an equal increase in the percentage of cases decided in the liberal direction, with a p-value of 0.001.

Finding a “significant” correlation in the data does not end the inquiry, however. We next seek to validate this empirical observation by examining the relationship within subsets of the data, as suggested by Achen (2002). The findings are presented in the remainder of figure 2. These plots present the term-level correlations within each Chief Justice era. If there is a relationship between the Court—as an institution—and public opinion, there should be a consistent relationship across Chief eras. However, the Chief-era plots give the lie to this expectation. The Warren Court shows a positive (but marginally insignificant, p = 0.073) relationship, the Rehnquist Court exhibits a weakly positive (and marginally significant, p = 0.041) effect, the Burger Court is essentially flat (p = 0.516), and Roberts shows a significant negative correlation (p = 0.034) between public mood and case outcomes, which is entirely antithetical to the strategic institutional maintenance perspective.

Finally, because our Chief-era analyses suggest the clearest relationship during the Warren Court years, we next aggregate all the post-Warren eras (i.e., 1968–2018) in order to see whether there has been any meaningful correlation between public preferences and Court outputs over the last fifty years. The subplot at the bottom right of figure 2 clearly shows that the appearance of a meaningful relationship between mass public opinion and Court output is a function of the Warren Court: the best fit line for the post-Warren period is essentially flat (p = 0.67). This indicates that the relationship apparent in the “All Terms” panel is not robust. It also suggests a good test for models built on institutional maintenance theory: do their results stand up in the post-Warren era?
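The logic of checking in the subsets can be sketched in a few lines. The rows below are invented numbers chosen to mimic the pattern described above (one era with a steep positive slope, a later era with a flat one). They are not the chapter's data, and the era labels and function names are hypothetical.

```python
def ols_slope(x, y):
    """Slope of the least-squares line of y on x."""
    mx = sum(x) / len(x)
    my = sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx

# Hypothetical (era, mood, court_liberalism) rows; NOT real data.
rows = [
    ("early", 0.60, 0.62), ("early", 0.62, 0.66), ("early", 0.64, 0.70),
    ("early", 0.66, 0.72), ("early", 0.68, 0.76), ("early", 0.70, 0.80),
    ("later", 0.54, 0.46), ("later", 0.56, 0.44), ("later", 0.58, 0.47),
    ("later", 0.60, 0.45), ("later", 0.62, 0.44), ("later", 0.64, 0.46),
]

def slope_for(rows, keep=lambda era: True):
    """Fit the bivariate slope using only rows whose era passes `keep`."""
    subset = [(mood, court) for era, mood, court in rows if keep(era)]
    moods, courts = zip(*subset)
    return ols_slope(moods, courts)

pooled_slope = slope_for(rows)
early_slope = slope_for(rows, keep=lambda era: era == "early")
later_slope = slope_for(rows, keep=lambda era: era == "later")

print(f"pooled: {pooled_slope:.2f}")
print(f"early:  {early_slope:.2f}")
print(f"later:  {later_slope:.2f}")
```

In this toy example the pooled slope is strongly positive even though the later era, taken alone, shows essentially no relationship. A pooled estimate can therefore be driven entirely by one subset, which is why the subset check comes before any claim of robustness.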

Several scholars have pointed out that term-level analyses are by their nature quite coarse, and necessarily ignore a myriad of potentially relevant case-level factors (e.g., Epstein and Martin 2010). Thus we turn now to a case-level (but still cross-sectional) analysis.

We estimate a series of linear probability models where the outcome is the direction of the Court’s decision (1 is liberal, 0 is conservative). The key independent variable is, as above, mood. Here we also add some case-specific information: salience. Salience is a latent quality of interest that concerns both how “important” a case is, and how much attention it receives, in some combination. Recall from above that most proponents of strategic institutional maintenance theory argue that salience is a key variable, even if they disagree about the nature of the relationship. The idea is that the salience of a case conditions how justices will act and, in particular, how likely they are to care what the public thinks with regard to that case. To capture salience, we use data from Clark, Lax, and Rice (2015), which measures media coverage of cases in three newspapers before the decision is rendered (see Strother 2019; Johnson and Strother 2021).

We estimate a series of models in order to ease the interpretation of the key relationship of interest. Here, each model takes the basic form:

[latex]\text{decisionDirection} \sim \beta_1 \text{Mood} + \beta_2 \text{MidSalience} + \beta_3 \text{LowSalience} + \beta_4 \text{Mood} \times \text{MidSalience} + \beta_5 \text{Mood} \times \text{LowSalience} + \alpha + \epsilon[/latex]

In each model, we are interested in the coefficient β1. In the example model just above, β1 is the total effect of mood in high salience cases.[5] To capture the total effect for midsalience cases, we replace MidSalience with HighSalience in the model; for low-salience cases, we swap out LowSalience for HighSalience. We estimate these models for the full dataset, and then as above, for each Chief Justice era, and then for the post-Warren period.
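To see why β1 isolates the relationship for the omitted salience category, it helps to write out the slope on mood that the specification above implies. This assumes, as the text suggests, that MidSalience and LowSalience are 0/1 indicators and that high salience is the omitted category:

[latex]\frac{\partial\, \text{decisionDirection}}{\partial\, \text{Mood}} = \beta_1 + \beta_4 \text{MidSalience} + \beta_5 \text{LowSalience}[/latex]

The implied slope is therefore β1 in high-salience cases (both indicators equal zero), β1 + β4 in midsalience cases, and β1 + β5 in low-salience cases. Changing the omitted category does not change the fitted model. It re-expresses the same fit so that the coefficient on Mood, along with its standard error, reports the slope for the new baseline group directly.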

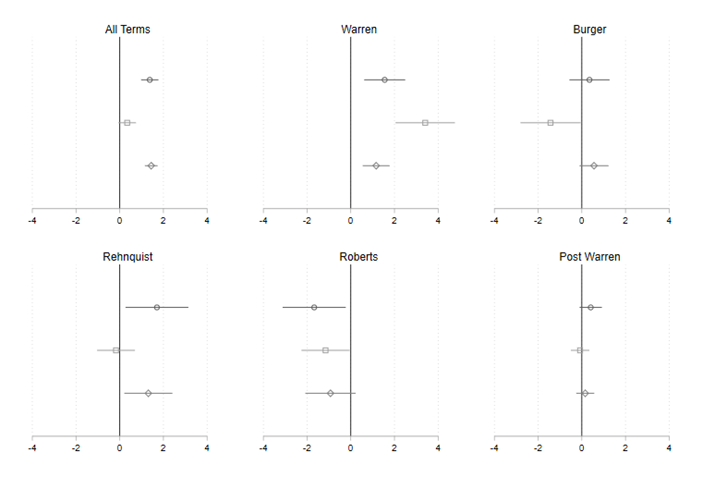

Figure 3 depicts the findings graphically (we only show the key variable of interest, mood, for the sake of presentation). These figures are coefficient plots, which plot the point estimate (the shape) and the 95 percent confidence interval (the bars) for each model. If the error bars do not cross zero (the thick vertical line) then we would say the relationship is “statistically significant” at the conventional p < 0.05 level. If the error bars do include the vertical zero-line, however, then we would say the relationship cannot be distinguished from zero (or that it is not statistically significant). The subplot at the top left again is consistent with the typical finding in the literature, with mood in high and low salience cases showing up as highly statistically significant. However, when we check in the subsets of the data, we see tremendous heterogeneity. The model for the Warren Court era shows across-the-board correlations, with the strongest relationship appearing in midsalience cases. In the Burger era, we find no evidence of correlation in high or low salience cases, but a significant negative correlation appears in cases of midsalience. The Rehnquist Court looks much more like what the strategic institutional maintenance theorists would suggest, though the relationship is only modestly significant. The Roberts era again bucks the trend, with significant negative correlations in high and midsalience cases, and a negative though marginally insignificant correlation in low salience cases. There is no theory of Supreme Court responsiveness that would purport to predict this odd array of findings. Strategic institutional maintenance theory, specifically, would predict a consistent pattern in every era over time. The data do not support this expectation. Finally, we again estimate the model considering the full post-Warren period (presented in the subplot at bottom right) and find tight nulls in all three levels of salience.[6]

Note: Point estimates with 95% confidence intervals. In all subplots, the circle (○) at top denotes Mood in high salience cases, the square (□) at center denotes Mood in medium salience cases, and the diamond (◊) at bottom denotes Mood in low salience cases.
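The rule for reading a coefficient plot (does the interval cross zero?) can be written out directly. The sketch below is a rough illustration under stated assumptions: the estimates and standard errors are invented and are not the values plotted in figure 3, the function names are hypothetical, and the interval uses a normal approximation, whereas regression software typically uses a t distribution.

```python
from statistics import NormalDist

def confidence_interval(estimate, std_error, level=0.95):
    """Two-sided confidence interval using a normal approximation (an assumption)."""
    z = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for a 95% interval
    return estimate - z * std_error, estimate + z * std_error

def excludes_zero(estimate, std_error, level=0.95):
    """True when the whole interval lies on one side of zero."""
    low, high = confidence_interval(estimate, std_error, level)
    return low > 0 or high < 0

# Hypothetical (point estimate, standard error) pairs for the mood coefficient.
hypothetical_mood_estimates = {
    "high salience": (0.90, 0.30),
    "mid salience": (0.20, 0.40),
    "low salience": (-0.70, 0.25),
}

significant = {
    label: excludes_zero(estimate, std_error)
    for label, (estimate, std_error) in hypothetical_mood_estimates.items()
}

for label, (estimate, std_error) in hypothetical_mood_estimates.items():
    low, high = confidence_interval(estimate, std_error)
    verdict = "distinguishable from zero" if significant[label] else "not distinguishable from zero"
    print(f"{label}: [{low:.2f}, {high:.2f}] -> {verdict}")
```

Note that an interval can exclude zero on the negative side as well, as in the hypothetical low-salience row. A "significant" coefficient with the wrong sign counts against a responsiveness theory rather than for it.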

In summary, we find no evidence to support the claim that the Supreme Court is responsive to public opinion in these cross-sectional analyses. Contrary to many existing studies, we find no robust correlation between public mood and the Court’s outputs, either at the term level (i.e., the proportion of decisions in a given term rendered in the liberal direction) or at the level of individual decisions. This finding remains when we consider the potential mediating variable, salience. It seems likely that prior studies have reached errant conclusions because they did not consider how the correlations they observed might be time bound. That is, those findings are almost certainly driven by the Warren Court and not reflective of a larger pattern in the data. Or to put it a little differently, there is no evidence that the “relationship” identified in the literature, and shown here in our “All Terms” analyses, is robust and meaningful.

Time-Series Approach

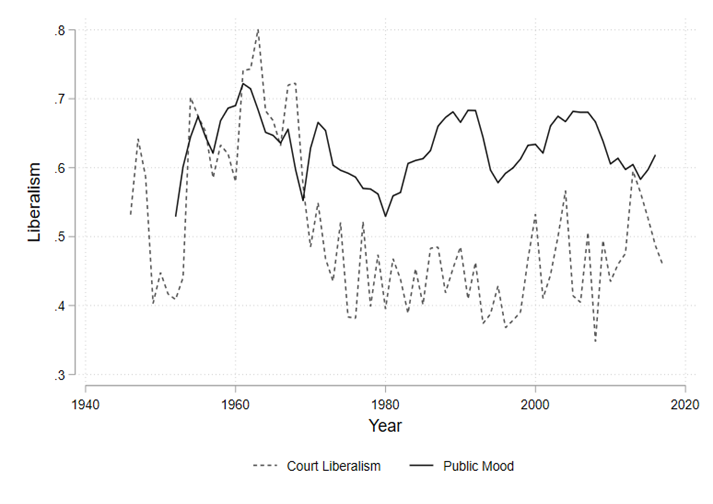

Of course, it is possible that this cross-sectional approach is not the best one to identify the relationship of interest. Many studies in this literature have taken a time-series approach (e.g., Mishler and Sheehan 1993; Casillas, Enns, and Wohlfarth 2011; Collins and Cooper 2016). Time-series approaches allow us to consider time trends in the relationship, the possibility that the Court responds after a lag (as opposed to contemporaneously), and the like. For these reasons, the next portion of our analysis takes time seriously. First, as above, we simply plot the data and visually inspect it. Figure 4 plots both public mood and Supreme Court liberalism against time. Again, we see that there is far more variation in Court outputs than in public liberalism—but the eyeball test suggests that the two may move somewhat in concert. And note the especially tight apparent correlation during the Warren Court (1954–68).

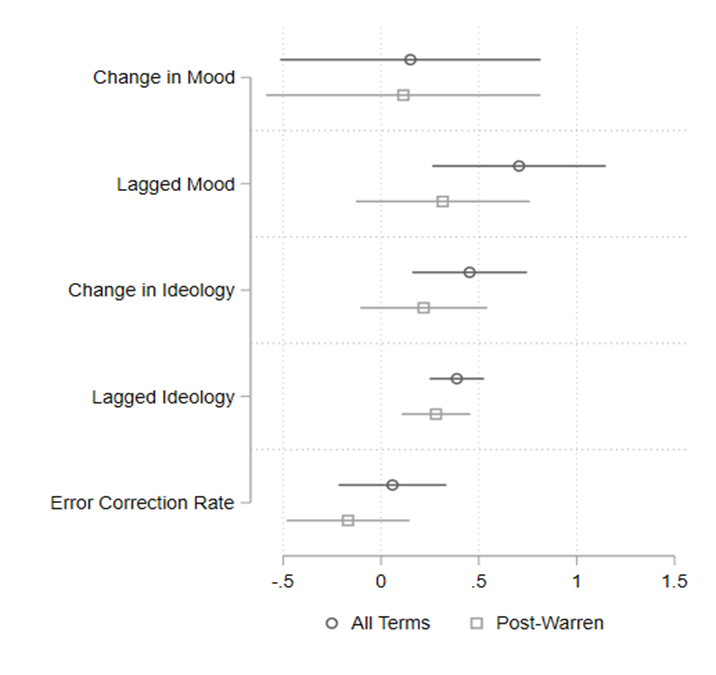

From here, we move on to more exacting tests than the eye can perform. We estimate a series of general error correction models, where the outcome is term-level Court liberalism, and the key independent variables capture public mood, both contemporaneous and lagged. Additionally, we control for Supreme Court ideology, as justices’ tendency to vote ideologically is among the most well-established findings in all of judicial politics (Segal and Spaeth 2002). In doing so, we are estimating what you can think of as the minimum theoretically justified model. We specify Supreme Court ideology in these models using the Segal-Cover score of the median justice on the Court for a given term (Segal and Cover 1989). Finally, the model includes the “error correction rate” (the lag of the dependent variable) on the right-hand side of the equation. The outputs of these models are presented graphically in figure 5. We first estimate the model for all the Court terms in our dataset. As the figure shows, when considering the full time range, we find that “lagged” (i.e., last year’s) public mood correlates significantly with Court liberalism. Building on our analyses above, though, we next consider whether this correlation holds when we exclude the Warren Court. The model for the post-Warren years provides no evidence of a correlation between mood and Court outputs. As such, these time-series analyses are consistent with the cross-sectional analysis presented above; there is nothing to see after the Warren era. We have found no solid evidence that the Court responds to public opinion.

Note: Point estimates with 95% confidence intervals.
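The estimating equation is not printed in the chapter, so the following is only a sketch of one common single-equation form of a general error correction model, written with the variables described above. The symbols are assumptions made for illustration: Y is term-level Court liberalism in term t, Ideology is the Segal-Cover score of the median justice, and Δ denotes the change from the previous term. The exact set of differenced and lagged terms used in the reported models may differ.

[latex]\Delta Y_t = \mu + \phi Y_{t-1} + \delta_0 \Delta \text{Mood}_t + \delta_1 \text{Mood}_{t-1} + \gamma_0 \Delta \text{Ideology}_t + \gamma_1 \text{Ideology}_{t-1} + \epsilon_t[/latex]

In a specification of this kind, φ plays the role of the error correction rate (the coefficient on the lagged dependent variable, typically expected to fall between -1 and 0), δ0 captures the contemporaneous association with mood, and δ1 captures the association with last year's mood.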

Conclusion

The Constitution creates strong protections for judicial independence. By design, tenure during good behavior, salary protection, and the appointments process all insulate the judiciary from public pressure. Given the structural protections guarding the Supreme Court, it would be surprising if the Court did, in fact, follow the public. An important question in judicial politics scholarship, then, is whether these constitutional protections are “working.” The answer to this question points us to which normative question we face: can the Court be democratically legitimate? If the Court follows the public, can it effectively protect minorities?

Many scholars contend that constitutional protections for judicial independence have not insulated the Court from the public. That is, they reject a strong account of judicial independence and contend that despite the Court’s structural protections, the justices hew to the public will to protect their institutional legitimacy. These theories have been supported by a large number of studies reporting significant correlations between the liberal tendencies of the public and Supreme Court decisions. The regularity of these findings has established something of a consensus that public opinion directly influences the Court’s work. If these scholars are right, the concern that the Court may not be able to protect the rights of unpopular minorities against majoritarian abuse is legitimated.

By going back to the basics—looking carefully at the data, checking in the subsets, assessing robustness, and so on—we show here that there is actually very little evidence that the Supreme Court, as an institution, responds to public preferences. That is, our empirical results show that there is no solid empirical basis for the common claim that the Court follows public opinion—at least over the last fifty years.[7]

Learning Activity

What’s Wrong with the Warren Court?

In the chapter, we argued that there is no good evidence that the Supreme Court, as an institution, responds to public opinion and that prior studies have erroneously concluded the opposite because of a correlation driven by the Warren Court (recall especially figure 2 and the associated discussion).

But this raises a new question: Was the Warren Court responsive to public opinion? It is possible, for one reason or another—perhaps the fact that Earl Warren himself was a prominent politician before joining the Court—that the Warren Court was an outlier that did, in fact, care about the will of the people.

Alternatively, it is possible that the Warren Court was simply a very liberal Court that happened to be serving when the public was also very liberal. That is, the liberalism of the Court and that of the public may be correlated but not causally related.

Note that this is an important empirical question. For this activity, your job is to try to answer that question: Was the Warren Court responsive to the public?

To answer the questions below, you first need to download R and RStudio. R is a powerful open-source program for statistics and data analysis. RStudio is free software that provides a nice-looking console for working in R. For information on installing R and RStudio in Windows or in Mac OS X see one of the following links:

- https://www.andrewheiss.com/blog/2012/04/17/install-r-rstudio-r-commander-windows-osx/

- https://owi.usgs.gov/R/training-curriculum/installr/

- https://cran.r-project.org/doc/manuals/r-release/R-admin.html

Once you have R and RStudio installed on your computer, download and open the .R file (“Warren_PublicOpinion.R”) and the data file (“Warren_PublicOpinion.csv”) below. Note that you will need to save the .R file and the data file in the same folder on your computer. Next, set the working directory of your RStudio session to the folder where you saved the .R file and data file. To do so, open RStudio, click “session” on the menu near the top of the screen, scroll down to “set working directory,” and click “choose directory” (i.e., Session > Set Working Directory > Choose Directory).

Once the working directory is set, you can click and highlight sections of the code in the scripting window and click the “run” button in the top left section of the window. There are notes in the code that will guide you through the exercise.

Questions

- Do you think the Warren Court was responsive to public opinion? Why or why not?

- Do you observe a constant relationship between opinion and outputs during the Warren years?

- You identified an “outlier” year in the data. What do you think caused that one year to be so different from all the other observations in the Warren era?

References

Achen, Christopher H. 2002. “Toward a New Political Methodology: Microfoundations and ART.” Annual Review of Political Science 5:423–50.

———. 2005. “Let’s Put Garbage-Can Regressions and Garbage-Can Probits Where They Belong.” Conflict Management and Peace Science 22 (4): 327–39.

Bickel, Alexander M. 1962. The Least Dangerous Branch: The Supreme Court at the Bar of Politics. New Haven, CT: Yale University Press.

Black, Ryan C., Ryan J. Owens, Justin Wedeking, and Patrick C. Wohlfarth. 2020. The Conscientious Justice. Cambridge: Cambridge University Press.

Breyer, Stephen G. 1995. “Judicial Independence in the United States.” St. Louis University Law Journal 40:989.

Bryan, Amanda C., and Christopher D. Kromphardt. 2016. “Public Opinion, Public Support, and Counter-Attitudinal Voting on the US Supreme Court.” Justice System Journal 37 (4): 298–317.

Casillas, Christopher, Peter K. Enns, and Patrick C. Wohlfarth. 2011. “How Public Opinion Constrains the US Supreme Court.” American Journal of Political Science 55 (1): 74–88.

Clark, Tom S., Jeffrey R. Lax, and Douglas Rice. 2015. “Measuring the Political Salience of Supreme Court Cases.” Journal of Law and Courts 3 (1): 37–65.

Clarke, Kevin A. 2005. “The Phantom Menace: Omitted Variable Bias in Econometric Research.” Conflict Management and Peace Science 22 (4): 341–52.

Collins, Todd A., and Christopher A. Cooper. 2016. “The Case Salience Index, Public Opinion, and Decision Making on the US Supreme Court.” Justice System Journal 37 (3): 232–45.

Dahl, Robert A. 1957. “Decision-Making in a Democracy: The Supreme Court as a National Policy-Maker.” Journal of Public Law 6:279–95.

Epstein, Lee, and Andrew D. Martin. 2010. “Does Public Opinion Influence the Supreme Court? Possibly Yes (but We’re Not Sure Why).” University of Pennsylvania Journal of Constitutional Law 13 (2): 263–81.

Epstein, Lee, and Jeffrey A. Segal. 2000. “Measuring Issue Salience.” American Journal of Political Science 44 (1): 66–83.

Flemming, Roy B., and B. Dan Wood. 1997. “The Public and the Supreme Court: Individual Justice Responsiveness to American Policy Moods.” American Journal of Political Science 41 (2): 468–98.

Giles, Michael W., Bethany Blackstone, and Richard L. Vining. 2008. “The Supreme Court in American Democracy: Unraveling the Linkages between Public Opinion and Judicial Decision Making.” Journal of Politics 70 (2): 293–306.

Glennon, Colin, and Logan Strother. 2019. “The Maintenance of Institutional Legitimacy in Supreme Court Justices’ Public Rhetoric.” Journal of Law and Courts 7 (2): 241–61.

Hall, Matthew E. K. 2011. The Nature of Supreme Court Power. Cambridge: Cambridge University Press.

———. 2014. “The Semiconstrained Court: Public Opinion, the Separation of Powers, and the U.S. Supreme Court’s Fear of Nonimplementation.” American Journal of Political Science 58 (2): 352–66.

———. 2018. What Justices Want: Goals and Personality on the U.S. Supreme Court. Cambridge: Cambridge University Press.

Hamilton, Alexander. 1788. The Federalist, no. 78.

Johnson, Ben, and Logan Strother. 2021. “The Supreme Court’s (Surprising?) Indifference to Public Opinion.” Political Research Quarterly 74 (1): 18–34.

Keck, Thomas M. 2014. Judicial Politics in Polarized Times. Chicago: University of Chicago Press.

Lee, Frances E. 2015. “How Party Polarization Affects Governance.” Annual Review of Political Science 18:261–82.

Lenz, Gabriel, and Alexander Sahn. 2020. “Achieving Statistical Significance with Control Variables and without Transparency.” Political Analysis, November 16, 2020. https://doi.org/10.1017/pan.2020.31.

Marshall, Thomas R. 1989. Public Opinion and the Supreme Court. UK: Unwin-Hyman.

McGuire, Kevin T., and James A. Stimson. 2004. “The Least Dangerous Branch Revisited: New Evidence on Supreme Court Responsiveness to Public Preferences.” Journal of Politics 66 (4): 1018–35.

Mishler, William, and Reginald S. Sheehan. 1993. “The Supreme Court as a Countermajoritarian Institution? The Impact of Public Opinion on Supreme Court Decisions.” American Political Science Review 87 (1): 87–101.

———. 1996. “Public Opinion, the Attitudinal Model, and Supreme Court Decision Making: A Micro-Analytic Perspective.” Journal of Politics 59 (4): 1114–42.

Montgomery, Jacob M., Brendan Nyhan, and Michelle Torres. 2018. “How Conditioning on Posttreatment Variables Can Ruin Your Experiment and What to Do About It.” American Journal of Political Science 62 (3): 760–75.

Segal, Jeffrey A., and Albert D. Cover. 1989. “Ideological Values and the Votes of US Supreme Court Justices.” American Political Science Review 83 (2): 557–65.

Segal, Jeffrey A., and Harold J. Spaeth. 2002. The Supreme Court and the Attitudinal Model Revisited. Cambridge: Cambridge University Press.

Spaeth, Harold J., Lee Epstein, et al. 2019. Supreme Court Database, Version 2019 Release 1. http://Supremecourtdatabase.org.

Stimson, James A. 1999. Public Opinion in America: Moods, Cycles, and Swings. Boulder, CO: Westview.

Stimson, James A., Michael B. MacKuen, and Robert S. Erikson. 1995. “Dynamic Representation.” American Political Science Review 89 (3): 543–65.

Strother, Logan. 2017. “How Expected Political and Legal Impact Drive Media Coverage of Supreme Court Cases.” Political Communication 34 (3): 571–89.

———. 2019. “Case Salience and the Influence of External Constraints on the Supreme Court.” Journal of Law and Courts 7 (1): 129–47.

Strother, Logan, and Colin Glennon. 2021. “An Experimental Investigation of the Effect of Supreme Court Justices’ Public Rhetoric on Judicial Legitimacy.” Law & Social Inquiry 46 (2): 435–54.

West Virginia State Board of Education v Barnette 319 US 624 (1943).

- Sonia Sotomayor, interview by Jon Stewart, The Daily Show, January 21, 2013, https://www.cc.com/video/a1f1ob/the-daily-show-with-jon-stewart-exclusive-sonia-sotomayor-extended-interview-pt-3.

- These assorted biases can cause you to draw faulty inferences from your analyses.

- Minimal specifications have to be balanced against concerns about omitted variable bias, that is, bias that arises when a variable left out of the model (and therefore absorbed into the error term) is correlated with both the dependent variable and one or more included independent variables. In practice, such bias arises when a determinant of the dependent variable is omitted from the model. To address this, we discuss what we call the minimal theoretically justified model in the sections below. See Clarke (2005) for discussion of how to deal with omitted variable bias in empirical practice.

- Note that this means that when we aggregate to the term level, as we do in some of the analyses below, higher values correspond to more liberal terms and lower values to more conservative terms.

- The α is a constant (or “intercept”) term and ε is the error term.

- In Johnson and Strother (2021) we show that this (non)pattern holds when we control for Supreme Court ideology and when we focus on the subset of the Court’s decisions reversing lower court decisions. Adding ideology to the model described above (i.e., inserting the term β6Ideology) yields what might be thought of as the “theoretical” minimal model: the minimally specified model that accounts for all theoretically relevant variables, since virtually all judicial politics scholars agree that justice ideology shapes Supreme Court decision-making. All the analyses we describe here hold when we account for this theoretically important variable.

- In other work, we show that this is true across three units of analysis, a variety of measures of public preferences and case salience, and among the subset of cases in which the Court reversed lower court decisions. Replications of prior studies further reinforce these conclusions. See Johnson and Strother (2021).