Section 2 – Where change is unlikely

Some things do not change. Consider the humble triangle. For every triangle that includes a right angle, the formula a^2 + b^2 = c^2 holds: in Euclidean geometry, it relates the lengths of the two shorter sides (a and b) to the length of the longest side, the hypotenuse (c). Unless there are dramatic changes in the Universe, triangles will continue to have three sides and three angles, and to operate according to some very simple rules.
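As a small illustration of how stable this rule is, it can be checked numerically. This sketch relies only on Python's standard `math.hypot`, which returns the length of the hypotenuse for two given side lengths; the function name `hypotenuse` is just an illustrative wrapper.

```python
import math

def hypotenuse(a: float, b: float) -> float:
    """Length of the side opposite the right angle: c = sqrt(a^2 + b^2)."""
    return math.hypot(a, b)

# The classic 3-4-5 right triangle.
c = hypotenuse(3, 4)
print(c)  # 5.0
assert math.isclose(3**2 + 4**2, c**2)  # a^2 + b^2 = c^2
```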

The items in this section provide a foundation for understanding the conditions of possibility for discussing things that can change, or will change. Some of these items may seem bizarre. They are included because the virtual horizon of media extends far ahead of us: this book is intended for classes that consider headsets, bodysuits, and brain-computer interfaces as seriously as, and likely more seriously than, they consider designing a newspaper front page for the Dodgers winning the World Series.

Each sub-point in this section will identify something that is unlikely to change, some important basic information about it, and the practical consideration of how that unchanging property impacts your world.

2.1 The Electro-Magnetic Spectrum

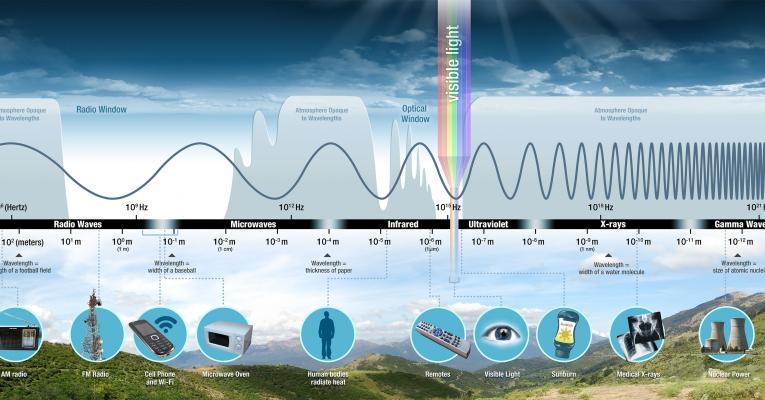

NASA (the National Aeronautics and Space Administration) provides this handy chart:

The spectrum extends all the way from the long waves of radio through the very short waves of gamma rays, like those detected in positron emission tomography (PET) imaging and produced by some of the most energetic events in the universe. When you screw a coaxial cable into the port on your television or modem, you are attaching a metal conductor that carries a radio-frequency signal.

All electromagnetic radiation is fundamentally similar. As NASA explains:

Electromagnetic radiation can be described in terms of a stream of mass-less particles, called photons, each traveling in a wave-like pattern at the speed of light. Each photon contains a certain amount of energy. The different types of radiation are defined by the amount of energy found in the photons. Radio waves have photons with low energies, microwave photons have a little more energy than radio waves, infrared photons have still more, then visible, ultraviolet, X-rays, and, the most energetic of all, gamma-rays.[1]
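The ordering in the quotation follows from two standard relations: wavelength equals the speed of light divided by frequency, and each photon's energy equals Planck's constant times its frequency. The sketch below applies both to a few representative frequencies; the example values are illustrative round numbers chosen here, not official band edges.

```python
# Physical constants in SI units (both are exact by definition of the SI).
PLANCK_H = 6.62607015e-34        # Planck constant, joule-seconds
SPEED_OF_LIGHT = 299_792_458.0   # meters per second

def wavelength_m(frequency_hz: float) -> float:
    """Wavelength from frequency: lambda = c / f."""
    return SPEED_OF_LIGHT / frequency_hz

def photon_energy_j(frequency_hz: float) -> float:
    """Energy carried by a single photon: E = h * f."""
    return PLANCK_H * frequency_hz

# Representative frequencies (illustrative round numbers, not official band edges).
EXAMPLES = {
    "FM radio": 100e6,
    "microwave oven": 2.45e9,
    "green light": 545e12,
    "X-ray": 3e18,
}

for name, f in EXAMPLES.items():
    print(f"{name:>14}: wavelength {wavelength_m(f):.2e} m, "
          f"photon energy {photon_energy_j(f):.2e} J")
```

Higher frequency means shorter waves and more energetic photons, which is exactly the progression from radio to gamma rays described above.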

Why should we care about this? Almost all of our contemporary image media and network-driven technologies depend on the spectrum. All optical media are spectrum-dependent: paint reflecting light and screens emitting it both rely on the same underlying physics of color.

Does that mean that your microwave is dangerous?

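No. Your microwave oven uses a Faraday cage to contain its electromagnetic energy. The same cages are useful for computer security: a Faraday bag can cut a suspicious electronic device off from outside signals, and Faraday cages protect electrical equipment.[2] What is the magic here? A web of metallic bars or strands whose gaps are much smaller than the wavelength being blocked. The holes in a microwave oven's door are a few millimeters across, while the microwaves inside are about 12 centimeters long.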

This will not change in your lifetime. The signals that all of these systems depend on will still sit somewhere on the spectrum.

2.1.1 Scarcity

Consider a car radio. As you drive away from a city, the signal from your favorite vaporwave station becomes weaker and weaker until you can no longer hear it at all. Of course, such a hip station would use the FM method of encoding, as it allows greater fidelity to the original (it sounds better). At that point, you might switch to an AM station from the same city that carries broadcasts of stand-up comedy. As you approach the next city, you may find that the same frequencies on which you enjoyed vaporwave and jokes now carry jazz and sports. In any given place, two transmissions cannot share the same frequency: their waves interfere with each other, and a receiver cannot cleanly separate one from the other. This is a key property of waves.
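The difference between AM and FM encoding can be sketched in a few lines. In this minimal sketch, assuming a single test tone as the "program" and scaled-down frequencies chosen only for readability (real FM broadcast carriers sit near 100 MHz), AM changes the carrier's strength while FM changes its frequency and leaves its strength alone.

```python
import math

# Scaled-down, illustrative frequencies (assumptions, not broadcast values).
CARRIER_HZ = 1_000.0   # the station's carrier wave
MESSAGE_HZ = 50.0      # a single test tone standing in for the program audio


def message(t: float) -> float:
    """The program being broadcast: a pure tone between -1 and 1."""
    return math.cos(2 * math.pi * MESSAGE_HZ * t)


def am(t: float, depth: float = 0.5) -> float:
    """AM: the message raises and lowers the carrier's amplitude (strength)."""
    return (1 + depth * message(t)) * math.cos(2 * math.pi * CARRIER_HZ * t)


def fm(t: float, deviation_hz: float = 200.0) -> float:
    """FM: the message speeds up and slows down the carrier; amplitude stays at 1.

    For a cosine message, the accumulated phase shift is
    (deviation / message frequency) * sin(2*pi*f_m*t).
    """
    index = deviation_hz / MESSAGE_HZ  # the modulation index
    phase = 2 * math.pi * CARRIER_HZ * t + index * math.sin(2 * math.pi * MESSAGE_HZ * t)
    return math.cos(phase)


SAMPLE_RATE = 20_000
times = [n / SAMPLE_RATE for n in range(SAMPLE_RATE // 10)]  # 0.1 s of signal
am_peak = max(abs(am(t)) for t in times)
fm_peak = max(abs(fm(t)) for t in times)
print(f"AM peak amplitude: {am_peak:.2f}")  # rises and falls with the message
print(f"FM peak amplitude: {fm_peak:.2f}")  # never exceeds 1
```

Because the strength of an FM signal carries no information, a receiver can clip away changes in amplitude, which is where much static lives. That is one reason FM tends to sound cleaner.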

The principle of scarcity is so important that it is the basis of broadcasting law. In Red Lion Broadcasting Co. v. FCC (1969), the Supreme Court made clear that the scarcity of broadcast spectrum justified restrictions on the speech of radio broadcasters.[3] Deciding what would be carried on a rivalrous channel over the air was a matter of public concern. In Miami Herald Publishing Co. v. Tornillo (1974), the Supreme Court found the opposite in the case of newspapers: unlike radio stations, they are not rivalrous.[4] Economic, rather than electromagnetic, justifications for restriction run afoul of the First Amendment.

This is also the basis of policy discussion around net neutrality. Although internet bandwidth may have a physical limit at the upper end of utilization, for most intents and purposes it is a non-rivalrous resource. Advocates of net neutrality contend that such an important electromagnetic service should be considered under the same legal regime as telephone service: as a common carrier. Opponents contend that operators of internet systems should be allowed to recoup additional funds from heavy users of the infrastructure, and that operators may have a First Amendment interest in editing the flow of information. More on this will appear in a later section, as policy and network-shaping technology are highly likely to change; what is unlikely to change is the physical scarcity of bandwidth at its upper limit.

At this university, this course comes after a course on the political economy and legal theory of new media. For those of you reading this without such a course, this 2×2 matrix can be very helpful:

| | Rivalrous | Non-Rivalrous |
|---|---|---|
| Excludable | Private Goods: single-use experiences or things, such as food. | Club Goods: traditional cable television, concerts. |
| Non-Excludable | Common Goods: goods that can be exhausted but are not easily controlled, such as open pasture land and oceans. | Public Goods: things that are not used up and are for everyone, such as ideas and air. |
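The matrix is really a lookup on two yes/no questions. The sketch below encodes it that way; the function name `classify_good` is a hypothetical helper for illustration, and the comments on each call repeat the examples from the matrix above.

```python
def classify_good(rivalrous: bool, excludable: bool) -> str:
    """Place a good in the 2x2 matrix using two yes/no questions.

    rivalrous: does one person's use leave less for others?
    excludable: can the provider keep non-payers from using it?
    """
    if rivalrous and excludable:
        return "private good"
    if excludable:
        return "club good"
    if rivalrous:
        return "common good"
    return "public good"

print(classify_good(rivalrous=True, excludable=True))    # food
print(classify_good(rivalrous=False, excludable=True))   # cable television
print(classify_good(rivalrous=True, excludable=False))   # open pasture land
print(classify_good(rivalrous=False, excludable=False))  # ideas
```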

2.1.2 Optics

Light sources emit many photons on many wavelengths in many directions. A lens can organize the light from a bright source. The Fresnel lens is a great example: its ridges bend the light passing through so that it travels in a single direction.[5] Light can also be focused with a conventional convex lens. The lens on your camera focuses the light that reaches it onto some kind of sensor or film. When you consider that light is electromagnetic energy, it makes sense why you should not take a picture of the sun. The sun's energy arrives spread out, and the camera (or your eye, for that matter) uses a lens to concentrate it onto a small area. All sensors in cameras, to be addressed later in this section, are energy collectors.
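How much does a lens concentrate sunlight? A rough, idealized estimate compares the area of the lens opening (the aperture) with the area of the small image of the sun it forms. This sketch assumes the sun spans about 0.53 degrees of sky and ignores lens losses, the atmosphere, and diffraction; the 50 mm f/2 lens is a hypothetical example.

```python
import math

# The sun spans roughly half a degree of sky (about 0.53 degrees).
SUN_ANGULAR_DIAMETER_RAD = math.radians(0.53)


def sun_image_diameter_mm(focal_length_mm: float) -> float:
    """Approximate diameter of the sun's image at a lens's focal plane (small-angle rule)."""
    return focal_length_mm * SUN_ANGULAR_DIAMETER_RAD


def concentration_factor(focal_length_mm: float, f_number: float) -> float:
    """How many times more concentrated the sun's image is than direct sunlight.

    Idealized: the ratio of the aperture's area to the area of the sun's image,
    ignoring lens losses, the atmosphere, and diffraction.
    """
    aperture_mm = focal_length_mm / f_number
    return (aperture_mm / sun_image_diameter_mm(focal_length_mm)) ** 2


# A hypothetical 50 mm lens opened to f/2.
print(f"{concentration_factor(50, 2):,.0f}x")
```

The focal length cancels out of the ratio, so in this idealized model the concentration depends only on the f-number: a lens at f/2 concentrates sunlight roughly 2,900 times, and wider apertures concentrate it even more.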

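Polarizing filters use very fine parallel lines (or aligned molecules) to pass light waves that vibrate in one orientation while blocking those that vibrate at right angles to it. This is useful because glare reflected off flat surfaces like water or roads is largely polarized, so a polarizing filter can dramatically reduce it. It is also why polarizing sunglasses may not allow you to see your phone, whose screen emits polarized light, and how passive 3D systems with clear glasses are able to separate the channels meant for each eye.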
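The standard optics rule for this, Malus's law (not stated in the original text), says that already-polarized light passing through a polarizer rotated by an angle θ keeps a fraction cos²θ of its intensity. A minimal sketch:

```python
import math

def transmitted_intensity(incoming: float, angle_deg: float) -> float:
    """Malus's law: I = I0 * cos^2(theta) for polarized light meeting a polarizer
    rotated by theta degrees relative to the light's orientation."""
    return incoming * math.cos(math.radians(angle_deg)) ** 2

for angle in (0, 45, 90):
    print(angle, round(transmitted_intensity(1.0, angle), 3))
```

At 90 degrees almost nothing gets through, which is why tilting your head while wearing polarized sunglasses can black out a screen that emits polarized light.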

Light can also be split into discrete wavelengths. A prism reveals the colors within white light, while a spectrometer measures which wavelengths are absorbed or reflected by a particular object. This has a number of implications for how we understand the design of cameras, displays, and paints.

There are two ways that we can understand primary colors: additive and subtractive. Most students in this course will be familiar with subtractive theories of color. These theories contend that from a small number of basic colors (primaries), all other colors can be produced. Typically, these are presented as red, yellow, and blue or, more technically, as cyan, magenta, and yellow. The basic offset printing process uses CMYK (where K is black).[6] Tiny dots of these inks are printed close together, and the eye perceives them as a field of color. Each ink absorbs some frequencies of light while reflecting others. This is why these primaries are called subtractive: as more inks are added, more light is absorbed, and the reflection profile of the material moves toward black.

Additive primaries exist in light itself: red, green, and blue. The absence of light is black, while all three primaries combined at full strength produce white. Each of these theories is meaningful and correct; they simply describe the optical properties of different media as they interact with light.
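The two systems mirror each other: each subtractive ink removes one additive primary (cyan absorbs red, magenta absorbs green, yellow absorbs blue). The sketch below is the naive textbook conversion between them, assuming color values scaled from 0 to 1; real print workflows use calibrated color profiles rather than this formula.

```python
def rgb_to_cmyk(r: float, g: float, b: float) -> tuple[float, float, float, float]:
    """Naive conversion from additive RGB (0..1) to subtractive CMYK (0..1).

    Each ink is the complement of one light primary. K (black) replaces the
    portion of darkness all three inks would share, saving ink.
    """
    c, m, y = 1 - r, 1 - g, 1 - b
    k = min(c, m, y)
    if k == 1:  # pure black: avoid dividing by zero
        return 0.0, 0.0, 0.0, 1.0
    return (c - k) / (1 - k), (m - k) / (1 - k), (y - k) / (1 - k), k

print(rgb_to_cmyk(1.0, 0.0, 0.0))  # red light: magenta + yellow ink
print(rgb_to_cmyk(0.0, 1.0, 0.0))  # green light: cyan + yellow ink
print(rgb_to_cmyk(1.0, 1.0, 1.0))  # white: no ink at all
```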

2.2 World Systems

World systems theory sees the collection of countries, geographies, and cultures as a system that evolves over time. This approach is helpful for our analysis because it treats these large institutions as parts of a continuously evolving system, rather than as historically specific units.[7] Studying the future of media in this state system calls for evaluating the core-periphery distinction, the flow of materials between countries, and the shifting frameworks for interpreting events and flows.

The inclusion of world systems here is not intended as a conservative note, but as one that calls for rigor. Some of the weakest predictions to come out of popular media studies in recent years are those that underestimate the staying power of the state as a form of human organization. We make states because they are useful for doing things that we want with people who may have common cause with us. Some of the hardest-won lessons of the last few years have been that new media technologies do not "laugh" at powerful institutions, as some authors in the 1990s supposed they would, but are often twisted to support the status quo.[8]

It is also important not to limit our consideration here to the state: large corporations, transnational activist groups, publics and counter-publics, trans-state organizations, militaries, and terrorist groups, to name just a few, are also actors in this system. They are not going away. Facebook will not replace the government. Trade wars, a rising threat at the time of this writing, demonstrate both the power of the state over the economy and technology and the power of technology and the economy to shape the state. Government should always be part of your consideration of the future.

2.2.1 Geography

There will continue to be scarce resources and geographic barriers to the movement of people and things.

At the time of this writing, one element that may appear unlikely to change is the distribution of rare earths, such as neodymium, which is critical for building magnets. It is important to note that rare earths are not actually rare; they are seen as rare because of the negative externalities of mining them and the sheer cost of sorting through huge amounts of soil.[9] The rare earths are the lanthanides (along with scandium and yttrium), which sit just above the exciting radioactive actinides on your handy periodic table. The technologies we build with these materials, like MOSFET transistors, are highly unlikely to change. How will we find the political will to secure our necessary supplies of these materials in a responsible way?

The ways that the configuration of space on Earth shapes possibility should not be understated. Many discourses organize the understanding of space and time on the basis of the physical layout of mountains, lakes, oceans, and many other physical forms. Geographic forms are interposed into other political discourses, which then become co-productive.[10] What does that mean? Mountains may not change, but the ways that we talk about manipulating mountains will.

2.2.1.1 Undersea Cables

Former Alaska Senator Ted Stevens is frequently lambasted for his remark that:

And again, the Internet is not something that you just dump something on. It’s not a big truck. It’s a series of tubes.[11]

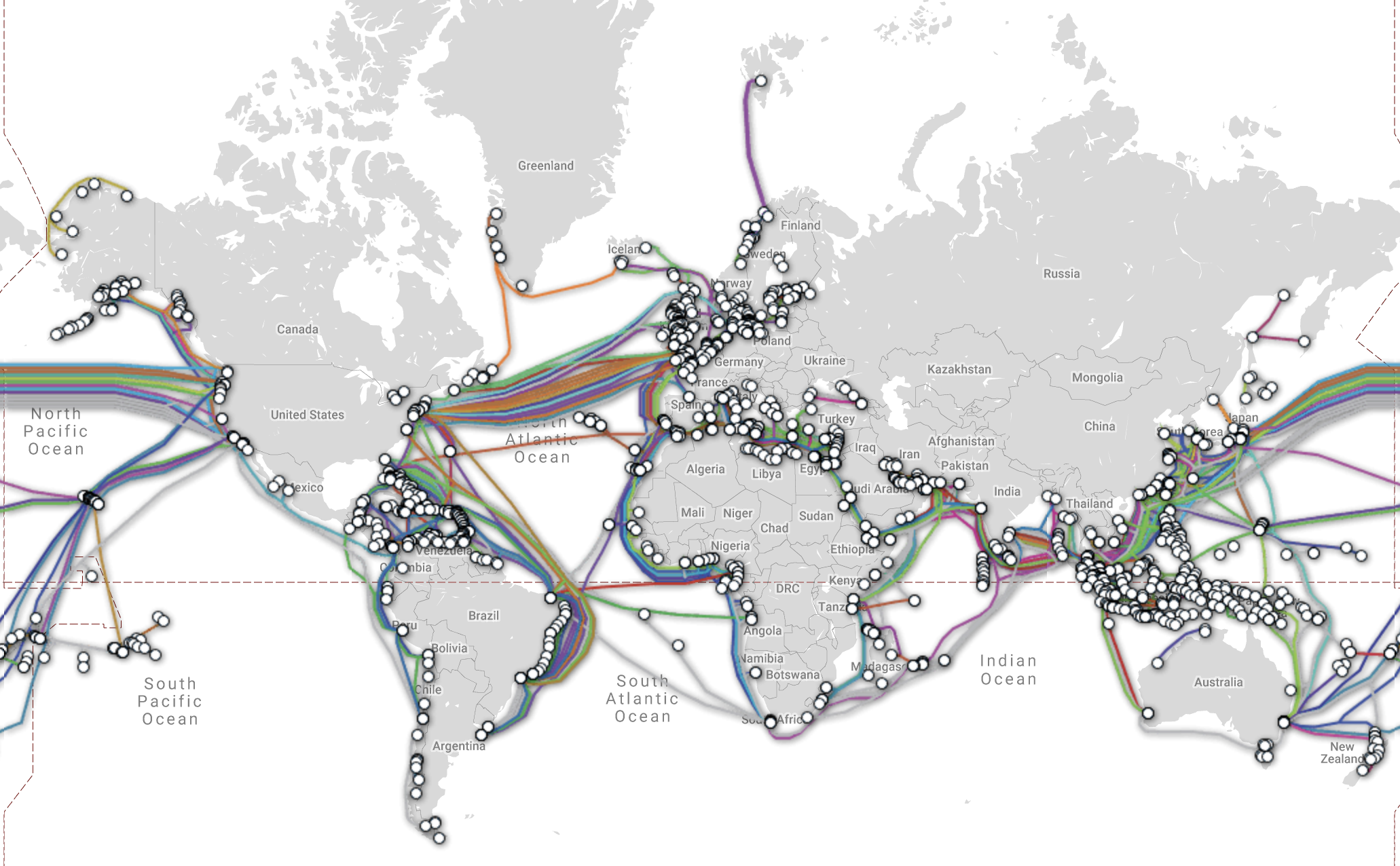

This was initially intended as an argument that the internet was rivalrous, but the deeper point is worth thinking about: the internet is a physical thing. Among the most important reminders of this is the undersea network of cables that make up the backbone of the internet.

In 2013, documents leaked by Edward Snowden to journalists revealed that the National Security Agency had tapped into the cable landings of other countries. Consider this map:

There are a limited number of good points where cables can be connected between countries, and sniffing (monitoring the traffic) at these key points would allow a state actor to see a great number of messages. With that information, it could see to its national defense or give its industries an advantage over those in other countries. From the other side, such points present key weaknesses. Cable-cutting submarines could sever these links in the first moments of a war. In other conflicts, military use of public telecommunications infrastructure has been a critical vulnerability.

2.2.1.2 Satellites

If physical linkages are not possible, one important way of linking distant areas involves routing the signal up and away from the surface of the planet. Satellites are already a key form of logistical media: they allow your navigation system to know where it is, and they relay television signals.

Compared with links over fiber optic or coaxial cables, satellite transmissions require more power, are slower, and are more expensive.[12] At one point Facebook developed high-altitude aircraft that would fly overhead and provide connectivity to the surface below.[13] Access to a nation's sovereign airspace is no small matter, and the risk that a distant service might displace the development of local infrastructure is quite real. At the end of the day, the local spatial fix that could be created through control of the telecommunication infrastructure outweighed the idea of a quick, cheap, foreign access point.
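Why are satellite links slower? Distance is a large part of the answer. The sketch below compares the minimum one-way delay through an undersea fiber with the minimum delay through a geostationary satellite, assuming a typical refractive index of about 1.47 for glass fiber and a hypothetical 6,000 km transatlantic cable route; real routes add switching and routing delays on top of these floors.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458
FIBER_REFRACTIVE_INDEX = 1.47   # typical for silica fiber (approximate)
GEO_ALTITUDE_KM = 35_786        # geostationary orbit altitude above the equator


def fiber_one_way_ms(route_km: float) -> float:
    """Minimum one-way delay through optical fiber (light slows to c / n in glass)."""
    return route_km / (SPEED_OF_LIGHT_KM_S / FIBER_REFRACTIVE_INDEX) * 1000


def geo_one_way_ms() -> float:
    """Minimum one-way delay via a geostationary satellite: up and back down,
    best case with both ground stations directly beneath the satellite."""
    return 2 * GEO_ALTITUDE_KM / SPEED_OF_LIGHT_KM_S * 1000


# A hypothetical transatlantic cable route of 6,000 km (illustrative length).
print(f"fiber: {fiber_one_way_ms(6_000):.1f} ms")
print(f"GEO satellite: {geo_one_way_ms():.1f} ms")
```

Under these assumptions the satellite hop takes roughly eight times as long as the cable. Satellites in low orbits fly much closer to the ground and have far shorter delays, so this comparison applies to the geostationary case.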

Beyond cable cutting, the first major acts of the next world war would likely include the use of anti-satellite weapons, whether ground-based systems or other satellites used as weapons. The world of ubiquitous satellite access is a result of the absence of major interstate conflict, not of technical necessity or inevitability.

2.2.1.3 Other Transmitters

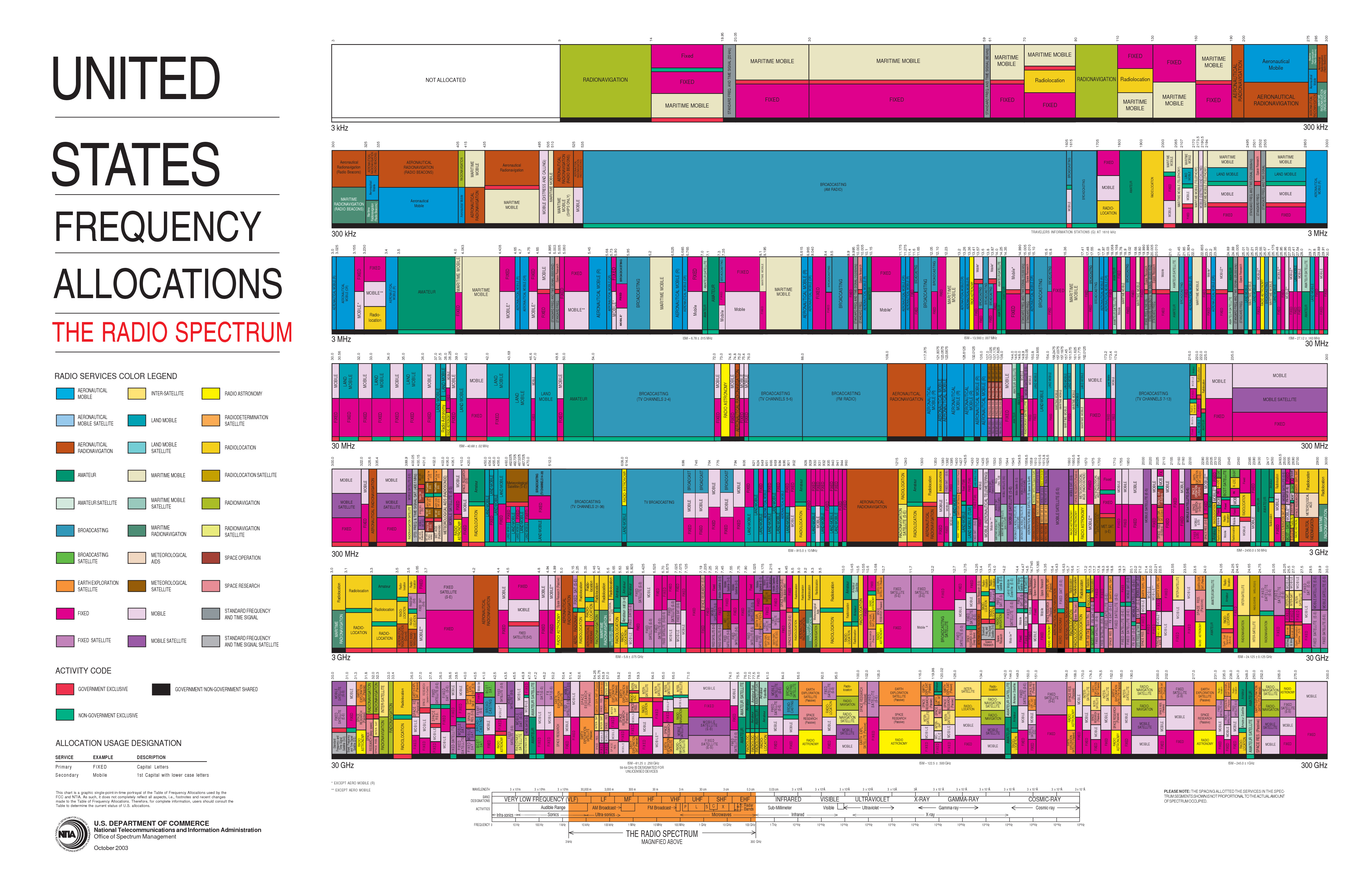

All linkages depend on some interaction with a transmitter. The spectrum is limited, with different bands assigned for different uses.

Radio transmitters and receivers, like those in your cell phone, give you a great deal of access to information. Transmitters will continue to be critical infrastructure. Any method that does not involve transmitters would require shifts in the laws of physics or biology that are well beyond this book, or the society in which people would read it.

2.3 Transistors

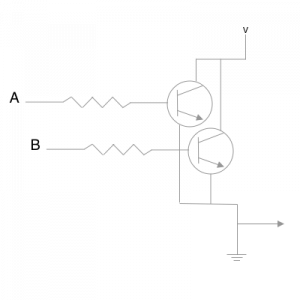

The digital revolution depends on the transistor – a semiconductor device that allows the solid-state encoding of logic gates (transistors can also be used as amplifiers). For the most part, the transistors we are interested in are field-effect transistors: a voltage on one terminal, the gate, creates an electric field that controls whether current can flow through a channel beneath it. Before we get into the discussion of operators, it is useful to think about the two kinds of transistors: P and N. To tune the electrical properties of the semiconductor used in a transistor, small amounts of specific materials can be added (a process called doping) that change how it responds.[14] In an N-type transistor, a high voltage on the gate turns the underlying flow on. In a P-type transistor, a high voltage on the gate turns the underlying flow off.

At first you might think that this is a fairly low-level innovation: these seem to be simple switches that express binary logic. What is powerful about these systems is that they can be produced in massive volumes at incredibly low prices. Replacing expensive vacuum tubes, transistors made it possible to build far more processing systems than before. Even better, transistor-based processors were so affordable that general-purpose processors could replace specially built electronics in many cases. Transistors make software possible.

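The design of logical operators is highly unlikely to change. All computationally legible information can be represented using two transistor states, written as 0 and 1. The behavior of a logic gate built from these states is described through a truth table, which lists the output for every combination of the inputs A and B.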
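Because each input can only be 0 or 1, a two-input truth table always has exactly four rows, and it can be generated mechanically. This sketch uses Python's bitwise operators to stand in for the gates; the `GATES` dictionary and `truth_table` helper are illustrative names, and the rows come out in the same order as the tables that follow.

```python
from itertools import product
from typing import Callable

Gate = Callable[[int, int], int]

# Each gate maps two input bits (0 or 1) to one output bit.
GATES: dict[str, Gate] = {
    "AND": lambda a, b: a & b,
    "OR": lambda a, b: a | b,
    "XOR": lambda a, b: a ^ b,
    "NAND": lambda a, b: 1 - (a & b),
    "NOR": lambda a, b: 1 - (a | b),
}

def truth_table(gate: Gate) -> list[tuple[int, int, int]]:
    """Every combination of inputs A and B, paired with the gate's output."""
    return [(a, b, gate(a, b)) for a, b in product((0, 1), repeat=2)]

for name, gate in GATES.items():
    print(name)
    for a, b, out in truth_table(gate):
        print(f"  A={a} B={b} -> {out}")
```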

The following are descriptions of key logic gates:

AND

| A | B | Output |
|---|---|---|
| 0 | 0 | 0 |
| 0 | 1 | 0 |
| 1 | 0 | 0 |
| 1 | 1 | 1 |

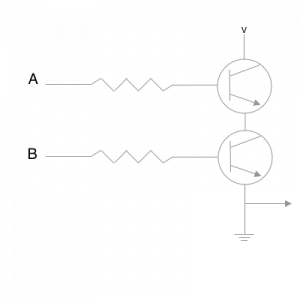

In terms of practical transistor design, an AND gate requires two switches arranged in series. Only if both switches are on will current flow.

*To read these circuit diagrams: the inbound voltages are v, A, and B. In these diagrams I treat every switch as nMOS, meaning that a switch is turned on when A or B is high, while the supply voltage v is always present.

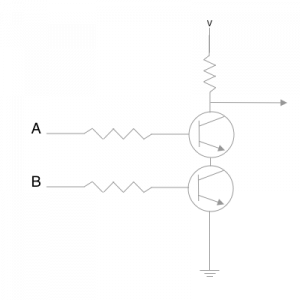

OR

| A | B | Output |
|---|---|---|
| 0 | 0 | 0 |
| 0 | 1 | 1 |
| 1 | 0 | 1 |
| 1 | 1 | 1 |

In terms of practical transistor design, the switches for an OR gate are arranged in parallel. If either switch is on, current will flow. The limitation is that the output alone does not tell us which input was on: A, B, or both could be.
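The series and parallel arrangements for AND and OR can be modeled as plain switches. In this minimal sketch, assuming an input of 1 closes its switch (as in the nMOS convention of the diagrams), series means every switch must be closed and parallel means any one is enough.

```python
def series(*switches: bool) -> bool:
    """Switches in a row: current flows only if every switch is closed."""
    return all(switches)

def parallel(*switches: bool) -> bool:
    """Switches side by side: current flows if any one switch is closed."""
    return any(switches)

def and_gate(a: int, b: int) -> int:
    # An input of 1 closes its switch.
    return int(series(a == 1, b == 1))

def or_gate(a: int, b: int) -> int:
    return int(parallel(a == 1, b == 1))

for a in (0, 1):
    for b in (0, 1):
        print(f"A={a} B={b}  AND={and_gate(a, b)}  OR={or_gate(a, b)}")

# The OR output alone cannot tell us which input was on:
print(or_gate(1, 0) == or_gate(0, 1) == or_gate(1, 1))  # True
```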

As you can see, the operator OR is not particularly revealing, which leads us to the exclusive-OR gate, XOR, whose output is 1 only when exactly one input is 1:

| A | B | Output |
|---|---|---|
| 0 | 0 | 0 |
| 0 | 1 | 1 |
| 1 | 0 | 1 |
| 1 | 1 | 0 |

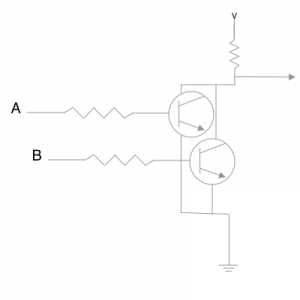

The principle of functional completeness holds that some gates are sufficient, on their own, to build all the others. Among two-input gates, two are functionally complete in this way: NAND and NOR. All other gates can be constructed using either one of them. NAND (NOT AND) outputs 0 only when both inputs are 1:

| A | B | Output |
|---|---|---|
| 0 | 0 | 1 |
| 0 | 1 | 1 |
| 1 | 0 | 1 |
| 1 | 1 | 0 |

The transistor setup for the NAND gate is quite straightforward: P-type transistors hold the output high by default, and only when both inputs are 1 is the output pulled low. One of the trickier ideas here: how would a NAND gate produce a NOT gate? If both inputs of the gate are wired to a single signal, a 1 on that signal produces a 0 output and a 0 produces a 1, so NAND(A, A) = NOT A. The two inputs of a gate do not necessarily need to come from different places.
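Functional completeness can be checked directly. The sketch below builds NOT, AND, OR, NOR, and XOR out of nothing but a `nand` function, then compares each against Python's own bitwise operators for every input. The OR construction uses De Morgan's law, and the four-NAND XOR is one standard construction among several.

```python
from itertools import product

def nand(a: int, b: int) -> int:
    return 1 - (a & b)

def not_(a: int) -> int:
    # Tie both inputs to the same signal: NAND(A, A) = NOT A.
    return nand(a, a)

def and_(a: int, b: int) -> int:
    # AND is NAND followed by NOT.
    return not_(nand(a, b))

def or_(a: int, b: int) -> int:
    # De Morgan's law: A OR B = NOT(NOT A AND NOT B) = NAND(NOT A, NOT B).
    return nand(not_(a), not_(b))

def nor(a: int, b: int) -> int:
    return not_(or_(a, b))

def xor(a: int, b: int) -> int:
    # A standard four-NAND construction.
    n = nand(a, b)
    return nand(nand(a, n), nand(b, n))

# Check every combination of inputs against Python's built-in operators.
for a, b in product((0, 1), repeat=2):
    assert not_(a) == 1 - a
    assert and_(a, b) == a & b
    assert or_(a, b) == a | b
    assert nor(a, b) == 1 - (a | b)
    assert xor(a, b) == a ^ b
print("NOT, AND, OR, NOR, and XOR all built from NAND alone")
```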

The complement is the NOR gate (NOT OR), which outputs 1 only when both inputs are 0:

| A | B | Output |
|---|---|---|
| 0 | 0 | 1 |
| 0 | 1 | 0 |
| 1 | 0 | 0 |
| 1 | 1 | 0 |

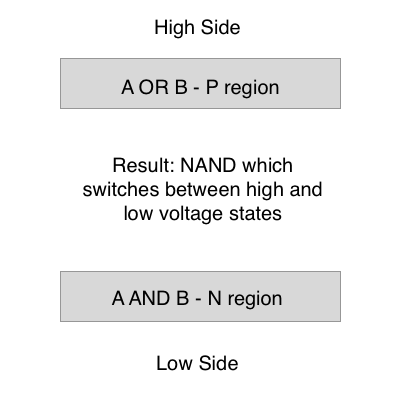

Notice the critical idea here: four transistors are combined into a single circuit that includes both a NAND and a NOR. Instead of encoding binary logic as on/off, the circuit encodes it as a high or low voltage. When both P-type transistors block the flow, creating the NAND, the path for the NOR is opened.
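The high/low idea can be modeled with the two transistor types from earlier in this section. This sketch follows the standard textbook CMOS arrangement, which may not match the exact figure: P-type transistors (on when their input is 0) form a "pull-up" path to the supply, N-type transistors (on when their input is 1) form a "pull-down" path to ground, and for every input exactly one path conducts.

```python
from itertools import product

def nmos_on(gate: int) -> bool:
    """An N-type transistor conducts when its gate input is high (1)."""
    return gate == 1

def pmos_on(gate: int) -> bool:
    """A P-type transistor conducts when its gate input is low (0)."""
    return gate == 0

def cmos_nand(a: int, b: int) -> int:
    # Pull-up: two P-type transistors in parallel connect the output to the supply.
    pull_up = pmos_on(a) or pmos_on(b)
    # Pull-down: two N-type transistors in series connect the output to ground.
    pull_down = nmos_on(a) and nmos_on(b)
    assert pull_up != pull_down, "exactly one path should conduct"
    return 1 if pull_up else 0

def cmos_nor(a: int, b: int) -> int:
    # The mirror image: P-type in series pull up, N-type in parallel pull down.
    pull_up = pmos_on(a) and pmos_on(b)
    pull_down = nmos_on(a) or nmos_on(b)
    assert pull_up != pull_down, "exactly one path should conduct"
    return 1 if pull_up else 0

for a, b in product((0, 1), repeat=2):
    print(a, b, "NAND:", cmos_nand(a, b), "NOR:", cmos_nor(a, b))
```

Because one path is always blocked, almost no current flows through the gate once it settles, which is a large part of why this design became the standard for low-power chips.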

Why is this such a powerful technology?

At first, reducing all information to binary might appear difficult and confusing. What is important to understand is that binary numbers rely on the same processing algorithms that you use every day. Consider binary addition: it relies on the same process that you use for adding any other numbers; the difference is that you carry the 1 whenever a column's sum is more than 1.

Notice that I am not required to carry until bit 3, where 1+1=0, carry the 1. In bit 6 we see the next step: 1+1=0, carry the 1, but we have already carried a 1 into that column, so the result there is 1 (and a 1 is carried again). Subtraction follows a similar process. At the level of the transistor, mathematical tasks become simple combinations of on and off.
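The carrying procedure maps directly onto logic gates. This sketch implements a full adder (XOR for the sum bit, AND and OR for the carry) and chains one per column, working right to left like pencil-and-paper addition; this chaining is known as a ripple-carry adder. The function names are illustrative.

```python
def full_adder(a: int, b: int, carry_in: int) -> tuple[int, int]:
    """Add three bits using only gate operations; return (sum_bit, carry_out)."""
    partial = a ^ b                              # XOR: 1 when exactly one input is 1
    sum_bit = partial ^ carry_in
    carry_out = (a & b) | (partial & carry_in)   # carry when at least two inputs are 1
    return sum_bit, carry_out

def add_binary(x: str, y: str) -> str:
    """Ripple-carry addition of two binary strings, such as '1011' + '0110'."""
    width = max(len(x), len(y))
    x, y = x.zfill(width), y.zfill(width)
    carry = 0
    bits = []
    # Work from the rightmost (least significant) bit, carrying leftward.
    for a, b in zip(reversed(x), reversed(y)):
        s, carry = full_adder(int(a), int(b), carry)
        bits.append(str(s))
    if carry:
        bits.append("1")
    return "".join(reversed(bits))

print(add_binary("1011", "0110"))  # 11 + 6 = 17, which is '10001'
```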

Transistors and logic gates offer the brute force necessary for the simulation of any specific operation.

The underlying principle of semiconduction is highly unlikely to change. Even the alternatives to existing semiconductor technologies still rely on the Boolean logic of transistors, meaning that the core of the idea is likely here for the long haul. Beyond that, it is unlikely that quantum computers, spintronics, or other such technologies can replace the signal amplification role of the transistor; after all, the transistor did not entirely replace the vacuum tube.[15] Quantum computing is interesting and could begin to supplant the binary transistor paradigm, but the technology is much further away than is generally acknowledged.[16] The period of time between the transistor and Facebook was over fifty years.

This should remind you of Gitelman and Pingree's axiom: new media do not completely replace the old; they resituate and redefine the uses of older media.[17] Moving forward, we can use these ideas to understand other technologies and the possible limits of computation. The magical innovation of the digital is the possibility that all information could be quantized and processed through logic gates.

Summary:

- Transistors are durable: they have no moving parts.

- Moore's Law: for decades, we have kept finding ways to fit more transistors into a smaller space at affordable prices (the number on a chip has roughly doubled every two years).

- Uniform fields of transistors enable high-level abstractions. These abstractions are what we call software.

2.3.1 Heat

All circuits produce heat. Electricity is the movement of electrons, and that physical motion really exists. Bitcoin miners struggle to secure adequate supplies of electricity, both for their systems and for cooling them. Resistance, whether designed into a circuit as resistors or present in every wire, turns electrical energy into heat. Heat in turn increases the resistance of conductors, which can cause other problems as well. Quantum computers and high-precision imaging sensors must be kept cold. Dissipating heat is an essential task in the design of new systems.
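The basic relationship here is Joule heating: the power turned into heat equals the current squared times the resistance. This sketch uses hypothetical values for the current and resistance; its point is that heat grows with the square of the current, so doubling the current quadruples the heat.

```python
def joule_heating_watts(current_a: float, resistance_ohm: float) -> float:
    """Power turned into heat by current flowing through a resistance: P = I^2 * R."""
    return current_a ** 2 * resistance_ohm

def heat_joules(current_a: float, resistance_ohm: float, seconds: float) -> float:
    """Total heat energy released over a period of time."""
    return joule_heating_watts(current_a, resistance_ohm) * seconds

# Hypothetical values: 2 A flowing through 0.5 ohm of resistance.
print(joule_heating_watts(2.0, 0.5))   # 2.0 W
print(joule_heating_watts(4.0, 0.5))   # doubling the current: 8.0 W
print(heat_joules(2.0, 0.5, 3600))     # one hour: 7200.0 J
```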

2.3.2 Abstractions

Many of our emerging technologies depend on layers built on top of transistors. Understanding how those systems work, and how they translate information into a meaningful form, is absolutely essential for understanding the future. The transistor and the qubit mean little if there is no language for implementing instructions on them. Even then, there is already vast computing power available. Aside from solving NP-hard problems, it is hard to see what new classes of operations will appear in this era. The most likely answer is that the abstractions we can process will go much further. This term will appear in another section, but it is useful to think about it now: the digital refers to the production of fast, useful abstractions.

2.4 Humans and Sensations

This section is not intended to defend the idea of humanism, but to say that the critical subject of this book is people. Those people have sensations, and this section asks how those sensations can be produced and reproduced. We are concerned here with understanding basic human physiological structures and the basic physics, chemistry, and biology of perception. Special attention will be paid to the idea of standardization, meaning the question of how we map different physical inputs to a sensation and what result may or may not be registered. This is a particularly powerful element of the analysis of the virtual, as we must account for the ways that meaning can actually be produced.

Note that this is limited to a fairly banal conception of five senses. This section of this book is intended to concern that which is highly unlikely to change, so ideas like expanded senses and ambient awareness are in section three of this book.

2.4.1 Hearing

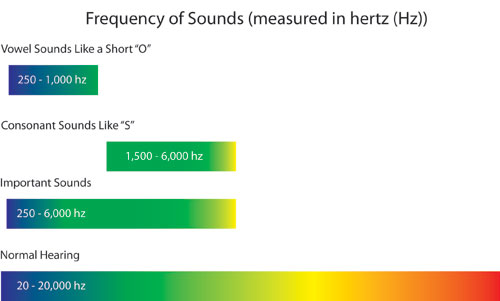

The sense of hearing is the result of complex processing of vibrations, primarily in the cochlea of the human ear. The ear typically responds to frequencies from about 20 to 20,000 hertz.[18] Younger people can often hear higher pitches than older people.

Sound as we know it is a longitudinal wave. The mechanics of a wave like this are slightly different from those of light waves, which are transverse, but they can be described in similar terms. An energy source, like a speaker or a voice, produces an energy wave that travels through a medium to reach a reception point. Energy can move around this space and be reflected back toward the source.

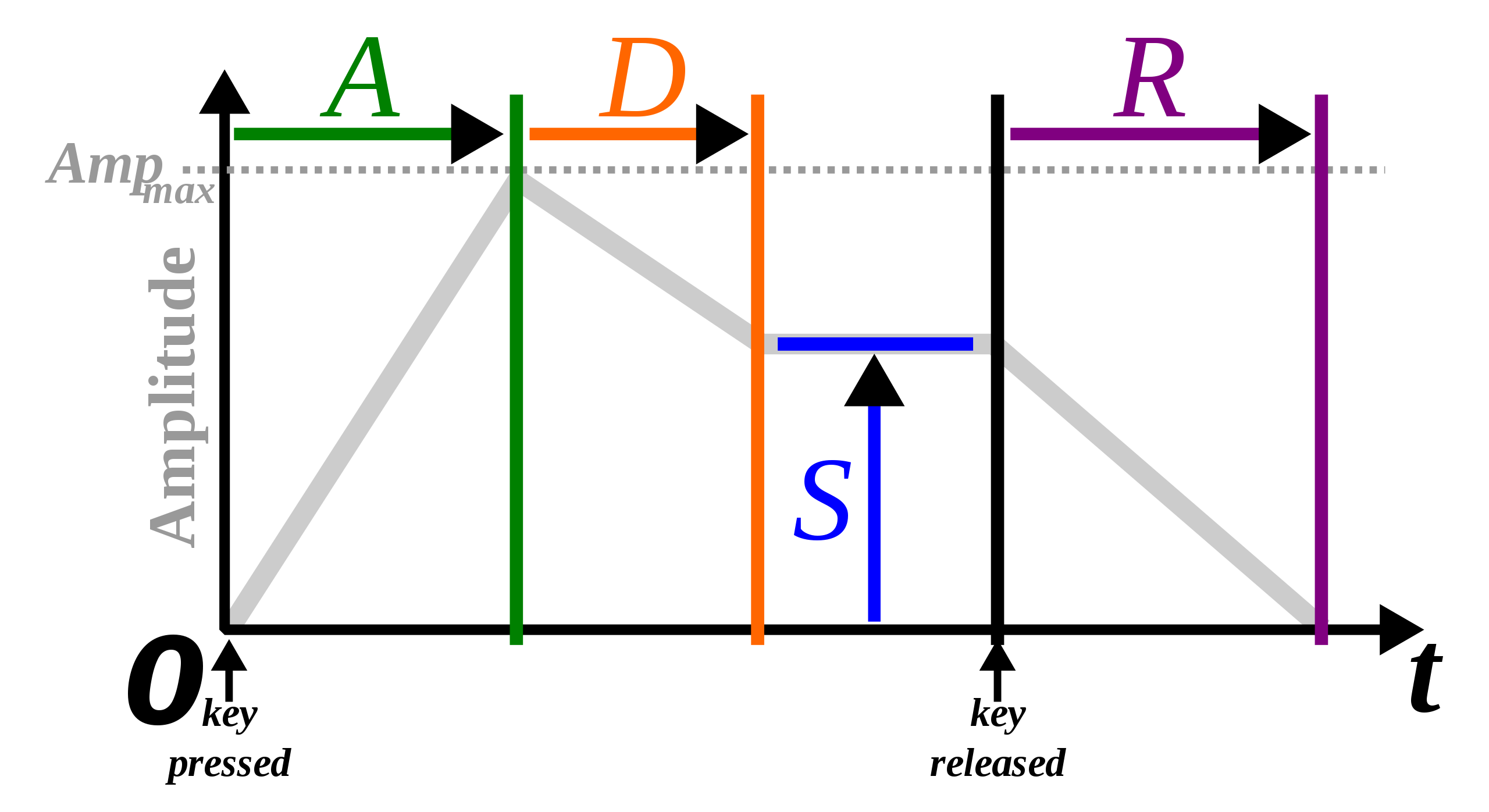

This is the acoustic envelope. Any sound has an attack, the initial moment when the sound is produced; a decay, as it falls back from that first peak; a sustain, the time during which it holds steady; and a release, as it fades away. This is an important idea for understanding both sound and sound processing. The envelope represents a sound as an energetic moment, and within that moment the elements of the wave can be further manipulated. If an entire envelope is heard in a reflection, complete with its attack, it is called an echo. When the reflected wave overlaps with the sustain of the original envelope, it is called reverb.
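Synthesizers and audio software commonly model the envelope as four linear segments, usually abbreviated ADSR. This sketch returns the loudness multiplier at a given moment; every duration and the sustain level are hypothetical defaults, and real instruments have curved rather than straight segments.

```python
def adsr_gain(t: float,
              attack: float = 0.01,
              decay: float = 0.1,
              sustain_level: float = 0.7,
              sustain_time: float = 0.5,
              release: float = 0.3) -> float:
    """Loudness multiplier (0 to 1) at time t seconds for a linear ADSR envelope.

    All default durations (in seconds) and the sustain level are hypothetical.
    """
    if t < 0:
        return 0.0
    if t < attack:                      # attack: rise from silence to the peak
        return t / attack
    t -= attack
    if t < decay:                       # decay: fall from the peak to the sustain level
        return 1.0 - (1.0 - sustain_level) * (t / decay)
    t -= decay
    if t < sustain_time:                # sustain: hold steady
        return sustain_level
    t -= sustain_time
    if t < release:                     # release: fade to silence
        return sustain_level * (1.0 - t / release)
    return 0.0

for t in (0.0, 0.005, 0.01, 0.06, 0.3, 0.76, 2.0):
    print(f"t={t:.3f}s gain={adsr_gain(t):.2f}")
```

To shape an actual sound, each sample of a waveform would be multiplied by the gain at that sample's time.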

Waves also interact with one another. When a wave meets its own reflection, the two can form what is called a standing wave, a fixed pattern of loud and quiet points where their energy combines. It is also possible for one wave to cancel out another of the same frequency if it is exactly out of phase with the original.
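Waves that overlap simply add together, sample by sample. This sketch adds two identical 440 Hz sine waves, shifting the second one's phase, and reports the loudest point of the combination; the sample rate and duration are arbitrary choices for illustration.

```python
import math

def peak_of_sum(phase_shift_rad: float,
                freq_hz: float = 440.0,
                sample_rate: int = 44_100,
                duration_s: float = 0.01) -> float:
    """Largest absolute value of two equal sine waves added together.

    The second wave is identical to the first except for its phase shift.
    """
    peak = 0.0
    for i in range(int(sample_rate * duration_s)):
        t = i / sample_rate
        first = math.sin(2 * math.pi * freq_hz * t)
        second = math.sin(2 * math.pi * freq_hz * t + phase_shift_rad)
        peak = max(peak, abs(first + second))
    return peak

print(f"in phase:          {peak_of_sum(0.0):.3f}")          # close to 2: reinforcement
print(f"quarter cycle off: {peak_of_sum(math.pi / 2):.3f}")  # about 1.41
print(f"out of phase:      {peak_of_sum(math.pi):.3f}")      # cancellation
```

In phase, the waves reinforce each other; half a cycle out of phase, they cancel. Noise-cancelling headphones exploit the second case.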

There are two dimensions we should consider: the frequency of a sound and its energy level. A central reference note on the piano is middle C (about 261.6 hertz); the A above it, the same pitch as a viola's A string, is tuned to 440 hertz. Your instructor will likely use a frequency generator to produce some of these tones and harmonics. If they do not, you should use a program like GarageBand, or just play around with the random pianos that seem to turn up in every college dorm, to think about these concepts.
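In twelve-tone equal temperament, the tuning system most modern pianos are designed around, each semitone multiplies the frequency by the twelfth root of two, so twelve semitones double it. This sketch, assuming A = 440 Hz as the reference, shows how middle C's frequency follows from the A above it.

```python
def note_frequency(semitones_from_a4: int, a4_hz: float = 440.0) -> float:
    """Twelve-tone equal temperament: each semitone multiplies frequency by 2 ** (1/12)."""
    return a4_hz * 2 ** (semitones_from_a4 / 12)

print(round(note_frequency(0), 2))    # A above middle C: 440.0
print(round(note_frequency(-9), 2))   # middle C, nine semitones lower: 261.63
print(round(note_frequency(12), 2))   # the A one octave up: 880.0
```

Equal temperament is itself one of the schemes for organizing tones, and other tuning systems divide the octave differently.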

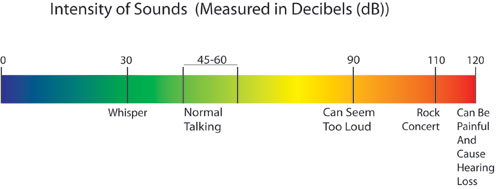

The energy of sound is expressed in decibels. This is a logarithmic scale: an increase of ten decibels is ten times the energy level, and an increase of twenty decibels is one hundred times. Thus, if an employer were to exceed the 85 dB threshold commonly used for hearing protection by even a few decibels, it would be a large increase in energy level.
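The decibel arithmetic can be made concrete. A change of d decibels corresponds to an energy ratio of 10 raised to d/10. This sketch converts in both directions; the 91 dB example is a hypothetical exposure six decibels above the threshold.

```python
import math

def intensity_ratio(decibels: float) -> float:
    """How many times more sound energy a change of `decibels` represents."""
    return 10 ** (decibels / 10)

def decibel_change(ratio: float) -> float:
    """The decibel change corresponding to an energy ratio."""
    return 10 * math.log10(ratio)

print(intensity_ratio(10))                  # 10.0
print(intensity_ratio(20))                  # 100.0
print(round(intensity_ratio(3), 2))         # about double the energy
print(round(intensity_ratio(91 - 85), 1))   # 6 dB over the threshold: about 4x
```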

Reflections can be adjusted. Sounds can be absorbed: an anechoic chamber uses large absorptive wedges to eliminate reflections. Sound can also be diffused by these wedges. When a wave strikes a surface with a great number of angled faces, its reflections return spread out over time. This is critical: once the wave is broken into many smaller reflections arriving at different moments, the reflected sound at any one moment is dramatically reduced.

Reflection can be used strategically in designing spaces that benefit from strong reflections, like a lecture hall. Among the reasons why people enjoy singing in the shower is the propensity of a small space with hard, parallel walls to form standing waves; further, the warm, humid air of a shower carries sound slightly differently than dry air does. This is an important point: sound waves propagate differently depending on the medium.

Objects producing sounds are also limited by their resonant frequencies. If one plucks a string, the wavelength of its fundamental is constrained by the total length of the string (it is twice that length). Harmonics may sound at whole-number multiples of that frequency, but the fundamental frequency of the sound will remain the same.
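The same relationship explains both the string and the shower. For a wave that must fit between two fixed ends, the fundamental is the wave speed divided by twice the length, and the harmonics are whole-number multiples of it. This sketch assumes sound travels at about 343 m/s in room-temperature air and uses a hypothetical shower stall 1 meter across.

```python
SPEED_OF_SOUND_M_S = 343.0  # in air at about 20 °C


def fundamental_hz(length_m: float, wave_speed_m_s: float) -> float:
    """Lowest resonant frequency for a wave fixed at both ends: f1 = v / (2L).

    Applies to a string fixed at both ends (v = wave speed along the string)
    and to sound bouncing between two parallel walls (v = speed of sound).
    """
    return wave_speed_m_s / (2 * length_m)


def harmonic_series(f1: float, count: int = 5) -> list[float]:
    """Whole-number multiples of the fundamental: f_n = n * f1."""
    return [n * f1 for n in range(1, count + 1)]


# A hypothetical shower stall with parallel walls 1 meter apart.
shower_f1 = fundamental_hz(1.0, SPEED_OF_SOUND_M_S)
print(shower_f1)                      # 171.5
print(harmonic_series(shower_f1, 4))  # [171.5, 343.0, 514.5, 686.0]
```

A singer whose notes land near these frequencies gets an extra boost from the room, which is part of the shower's flattering effect.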

A barbershop quartet is a fascinating study in harmonics. If you listen to a group of a few singers, you can hear more tones than should be present. Why? Because when the singers tune closely, the harmonics (overtones) of their voices line up and reinforce one another, producing tones that no single singer is singing.

How is this translated into the brain? Humans take in sound through the cochlea. Vibrations reach it either directly, through the bones of the skull, or through the eardrum and a chain of small bones in the middle ear; the cochlea in the inner ear then processes them. Shera, Guinan, and Oxenham describe the role of the inner ear:

The mammalian cochlea acts as an acoustic prism, mechanically separating the frequency components of sound so that they stimulate different populations of sensory cells. As a consequence of this frequency separation, or filtering, each sensory cell within the cochlea responds preferentially to sound energy within a limited frequency range. In its role as a frequency analyzer, the cochlea has been likened to a bank of overlapping bandpass filters, often referred to as “cochlear filters.” The frequency tuning of these filters plays a critical role in our ability to distinguish and perceptually segregate different sounds. For instance, hearing loss is often accompanied by a degradation in cochlear tuning, or a broadening of the cochlear filters. Although quiet sounds can be restored to audibility with appropriate hearing-aid amplification, the loss of cochlear tuning leads to pronounced, and as yet largely uncorrectable, deficits in the ability of hearing-impaired listeners to extract meaningful sounds from background noise.[19]

The research from which this quote was extracted concerns the tuning of the cochlea's filters, which determines the bands within which the ear can actually distinguish a signal. This plays a critical role in understanding which kinds of filtering and sound modification are worth doing: it would make little sense to increase the volume of what could not be heard in the first place.

Much of what we would understand as semantically meaningful activity takes place in the relatively low end of this range: much of the energy of the human voice falls between roughly 250 and 1,000 hertz. Once hair cells at a particular point in the cochlea are activated, a relatively small number of neurons respond, indicating a tone at that point. Sound perception is limited to the particular bands where the hair cells are capable of processing a vibration.

Sound is standardized through frequency, envelope, and energy level. The special forms of sound organization that take place over time, through the organization of tones, are called music. The schemes by which we organize tones are highly likely to change.

2.4.2 Vision

Vision, in humans, relies on the retina to interpret light focused by the lens. This information is then relayed through the optic nerve to the brain, where it is processed by a number of regions. Although the exact process by which we recognize particular forms is still unknown, the brain appears to first process edges and then to work among multiple sets of edges to form an image.[20]

On the level of the retina, there are two distinct kinds of receptors: rods and cones. Rods are distributed outside the central fovea; they are highly sensitive to dim light and play little role in color.[21] Cones are concentrated in the foveal region.

Cones need more light than rods, but they respond more quickly and detect color. There are three kinds of cones, S, M, and L (short, medium, and long wavelength), each most sensitive to a different part of the spectrum.

Rod sensitivity peaks between that of the S and M cones. In the fovea, each cone cell has a nearly private line to the optic nerve, while many rods share a single connection.[22] It is important to note that there are far more L and M cones than S cones.[23] Every receptor in the retina is thus tuned to some particular range of wavelengths; it is not simply that the cones see color while the rods do not. At the center of the fovea, the retina packs roughly 150,000 cones per square millimeter.[24] By contrast, a high-resolution full-frame digital camera sensor has a pixel density on the order of 40,000 to 50,000 pixels per square millimeter. Most display systems operate at much lower resolutions.
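The camera side of this comparison is simple arithmetic: pixel count divided by sensor area. This sketch assumes a hypothetical 42-megapixel sensor in the standard 36 by 24 millimeter full-frame format and compares it with the foveal figure cited above.

```python
def pixels_per_mm2(megapixels: float, width_mm: float, height_mm: float) -> float:
    """Average pixel density of a rectangular image sensor."""
    return megapixels * 1_000_000 / (width_mm * height_mm)

# A hypothetical 42-megapixel sensor in the 36 x 24 mm "full-frame" format.
camera = pixels_per_mm2(42, 36, 24)
fovea = 150_000  # cones per square millimeter at the center of the fovea
print(f"camera: {camera:,.0f} px/mm^2; fovea is about {fovea / camera:.1f}x denser")
```

The comparison is rough: the eye reaches this density only in the small foveal center, while a sensor is uniform across its surface.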

Beyond resolution, the eye and the sensor have different refresh rates. Historically, the normal flicker detection threshold for the eye was understood to be around 70 Hz (just above the refresh rate of an analog television), although research has indicated that flicker in light with a spatial edge can be perceived at much higher frequencies.[25]

The underlying structures of artistic composition make sense given that the eye relies on a combination of edge and color detection: from line through variety, the basic elements and principles of art work with these perceptual mechanisms. At the same time, there are surely codes that interact with the nature of perception itself. More on this in the next section.

Depth Perception

Most depth cues are monocular (mono-optical): they work even with a single eye.[26]

| Cue | Description |
|---|---|
| Occlusion | One thing is in front of another |
| Parallax | Moving your head shifts nearby objects more than distant ones, revealing a new image |
| Size | Things in the distance look small |
| Linear Perspective | Lines appear to converge toward the horizon |
| Texture | At a distance, textures grow finer until they are not visible |
| Atmosphere | Things in the distance are hazy |
| Shadows | Light sources produce indications of depth |
| Convergence | The eyes turn inward to converge on nearby objects (binocular) |
| Stereopsis (Binocular Disparity) | Each eye sees a slightly different image (binocular) |

As you may have noticed, parallax (a monocular cue) depends on the motion of the head. This demonstrates a degree of sensory integration with touch, since proprioception of the head is involved.[27] Modally, the senses are more integrated than disintegrated.
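Both stereopsis and parallax rely on the same triangle: two viewpoints a known distance apart (the baseline) see a point shifted by an amount (the disparity) that shrinks as the point gets farther away. Computer stereo vision, including the depth sensing in some headsets, commonly uses the relation depth = focal length × baseline / disparity for two parallel cameras. In this sketch, the focal length in pixels is a hypothetical value, and the 6.3 cm baseline is an assumed typical spacing between human eyes.

```python
def depth_from_disparity(focal_length_px: float, baseline_m: float, disparity_px: float) -> float:
    """Distance to a point seen by two parallel cameras: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive; zero means the point is infinitely far away")
    return focal_length_px * baseline_m / disparity_px

# Hypothetical camera with a 1000-pixel focal length, cameras spaced like human eyes.
for d in (100, 10, 1):
    print(f"disparity {d:>3} px -> depth {depth_from_disparity(1000, 0.063, d):.2f} m")
```

Because disparity falls off quickly with distance, binocular cues are most useful up close; far away, the monocular cues in the table do most of the work.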

2.4.2.1 Color

Color belongs in this section in a particular sense: the debate over what it is will not reach a definitive resolution. What does this mean? Color is ultimately a combination of an object, light, and a perceiver. Where color resides among these sites is an old philosophical debate. What is especially important to understand about color is that it is not separate from vision itself. Communication and art programs commonly teach color as something secondary to, and less important than, line or form. As you have already read, the division between color and non-color receptors is dubious: rods are most sensitive to a sort of blue-green. The idea that the brain first produces a colorless world of outlines, which it then colors in, is known as the coloring book hypothesis. As Chirimuuta describes, research on vision does not bear out this theory: color is processed together with edge detection.[28]

At the same time, color vision deficiency is common, especially among males. It is best practice to NOT use color as the primary means of encoding information, as many people are unable to detect certain hue differences. Changes on an evolutionary scale clearly belong in the less-likely category for this book.
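Because red-green deficiencies are the most common form, one practical check is whether colors that encode different meanings also differ in lightness, not just hue. The sketch below uses the relative-luminance and contrast-ratio formulas from the Web Content Accessibility Guidelines (WCAG 2.x); the specific colors are illustrative choices, and a luminance check is only a proxy, not a simulation of color vision deficiency.

```python
# Sketch: compare two colors by WCAG 2.x relative luminance and contrast ratio.
# The color choices below are illustrative assumptions.


def _linearize(channel: int) -> float:
    """Convert an 8-bit sRGB channel to linear light (WCAG 2.x definition)."""
    c = channel / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(rgb: tuple[int, int, int]) -> float:
    """Weighted sum of linear R, G, B; green dominates perceived lightness."""
    r, g, b = (_linearize(v) for v in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(a: tuple[int, int, int], b: tuple[int, int, int]) -> float:
    """Ratio from 1 (no lightness difference) to 21 (black on white)."""
    lighter, darker = sorted((relative_luminance(a), relative_luminance(b)), reverse=True)
    return (lighter + 0.05) / (darker + 0.05)


RED, GREEN = (255, 0, 0), (0, 128, 0)
BLACK, WHITE = (0, 0, 0), (255, 255, 255)

print(round(contrast_ratio(RED, GREEN), 2))    # close to 1: mostly a hue difference
print(round(contrast_ratio(BLACK, WHITE), 2))  # 21.0
```

A ratio near 1 means the two colors differ almost entirely in hue, so a viewer who cannot separate those hues may see them as nearly the same; WCAG asks for at least 4.5:1 for normal body text.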

Attempts at standardizing color hinge on reproduction. Although Pantone is generally known for fun research on color trends, its real products are specialty inks that can be used across product classes. Pantone produces educational materials that can help designers understand what a particular color is, at least insofar as it can be recreated.

2.4.3 Touch

To begin with, there are a few major categories of touch perception: mechanical (pressures and vibrations), temperature, pain, and proprioception (the current position and configuration of the body).

In terms of processing, much of the work of touch sensation is accomplished by ganglia, with the majority of touch neural structure devoted to the perception of pain and heat.[29] This paragraph is directly informed by Abraira and Ginty’s review in the journal Neuron. Neurons that respond to touch come in low- and high-threshold variants. These differences are thought to map onto the neurons’ different conduction velocities, which depend on features such as myelination (being covered with a protective insulator). Hair also plays an important role: hairs are physical mechanisms that produce sensation, and the sensation varies with hair type. Receptors also differ in adaptation rate: slowly adapting receptors keep firing while a stimulus is sustained, whereas rapidly adapting receptors respond mainly when a stimulus starts, stops, or changes, and these differences are likely key to perceiving textures. Fast-adapting fibers have far more intense reactions. The use of such fibers is clear: sometimes you need a really strong touch to tell you to move your arm, but you don’t want that signal repeated too often. Yet another class of receptors includes Pacinian corpuscles, which are important because they can meaningfully transmit vibration frequency. The highest density of these receptors is found in the fingertips. As Abraira and Ginty note in their consideration of the integrative theory of touch:

Our skin, the largest sensory organ that we possess, is well adapted for size, shape, weight, movement, and texture discrimination, and with an estimated 17,000 mechanoreceptors, the human hand, for example, rivals the eye in terms of sensitivity.

They theorize that the dorsal horn of the spinal cord is akin to the retina in the perception of touch, serving as an intermediate rendering point for incoming touch information, although some touch information may bypass it through another channel.
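The contrast between slowly and rapidly adapting receptors can be made concrete with a toy model. The assumption here is deliberately crude: the slowly adapting response is simply proportional to how far the skin is pressed, and the rapidly adapting response is proportional to how much the pressure just changed. Real afferents have thresholds, saturation, and spiking dynamics that this sketch ignores, and the gain and stimulus values are hypothetical.

```python
# Toy model of receptor adaptation (an illustrative assumption, not a
# physiological model). Inputs are skin indentation sampled over time steps;
# outputs are firing rates in arbitrary units.


def slowly_adapting(indentation: list[float], gain: float = 10.0) -> list[float]:
    """Toy SA response: proportional to how far the skin is pressed right now."""
    return [gain * depth for depth in indentation]


def rapidly_adapting(indentation: list[float], gain: float = 10.0) -> list[float]:
    """Toy RA response: proportional to how much the pressure just changed."""
    rates = []
    previous = 0.0
    for depth in indentation:
        rates.append(gain * abs(depth - previous))
        previous = depth
    return rates


# A steady press held for four time steps, then released.
press = [0.0, 0.0, 1.0, 1.0, 1.0, 1.0, 0.0, 0.0]
print(slowly_adapting(press))   # [0.0, 0.0, 10.0, 10.0, 10.0, 10.0, 0.0, 0.0]
print(rapidly_adapting(press))  # [0.0, 0.0, 10.0, 0.0, 0.0, 0.0, 10.0, 0.0]

# A vibration: the RA-style response fires on every change.
buzz = [0.0, 0.5, 0.0, 0.5, 0.0, 0.5]
print(rapidly_adapting(buzz))   # [0.0, 5.0, 5.0, 5.0, 5.0, 5.0]
```

The rapidly adapting channel reports the start and end of a press and ignores the hold, yet it fires on every step of a vibration, which is roughly why vibration-sensitive receptors such as Pacinian corpuscles fall on the rapidly adapting side.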

The processing of touch perception is complex. Berger and Gonzalez-Franco hinge their research on the “cutaneous rabbit,” an illusion in which taps at two points on the skin produce a feeling that the touch moved between those points.[30] They use this illusion to open an important debate: is touch perceived as a connection between particular points on the skin and parts of the brain, or is touch far more tied into cognitive processes beyond the mapping of the skin? In their experiments, virtual reality equipment (an Oculus Rift) was used to produce an “out-of-body” touch illusion. What is important about their work is that they were able to produce out-of-body touch illusions even without corresponding visual stimuli, although visual information enhanced the effect. The sensation of touch is deeply tied to other cognitive features; it is not simply a push-button effect on the skin. This line of research can also be explored through work on affect and touch: in the absence of conscious reflection, touch can produce prosocial outcomes.[31]

As experiments like the cutaneous rabbit show, the problem of haptic translation is difficult. The limits of interpreting touch as haptics will be discussed at greater length in the next section, but the question becomes: can we actually deal with the sheer number of sensations that would be needed to replicate the world as we know it? Does a vibrating glove match the grain of velvet?

A further consideration is the mapping of the body as it relates to the position of limbs, organs, and the space around the self. Terekhov and O’Regan have proposed a model of the perception of space in which an agent secures an awareness of space as an unchanging medium, rigid displacements, and relative position.[32] This offers an important insight about the production of spatial awareness for both creatures and AI systems: an understanding of the world can be founded without a prior concept of space itself. In the context of human perception, this becomes something of a sixth sense of body position, movement, and force.[33] Tuthill and Azim argue that the perception of the body in space is critical for stability, protection, and locomotion.[34] Most animal motor functions depend on the feedback loop of the perceptual system, with a few notable exceptions. Generally, the feedback information provided by the proprioceptive systems of various species is similar, suggesting a common origin.

Heat and pain are less relevant. While there are clear media applications for hot and cold, the cases where pain responses would make sense are limited. One example is the Star Wars experience at Disney World, which uses haptic feedback vests to help guests perceive the impact of laser hits.[35] Of course, this is not true pain, just a gentle tap.

2.4.4 Taste

Taste is a combination of sensations. Some of what we understand as taste is smell, blended with texture, sight, and sound. As for the sense organ specific to taste, the key is the taste bud, which contains specialized chemical receptors.

Roper and Chaudhari report in their literature review for Nature Reviews Neuroscience that each taste bud contains three distinct types of receptor cells: Type 1 cells, of largely unknown function and highly heterogeneous makeup (about 50%); Type 2 cells, larger and spherical, which detect sugars, amino acids, and bitter compounds (about 33%); and Type 3 cells, which carry the mechanism for detecting sour and are distributed in patches around the mouth (2-20%).[36] The taste buds are distributed, along with touch and temperature receptors, in various structures around the mouth called papillae.[37] The review contains more specific information about the synaptic linkages for each type of cell; this is beyond the scope of our analysis for this book.

First, the simplistic four-flavor model is not supported by the research. The receptors of the taste buds can recognize many different compounds. Consider the amino acid lysine: it is nearly 70% as sweet as sugar, and one of the primary flavor elements of pork. Second, the idea that regions of the tongue are associated with particular flavors does not hold. Taste receptors are found in many places in the body, which makes sense, as they are sophisticated chemical detectors and many chemical detection tasks would seem to be key to human life.

Estimates of the total number of basic flavors range from five (salty, sweet, sour, bitter, and umami, the taste of glutamates) to twelve. Any number of chemicals can be detected by the taste buds described in the research. Some receptors detect carbon dioxide.[38] Spicy flavors are detected by the VR1 heat receptor (which helps keep you from burning yourself).[39] Hence the common-sense retort that spicy is not a taste; it is just hot. But hold on: the mouth contains not only a specific receptor for heat but also another receptor for the perception of coolness, which runs along the same set of nerves.[40] Why should flavor be limited to just one of the sets of chemical receptors whose nerves are enfolded into perception? There also seem to be receptors for calcium, zinc, maltodextrin, and histidine (linked to heartiness, or kokumi), and the sensation of fat may hinge on fatty acid chain length.[41] It is still unclear how the sense of salty works, yet we know from practical experience that salt can decrease the perception of bitter, and almost everyone enjoys a salty snack.

The hinge of this question is not biochemical but phenomenological: how do we know when a combination of chemical interactions in the taste bud becomes an independent flavor? Peter Andrey Smith, writing for a New York Times blog, noted that flavor scientists question how many different flavors should be recognized, given the lack of a firm basis for the idea of flavor.[42] More importantly, the body responds in many ways to taste alone, without actually swallowing the food. This, then, is the challenge for the role of flavor itself: how do we create a meaningful set of classifiers for such a robust and multidimensional experience? Is it enough to say sour when there are so many other sensations and descriptions? Is the oenological (wine) solution adequate, where experiences are described in a series of other, ontologically separate terms? Or does the attempt to encircle the description of a flavor become an endless hermeneutic game?

In a review of the commonalities of mammalian and insect taste, Yarmolinsky, Zuker, and Ryba note that insects and mammals have distinct neurological structures for taste.[43] On the other hand, despite extensive differences, insects and mammals seem to sort tastes into a somewhat similar framework: things that keep you alive (sugars and salts) and things that poison you (bitters). Innate flavor preferences are present, at least at the start; this is not to say that people cannot come to enjoy flavors that they first found overwhelming.

Standardization is quite difficult. I learned this working as a short-order cook in college, when many of my classmates who were from Nepal found the food of the North Dakota region to be entirely too bland. They described the experience of eating foods at the extreme limit of spicy as containing a different world of flavors that were not present in other cuisines, which is an idea supported in the spicy adaptation literature.[44] The pH of your saliva can change how sweet something tastes.[45] Absent the ability to truly standardize flavor, or even provide flavors that would not seem injurious to some audience members, it seems unlikely that any meaningful standard for human flavor perception could be created. If such a technology were possible, the implications for human health and industry would be profound.

2.4.5 Smell

Olfactory responses pose a number of fascinating challenges for future media technology. Scents are difficult to reproduce, requiring particular chemistry and delivery. Like the other senses described in this book, smells are culturally specific. Individual reactions between chemicals and the olfactory system come together to form a coherent experience of an odor. There are cultures devoted to a return to a more “natural” way of living that object to the low-odor presentation of many Americans. There are foods so offensive that they require active policing, like durian. Functional MRI research indicates that exposure to personally relevant aromas is tied to increased activity in the amygdala.[46] Research on smell remains woefully behind research on vision and hearing.[47] The lack of research on smell even allows organizations and companies to take a lead role in education.

Critical to the operation of the olfactory bulb is the flow of molecules over roughly four hundred types of receptors.[48] Dr. Thomas Cleland describes the process of learning smells this way:

“What we think that the first couple of layers of the olfactory system do is to build odors and define their sort of fuzzy boundaries,” Cleland continues. “You get this messy input, and the perceptual system in your brain tries to match it with what you know already, and based on what you expect the smell to be. The system will suggest that the smell is X and will deliver inhibition back, making it more like X to see if it works. Then we think there are a few loops where it cleans up the signal to say, ‘Yes, we’re confident it’s X.’”
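Cleland’s description reads almost like an iterative procedure: make a guess, feed it back to reshape the input, and repeat until confident. The sketch below is a loose illustration of that loop, not a model from Cleland’s lab; the templates, the five receptor channels, the `pull` strength, and the 0.99 confidence threshold are all hypothetical, and “pulling” the signal toward the guess is only a crude stand-in for the inhibitory feedback he describes.

```python
import math

# Hypothetical "learned" odor templates: activation levels over five
# made-up receptor channels (a real nose has roughly 400 receptor types).
TEMPLATES = {
    "coffee": [0.9, 0.1, 0.6, 0.0, 0.3],
    "citrus": [0.1, 0.8, 0.0, 0.7, 0.2],
    "smoke": [0.7, 0.0, 0.1, 0.1, 0.9],
}


def cosine(a: list[float], b: list[float]) -> float:
    """Similarity of two activation patterns (1.0 means the same shape)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def recognize(signal: list[float], pull: float = 0.3,
              confident: float = 0.99, max_loops: int = 10) -> tuple[str, float, int]:
    """Guess the closest template, push the signal toward it, and repeat.

    Returns (label, similarity, loops_used).
    """
    if max_loops < 1:
        raise ValueError("max_loops must be at least 1")
    signal = list(signal)
    for loop in range(1, max_loops + 1):
        # Hypothesis: which stored smell does this messy input resemble most?
        label = max(TEMPLATES, key=lambda name: cosine(signal, TEMPLATES[name]))
        score = cosine(signal, TEMPLATES[label])
        if score >= confident:
            return label, score, loop  # "Yes, we're confident it's X."
        # Feedback: move the representation part of the way toward the guess.
        target = TEMPLATES[label]
        signal = [s + pull * (t - s) for s, t in zip(signal, target)]
    return label, score, max_loops  # never reached the confidence threshold


noisy_coffee = [0.8, 0.3, 0.5, 0.1, 0.4]
print(recognize(noisy_coffee))
```

Because the feedback pushes toward whatever the first guess was, an ambiguous input tends to be “cleaned up” into the expected smell, which echoes the role of expectation in Cleland’s account.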

The future of smell research involves building massive processing models that might accurately capture the raw number of connections that could be made between different molecules and the bulb, and the processes of memory that then encode the experience of encountering a new smell. It is also clear that smell differs from taste or touch, as it involves even greater combinations and a recirculating, cybernetic movement toward sensation. Unlike the media of the instant, smell develops with an extended temporality.
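The scale of that modeling problem shows up even in back-of-the-envelope arithmetic. Assuming, as a deliberate simplification, that each of the roughly four hundred receptor types is either active or inactive (ignoring intensity, timing, and the bulb’s own processing), the number of possible activation patterns can be counted directly:

```python
import math

RECEPTOR_TYPES = 400  # approximate count of human olfactory receptor types

# Number of distinct patterns in which exactly k receptor types are active.
for k in (1, 2, 3, 10):
    print(f"{k} active: {math.comb(RECEPTOR_TYPES, k):,} patterns")

# Every possible on/off pattern across all receptor types.
all_patterns = 2 ** RECEPTOR_TYPES
print(f"any combination: a number with {len(str(all_patterns))} digits")
```

Even patterns involving only three active receptor types number 10,586,800, and the full on/off space is a 121-digit number; color vision, by contrast, starts from three cone types.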

There are many places that require careful olfactory planning. Alfred Taubman, an important developer of shopping centers, was careful to keep errant wafts of scent from food courts out of unwelcome places. Stores looking to build a unique brand, particularly those targeting young people, have been known to heavily perfume their entries. Disney World uses pipes to distribute the aroma of cookies in relevant places.

Expert practices in olfactory development include various approaches to tasting particular products. Meister, the coffee columnist for Serious Eats, reports that coffee tasters smell for aromas from enzymatic processes, sugar browning (including the Maillard reaction), and dry distillation.[49] Each of these categories has many subexpressions that home in on a combination of chemicals and relationships that make the smell meaningful.

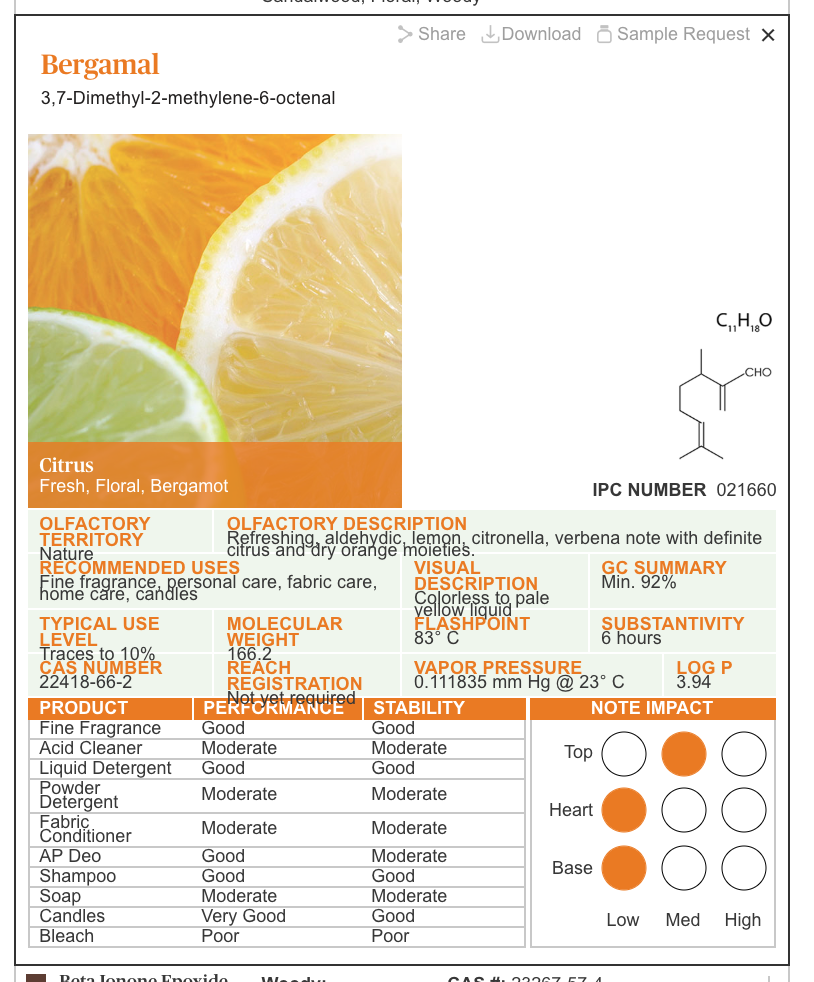

The standardization of olfactory experience is perhaps the most difficult of all. A more formal descriptive language comes from the IFF database, which provides an index of olfactory information much like Pantone’s color references.[50]

Notice the information provided: an olfactory description that makes reference to other olfactory experiences, along with use cases. What might be most important is the concept of chemical stability. Unlike a sound or an image, a distributed scent raises concerns about flammability, usage levels, density, and beyond.

Benson Munyan, a professor of somatics at the University of Central Florida, has demonstrated that olfactory cues can increase immersion in an experience, but the logistics of using these devices are challenging because errant scents linger.[51] Commercial approaches to smell offer far fewer scents. The initial challenges may appear to lie in the delivery of compounds (tubes, stickers, wafters), but on a deeper level there is no unifying substance for the production of different aromas. In the context of vision, there is a limited spectrum of light that the eye can process. Sounds are vibrations in a particular band processed by very specific elements in the cochlea. In both of these cases there is a unifying form of energy and spectrum. Olfactory response involves a much wider variety of media and receptors.
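Why errant scents linger can be sketched with the standard well-mixed room model, in which ventilation removes an airborne compound exponentially: C(t) = C0 · exp(-ACH · t), where ACH is air changes per hour. The release strength, detection threshold, and ventilation rates below are hypothetical, and real odorants can cling to fabrics and surfaces and then re-release, so actual fade times are often longer than this model suggests.

```python
import math


def concentration(c0: float, air_changes_per_hour: float, hours: float) -> float:
    """Well-mixed room model: ventilation removes scent exponentially over time."""
    return c0 * math.exp(-air_changes_per_hour * hours)


def hours_until_undetectable(c0: float, threshold: float,
                             air_changes_per_hour: float) -> float:
    """Solve c0 * exp(-ach * t) = threshold for t (0 if already below threshold)."""
    if c0 <= threshold:
        return 0.0
    return math.log(c0 / threshold) / air_changes_per_hour


# Hypothetical burst released at 100x a guest's detection threshold,
# under three hypothetical ventilation rates.
for ach in (0.5, 2.0, 6.0):
    minutes = 60 * hours_until_undetectable(100.0, 1.0, ach)
    print(f"{ach} air changes per hour: about {minutes:.0f} minutes to fade")

# Half an hour after release at 2 air changes per hour:
print(round(concentration(100.0, 2.0, 0.5), 1))  # about 36.8, still far above threshold
```

Since fade time grows with the logarithm of the release strength but shrinks only in proportion to ventilation, swapping one scent for another within a single experience is hard without aggressive air handling.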

As long as a basic chemical synthesis method for olfactory experiences remains elusive, as it likely will, experience design in these media will remain tied to particular places and to particular chemical compounds that might be strategically released. Although the IFF database provides the beginning of a theory of olfactory information, it is important to recall why Pantone colors exist in the first place: all color mixing methods have weaknesses in their underlying gamut. All reductive methods for the production of stimuli are problematic by their nature. Until micro-synthetic chemistry systems are available, olfactory virtual reality will not exist. The underlying revolution, a chemistry plant on a card, will fundamentally change the world. It is not a matter of all the chemicals that we might want to smell for fun: a system capable of producing such a selection of smells could also produce any number of industrial chemicals and drugs on a micro, on-demand basis.

2.5 Desire

People want.

This is the shortest and perhaps most important entry in this section. Bertrand Russell argued in his Nobel Prize lecture that the problem of the human condition is desire: in the first instance, the desire for the things that make survival possible; in the second instance, once survival was assured, desires for power and prestige. Power, for Russell, was almost always a violent drive; as that which allows one to make others do what they otherwise would not, it is troubling. Friedrich Nietzsche’s Genealogy of Morals documents the shift in axiology where even basic concepts of right and wrong are continually recirculated within the event horizon of desire.[52] Placing the symbolic codes by which we evaluate right and wrong as secondary to desire is a provocative and important move to this day.

People initially identify something that they want; this want could be satisfied, but often the chase for the thing becomes more satisfying than the thing itself.[53] Desire is unquenchable. Hannah Arendt supposed that the desire for meaning could be expressed, in its highest form, through creative action. Natality does not escape desire, but for Arendt it is the desire to make something new that is the highest point of the human condition, in actions that are often confused with work or labor.[54] For Russell, managing excess desire is the key. To address his diagnosis that war is a function of the desire for excitement, he proposed:

I think every big town should contain artificial waterfalls that people could descend in very fragile canoes, and they should contain bathing pools full of mechanical sharks. Any person found advocating a preventive war should be condemned to two hours a day with these ingenious monsters. More seriously, pains should be taken to provide constructive outlets for the love of excitement. Nothing in the world is more exciting than a moment of sudden discovery or invention, and many more people are capable of experiencing such moments than is sometimes thought.[55]

Desire will not change.

- “Electromagnetic Spectrum - Introduction,” accessed October 5, 2018, https://imagine.gsfc.nasa.gov/science/toolbox/emspectrum1.html. ↵

- Eamono Doherty, “The Need for a Faraday Bag,” Forensic Magazine, February 21, 2014, https://www.forensicmag.com/article/2014/02/need-faraday-bag. ↵

- Red Lion Broadcasting Co., Inc. v. FCC, 395 US 367 (1969). ↵

- “Miami Herald Publishing Company v. Tornillo,” Oyez, accessed October 5, 2018, https://www.oyez.org/cases/1973/73-797. ↵

- “Advantages of Fresnel Lenses | Edmund Optics,” accessed October 5, 2018, https://www.edmundoptics.com/resources/application-notes/optics/advantages-of-fresnel-lenses/. ↵

- “Color Systems - RGB & CMYK,” accessed October 5, 2018, https://www.colormatters.com/color-and-design/color-systems-rgb-and-cmyk. ↵

- Immanuel Maurice Wallerstein, World-Systems Analysis: An Introduction (Duke University Press, 2004). ↵

- Larry Downes and Chunka Mui, Unleashing the Killer App: Digital Strategies for Market Dominance (Harvard Business Press, 2000). ↵

- Sarah Zielinski, “Rare Earth Elements Not Rare, Just Playing Hard to Get,” Smithsonian, accessed October 5, 2018, https://www.smithsonianmag.com/science-nature/rare-earth-elements-not-rare-just-playing-hard-to-get-38812856/. ↵

- Gearóid Ó Tuathail and Gerard Toal, Critical Geopolitics: The Politics of Writing Global Space (U of Minnesota Press, 1996). ↵

- Evan Dashevsky, “A Remembrance and Defense of Ted Stevens’ ‘Series of Tubes,’” PCMAG, June 5, 2014, https://www.pcmag.com/article2/0,2817,2458760,00.asp. ↵

- “FCC Concludes Satellite Internet Is Good Enough for Rural Broadband,” Broadband Now (blog), February 16, 2018, https://broadbandnow.com/report/satellite-internet-good-enough-rural-broadband/. ↵

- Daniel Terdiman, “This Blimp Startup Is Taking on Google’s and Facebook’s Flying Internet Projects,” Fast Company, August 14, 2017, https://www.fastcompany.com/40453521/this-blimp-startup-is-taking-on-googles-and-facebooks-flying-internet-projects. ↵

- “Doped Semiconductors,” accessed October 5, 2018, http://hyperphysics.phy-astr.gsu.edu/hbase/Solids/dope.html. ↵

- “Introduction to Spintronics,” accessed October 5, 2018, https://www.physics.umd.edu/rgroups/spin/intro.html. ↵

- Larry Greenemeier, “How Close Are We--Really--to Building a Quantum Computer?,” Scientific American, accessed October 5, 2018, https://www.scientificamerican.com/article/how-close-are-we-really-to-building-a-quantum-computer/. ↵

- Lisa Gitelman and Geoffrey Pingree, “What’s New About New Media?,” in New Media 1740-1915 (Cambridge: MIT Press, 2004), xi–xxiii. ↵

- “Extended High Frequency Online Hearing Test | 8-22 KHz,” accessed October 5, 2018, https://www.audiocheck.net/audiotests_frequencycheckhigh.php. ↵

- Christopher A. Shera, John J. Guinan, and Andrew J. Oxenham, “Revised Estimates of Human Cochlear Tuning from Otoacoustic and Behavioral Measurements,” Proceedings of the National Academy of Sciences of the United States of America 99, no. 5 (March 5, 2002): 3318–23, https://doi.org/10.1073/pnas.032675099. ↵

- “How the Brain Recognizes What the Eye Sees - Salk Institute for Biological Studies,” accessed October 5, 2018, https://www.salk.edu/news-release/brain-recognizes-eye-sees/. ↵

- “Rods & Cones,” accessed October 5, 2018, http://www.cis.rit.edu/people/faculty/montag/vandplite/pages/chap_9/ch9p1.html. ↵

- Dale Purves et al., “Cones and Color Vision,” Neuroscience. 2nd Edition, 2001, https://www.ncbi.nlm.nih.gov/books/NBK11059/. ↵

- “Rods & Cones.” ↵

- Ibid. ↵

- James Davis, Yi-Hsuan Hsieh, and Hung-Chi Lee, “Humans Perceive Flicker Artifacts at 500 Hz,” Scientific Reports 5 (February 3, 2015): 7861, https://doi.org/10.1038/srep07861. ↵

- “Depth Cues in the Human Visual System,” accessed October 5, 2018, https://www.hitl.washington.edu/projects/knowledge_base/virtual-worlds/EVE/III.A.1.c.DepthCues.html. ↵

- Vito Pettorossi and Marco Schieppati, “Neck Proprioception Shapes Body Orientation and Perception of Motion,” Frontiers in Human Neuroscience, November 2014, https://www.frontiersin.org/articles/10.3389/fnhum.2014.00895/full. ↵

- M. Chirimuuta, Outside Color: Perceptual Science and the Puzzle of Color in Philosophy (Cambridge: The MIT Press, 2015). ↵

- Victoria E. Abraira and David D. Ginty, “The Sensory Neurons of Touch,” Neuron 79, no. 4 (August 21, 2013): 618–39, https://doi.org/10.1016/j.neuron.2013.07.051. ↵

- Christopher C. Berger and Mar Gonzalez-Franco, “Expanding the Sense of Touch Outside the Body,” in Proceedings of the 15th ACM Symposium on Applied Perception - SAP ’18 (the 15th ACM Symposium, Vancouver, British Columbia, Canada: ACM Press, 2018), 1–9, https://doi.org/10.1145/3225153.3225172. ↵

- Annett Schirmer et al., “Squeeze Me, but Don’t Tease Me: Human and Mechanical Touch Enhance Visual Attention and Emotion Discrimination,” Social Neuroscience 6 (June 1, 2011): 219–30, https://doi.org/10.1080/17470919.2010.507958. ↵

- Alexander V. Terekhov and J. Kevin O’Regan, “Space as an Invention of Active Agents,” Frontiers in Robotics and AI 3 (2016), https://doi.org/10.3389/frobt.2016.00004. ↵

- Joshua Klein et al., “Perception of Arm Position in Three-Dimensional Space,” Frontiers in Human Neuroscience 12 (August 21, 2018), https://doi.org/10.3389/fnhum.2018.00331. ↵

- John C. Tuthill and Eiman Azim, “Proprioception,” Current Biology 28, no. 5 (March 5, 2018): R194–203, https://doi.org/10.1016/j.cub.2018.01.064. ↵

- Anthony Levine, “Star Wars Virtual Reality at The Void in Disney World and Disneyland,” USA TODAY, May 22, 2018, https://www.usatoday.com/story/travel/experience/america/theme-parks/2018/05/22/star-wars-secrets-empire-void-virtual-reality/629435002/. ↵

- Stephen D. Roper and Nirupa Chaudhari, “Taste Buds: Cells, Signals and Synapses,” Nature Reviews. Neuroscience 18, no. 8 (August 2017): 485–97, https://doi.org/10.1038/nrn.2017.68. ↵

- “How Does Our Sense of Taste Work? - National Library of Medicine - PubMed Health,” accessed October 5, 2018, https://www.ncbi.nlm.nih.gov/pubmedhealth/PMH0072592/. ↵

- “Scientists Discover Protein Receptor for Carbonation Taste,” National Institutes of Health (NIH), September 27, 2015, https://www.nih.gov/news-events/news-releases/scientists-discover-protein-receptor-carbonation-taste. ↵

- James Gorman, “A Perk of Our Evolution: Pleasure in Pain of Chilies,” The New York Times, September 20, 2010, sec. Science, https://www.nytimes.com/2010/09/21/science/21peppers.html. ↵

- “TRPM8: The Cold and Menthol Receptor - TRP Ion Channel Function in Sensory Transduction and Cellular Signaling Cascades - NCBI Bookshelf,” accessed October 5, 2018, https://www.ncbi.nlm.nih.gov/books/NBK5238/. ↵

- Kokumi and fatty acid citations follow, the general point is that receptors are present for many chemicals. Lisa Bramen, “The Kokumi Sensation,” Smithsonian, accessed October 5, 2018, https://www.smithsonianmag.com/arts-culture/the-kokumi-sensation-78634272/; R. D. Mattes, “Oral Detection of Short-, Medium-, and Long-Chain Free Fatty Acids in Humans,” Chemical Senses 34, no. 2 (September 15, 2008): 145–50, https://doi.org/10.1093/chemse/bjn072. ↵

- Peter Andrey Smith, “Beyond Salty and Sweet: A Budding Club of Tastes,” Well (blog), July 21, 2014, https://well.blogs.nytimes.com/2014/07/21/a-budding-club-of-tastes/. ↵

- David A. Yarmolinsky, Charles S. Zuker, and Nicholas J. P. Ryba, “Common Sense about Taste: From Mammals to Insects,” Cell, 2009, accessed October 6, 2018, https://www.sciencedirect.com/science/article/pii/S0092867409012495. ↵

- Agneeta Thacker, “FYI: Are People Born With A Tolerance For Spicy Food?,” Popular Science, June 10, 2013, https://www.popsci.com/science/article/2013-06/fyi-are-people-born-tolerance-spicy-food. ↵

- Ken-ichi Aoyama et al., “Saliva PH Affects the Sweetness Sense,” Nutrition 35 (March 1, 2017): 51–55, https://doi.org/10.1016/j.nut.2016.10.018. ↵

- Rachel S. Herz et al., “Neuroimaging Evidence for the Emotional Potency of Odor-Evoked Memory,” Neuropsychologia 42, no. 3 (2004): 371–78. ↵

- Gordon M. Shepherd, “New Perspectives on Olfactory Processing and Human Smell,” in The Neurobiology of Olfaction, ed. Anna Menini, Frontiers in Neuroscience (Boca Raton (FL): CRC Press/Taylor & Francis, 2010), http://www.ncbi.nlm.nih.gov/books/NBK55977/. ↵

- “Learning, Memory, and the Sense of Smell,” Cornell Research, May 25, 2016, https://research.cornell.edu/news-features/learning-memory-and-sense-smell. ↵

- Serious Eats, “Advanced Coffee Tasting: What Your Coffee Smells Like,” accessed October 6, 2018, https://drinks.seriouseats.com/2012/04/coffee-cupping-aroma-what-coffee-smells-like.html. ↵

- International Flavors and Fragrances, “Bergamal,” accessed October 6, 2018, https://www.iff.com/en/smell/online-compendium. ↵

- “Why Smells Are So Difficult To Simulate For Virtual Reality,” UploadVR, March 9, 2017, https://uploadvr.com/why-smell-is-so-difficult-to-simulate-in-vr/. ↵

- Friedrich Nietzsche, On the Genealogy of Morals: A Polemic. By Way of Clarification and Supplement to My Last Book Beyond Good and Evil (Oxford University Press, 2008). ↵

- ? ↵

- Hannah Arendt, The Human Condition (Chicago: University of Chicago Press, 1998). ↵

- Bertrand Russell, “What Desires Are Politically Important?,” (Nobel Lecture, December 11, 1950). ↵